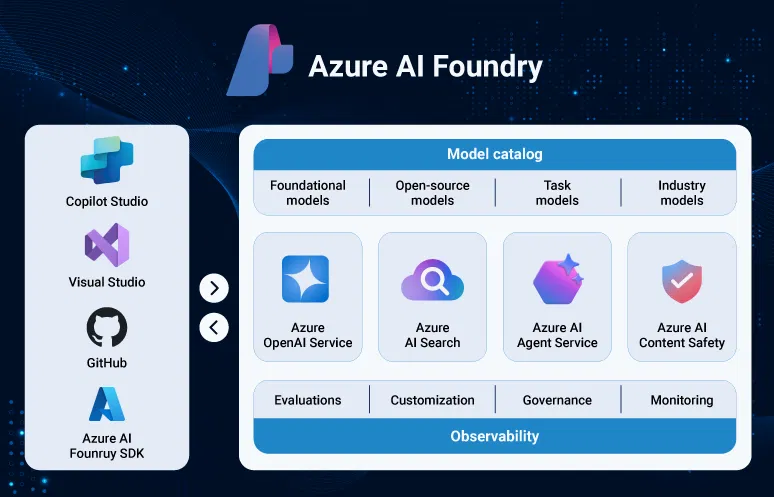

Azure AI Foundry is a unified web-based platform developed by Microsoft to build, deploy, and manage AI applications with ease. It was formerly known as Azure AI Studio.

Microsoft rebranded and relaunched it after expanding its capabilities, integrating it more deeply with the Azure platform, and positioning it as a single platform for building, governing, and scaling AI solutions. Azure AI Foundry makes the entire AI life cycle more efficient, from data preparation to model deployment.

This blog explores the architecture of Azure AI Foundry, its role in the Azure ecosystem, and why it stands out for large enterprises, and it looks ahead at the platform’s potential to drive the next wave of serverless AI. It also covers the platform’s features and functions and how they can help your business realize its full AI potential.

What is Azure AI Foundry?

Microsoft Azure AI Foundry is a unified platform that provides a shared environment for developing, training, deploying, and managing machine learning models. It is a central part of the Microsoft Azure cloud platform and builds on Azure’s infrastructure and services.

This arrangement enables Azure AI Foundry to tap into Azure’s scalability, security, and global reach. It uses Azure’s compute, storage, and networking capabilities to speed up AI development. It also provides several tools and services through a single interface, which simplifies AI development.

What are the key features of Azure AI Foundry?

Azure AI Foundry has various features that push businesses to think beyond traditional AI adoption. They help drive faster innovation cycles and help teams respond quickly to new opportunities.

-

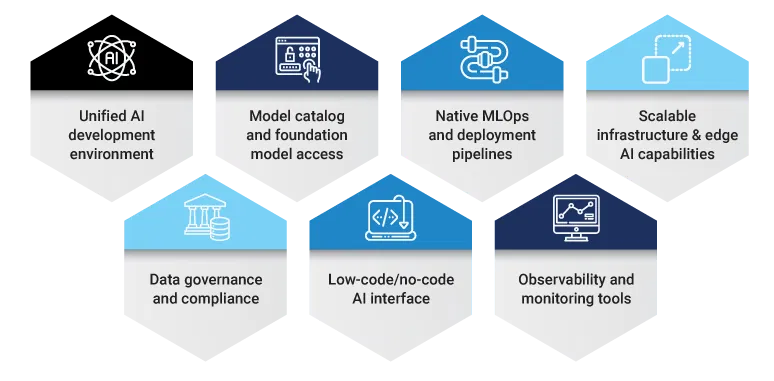

Unified AI development environment

Azure AI Foundry provides a unified experience across SDKs, CLI tools, and a web-based portal. It supports collaborative development through built-in version control, reusable assets, and notebook environments. This enables effective team collaboration and model lifecycle management.

- Model catalog and foundation model access

The platform has a curated library of foundation models from OpenAI, Hugging Face, Meta, and Mistral. Users can fine-tune these models or create their own models using the existing tools. This makes it simpler for organizations to customize AI solutions according to their business requirements.

- Native MLOps and deployment pipelines

Azure AI Foundry has native MLOps workflow support, with automated model training, testing, deployment, and monitoring. It supports CI/CD for AI models by integrating with GitHub and Azure DevOps.

- Scalable infrastructure and edge AI capabilities

It supports deployment at scale in the cloud, on-premises, or at the edge. Edge AI capabilities allow real-time data to be processed even in low-connectivity scenarios, which benefits IoT and predictive maintenance use cases.

- Data governance and compliance

Enterprise security in Azure AI Foundry includes native features such as role-based access control, encryption, and audit trails. It supports compliance with regulations such as GDPR and HIPAA to provide accountability and trust.

- Low-code/no-code AI interface

Its easy-to-use visual tools help non-technical users to contribute to AI projects. Drag-and-drop interfaces and templates reduce the effort needed to prototype quickly without extensive coding skills.

- Observability and monitoring tools

Monitoring tools help track model performance, flag drift, and examine outcomes in real time. Such visibility helps ensure that models work as expected in production and deliver on business goals.
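As a rough illustration of what flagging drift can mean in practice, the sketch below computes a Population Stability Index (PSI) between a baseline sample and a current sample of one numeric feature. This is a generic technique and not Azure AI Foundry's own drift algorithm; the function name, the equal-width binning, and the threshold mentioned in the comments are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between two non-empty samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 when the baseline is constant.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        eps = 1e-6
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: the current sample is shifted upward by 0.5.
baseline_scores = [i / 100 for i in range(100)]
current_scores = [x + 0.5 for x in baseline_scores]

psi = population_stability_index(baseline_scores, current_scores)
# A commonly cited rule of thumb treats PSI above 0.25 as a significant shift.
drift_flagged = psi > 0.25
```

A monitoring job could run a check like this on a schedule and raise an alert when `drift_flagged` is true; the right threshold depends on the feature and the use case.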

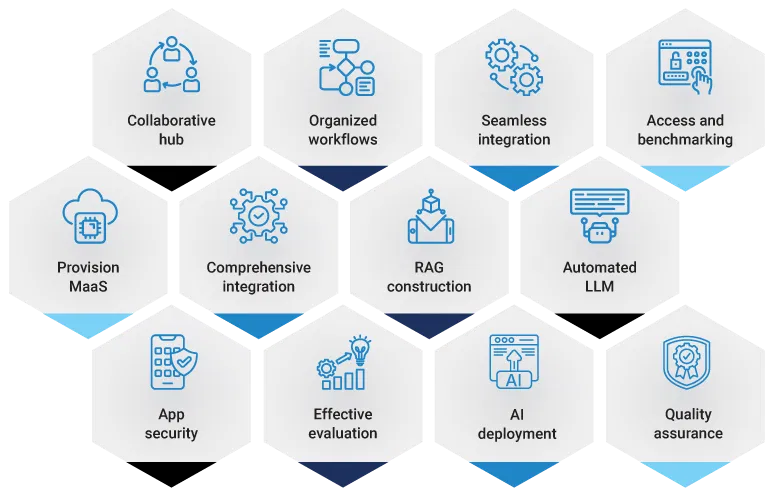

Key capabilities of the Azure AI Foundry portal

The Azure AI Foundry portal acts as a bridge between people, data, and technology. As a central hub, it reduces complexity and makes AI a more natural part of everyday business operations.

- Collaborative hub

Work together as a single team. Your Azure AI Foundry portal hub offers enterprise-level security and a shared environment with common resources and access to pretrained models, data, and compute.

- Organized workflows

Save state as you iterate from first idea to first prototype and then to first production deployment, and easily invite others to work with you along the way.

- Seamless integration

Work with your preferred development tools and frameworks, such as GitHub, Visual Studio Code, LangChain, Semantic Kernel, AutoGen, and more.

- Access and benchmarking

The platform includes a model catalog with 1,600+ models to explore and benchmark for generative AI applications, along with secure data integration tools, model customization, and enterprise-level governance.

- Provision MaaS

Deploy Models-as-a-Service (MaaS) via serverless APIs and hosted fine-tuning. This makes AI accessible without heavy infrastructure setup.

- Comprehensive integration

Integrate multiple models, data sources, and modalities to create richer AI solutions.

- RAG construction

Build Retrieval-Augmented Generation (RAG) applications over your secured enterprise data without fine-tuning.

- Automated LLM

Simplify prompt design and manage large language model workflows with built-in orchestration tools.

- App security

Protect applications and APIs using flexible filters, controls, and enterprise-grade safeguards.

- Effective evaluation

Measure and refine model performance with integrated evaluation flows tailored to your needs.

- AI deployment

Deploy AI innovations to Azure-managed infrastructure with ongoing monitoring and governance across environments to keep them running smoothly.

- Quality assurance

Regularly check deployed apps for production safety, quality, and token usage to maintain production-ready standards.
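To make the RAG construction capability listed above more concrete, here is a minimal, self-contained sketch of the retrieve-then-prompt pattern. It is an illustration only: the keyword-overlap scoring, the function names, and the sample documents are all assumptions, and a real deployment would typically replace the scoring with a vector or hybrid search index and send the prompt to a deployed model.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, document: str) -> int:
    """Count how many query tokens also appear in the document."""
    q, d = Counter(tokenize(query)), Counter(tokenize(document))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents with a non-zero overlap score, best first."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if overlap_score(query, doc) > 0]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Ground the question in retrieved context instead of fine-tuning a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical enterprise snippets used only for this example.
knowledge_base = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Shipping takes 3 days.",
]

prompt = build_prompt("How long do refunds take?", knowledge_base)
```

The point of the pattern is that the model's knowledge is extended at query time by the retrieved context, so the underlying model stays unchanged.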

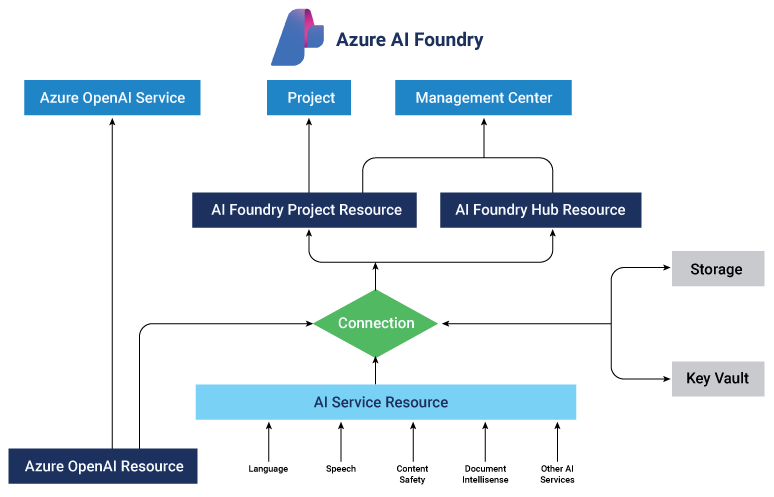

Azure AI Foundry architecture overview

The Azure AI Foundry architecture is designed to simplify the entire process of AI development, from data ingestion and experimentation to deployment and monitoring. It integrates various Microsoft Azure services and platforms to deliver scalable, secure, and reliable AI solutions.

- Azure OpenAI

Azure OpenAI offers access to capable generative AI models like GPT and Codex running on Microsoft Azure. It is the basis for developing smart applications that involve natural language understanding, content creation, code development, and summarization. Azure OpenAI makes it possible for businesses to tap into these large language models on a compliant, secure, and scalable cloud platform.

Developers can consume REST APIs or embed these models in custom applications using Azure SDKs. Security, governance, and responsible AI controls are integrated, providing enterprise-level reliability. Azure OpenAI is widely adopted in copilots, intelligent search, chatbots, and automation workflows.

- Management Centre

Management Centre is the command-and-control hub for all AI resources, configurations, and user access in the Foundry ecosystem. It provides a single, centralized dashboard to manage infrastructure, model deployments, user roles, billing, and compliance monitoring.

It gives managers insight into performance data, cost-optimization techniques, and AI management policies. The Management Centre centralizes controls to ensure consistency, transparency, and accountability across AI development teams.

- Azure AI Foundry Hub

Azure AI Foundry Hub is the project management layer and the collaborative workspace of the architecture. It enables cross-functional teams such as data scientists, ML engineers, and software developers to start, co-create, and monitor AI projects. It provides access to reusable templates, pre-configured compute environments, datasets, and tools required to accelerate experimentation and prototyping.

The Hub also integrates with GitHub and Azure DevOps so that teams can apply DevOps and MLOps best practices. Its purpose is to break down silos between team members and make AI development more agile and traceable.

- Azure AI Foundry Project

An Azure AI Foundry Project is a focused environment for one particular AI solution or application. Within each project, users can set up data ingestion pipelines, model training workflows, and deployment targets. It contains all resources, such as data sources, notebooks, trained models, compute instances, and logs, in one isolated and controlled environment.

Projects also support CI/CD pipelines and make it simple to clone, version, and audit work. They are designed for reusability and shorten the path from prototype to production.

- Connections

Connections are the trusted links between Azure AI Foundry and data sources (internal or external), APIs, services, and compute environments. They are needed to retrieve datasets from enterprise systems, third-party APIs, or other Azure services such as Azure Storage, Azure SQL Database, and Azure Event Hubs.

Each connection is governed by authentication, data access control, encryption, and monitoring policies. Connections enable seamless data flow and integration between the components of an AI solution, including real-time and batch processing pipelines.
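For the Azure OpenAI component described earlier in this section, the following sketch shows roughly what consuming a chat model over REST looks like using only the Python standard library. The resource name, deployment name, key, and `api-version` value are placeholders or assumptions; check the current Azure OpenAI REST reference for the versions available to your resource. The example only builds the request and does not send it.

```python
import json
import urllib.request


def build_chat_request(
    endpoint: str,
    deployment: str,
    api_key: str,
    user_message: str,
    api_version: str = "2024-02-01",  # assumed version; confirm against the docs
) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for an Azure OpenAI deployment."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    body = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder values for illustration; none of these refer to a real resource.
request = build_chat_request(
    endpoint="https://example-resource.openai.azure.com/",
    deployment="my-deployment",
    api_key="PLACEHOLDER_KEY",
    user_message="Summarize our return policy in one sentence.",
)

# To actually call the service, something like the following would be used:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)["choices"][0]["message"]["content"]
```

In production code the key would normally come from a secret store or be replaced by Microsoft Entra ID authentication rather than being passed around as a string.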

Use cases of Azure AI Foundry

The strength of Azure AI Foundry lies in how versatile it can be across industries. Use cases are entry points to explore what’s possible for your business. They are doorways to reimagining processes in ways you might not have thought of yet.

- Improving customer experience with AI

Azure AI Foundry provides capabilities, including Azure Cognitive Services and pre-trained models, that help deliver personalized customer experiences and proactive support. For instance, the Text Analytics API can be used for sentiment analysis to understand customer feedback, and the Computer Vision API can be used to personalize product recommendations based on visual preferences. Chatbots based on Azure OpenAI models provide real-time, personalized customer service and tailored recommendations. Together, these capabilities help increase customer satisfaction.

- Optimizing business operations with AI

Azure AI Foundry enables automation, process optimization, and efficiency improvement with tools like Azure Machine Learning and DevOps integration. Applications include deploying machine learning models that automatically detect fraud by examining transaction patterns. It also helps automate supply chain operations, using predictive analytics to forecast demand and reduce disruptions. Automating invoice processing with a document recognition API helps reduce manual effort and errors.

- Driving innovation with AI-driven solutions

Azure AI Foundry supports innovation by providing pre-existing templates, models, and tools for building new products and services. Capabilities such as Retrieval-Augmented Generation (RAG) allow the development of industry-specific solutions by combining proprietary data with large language models, and fine-tuning allows the development of highly specialized applications. This lets businesses rapidly prototype and deploy Azure AI Foundry solutions tailored to specific industry demands.

The future of Azure AI Foundry

The future of AI is about ecosystems that keep adapting. Azure AI Foundry is moving toward that future with a focus on agility and serverless AI. For new-age companies, the takeaway is that AI is not only a solution for today but a platform that can grow with tomorrow’s changing demands.

With enterprise features and forward-looking innovations, Azure AI Foundry promises to shape the future of AI development. As more businesses embrace serverless AI, the ecosystem is evolving to help them remain competitive in a dynamic environment.

| Category | Focus area | Benefits |
|---|---|---|
| Innovations | Advanced AI model features | Future releases include tools for training and deploying models more efficiently, with deeper support for fine-tuning pre-trained models to suit industry requirements. |
| | Integration with emerging technologies | Quantum AI: Microsoft and IBM’s quantum computing efforts will utilize Azure AI Foundry to leverage its powerful computational capabilities, including optimization and cryptography. Edge AI: It will improve support for edge computing, placing AI models close to the data source and reducing latency for real-time applications such as predictive maintenance and autonomous systems. |
| | Enhanced observability tools | Future releases will provide more granular visibility into model performance, resource usage, and cost monitoring, allowing organizations to better optimize their AI investments. |
| Future-proofing businesses | Adapting to market demands | As industries change, Azure AI Foundry’s adaptability allows companies to adopt new technologies and expand operations seamlessly. Its multi-cloud and hybrid features help businesses operate across varied conditions. |
| | Driving innovation with Serverless AI | Serverless architecture frees teams from building and maintaining infrastructure so that they can concentrate on innovating and developing their product. Companies can deploy AI solutions more quickly and effectively to outpace their competitors. |

Drive business transformation with Azure AI Foundry

The speed of AI innovation requires platforms that are not just capable but also adaptable to the unanticipated challenges of the future. Azure AI Foundry provides businesses with the agility to test, the scale to expand, and the assurance to bring AI into core mission work.

Every enterprise faces a choice: wait for the future to arrive or start shaping it today. Don’t let innovation remain an idea; turn it into action. Azure AI Foundry is built to help you scale what works, evolve what’s next, and deliver impact where it matters most. Whether you’re refining existing systems or expanding into new possibilities, it’s your launchpad for meaningful, enterprise-ready AI.