The Model Context Protocol (MCP) is an open standard that defines how AI applications (chatbots, IDE assistants, or custom agents) communicate with external tools, data sources, and systems.

MCP acts as a universal translator between language models and the many systems that hold the information those models need.

Anthropic, an AI safety and research company known for building advanced language models, developed MCP and released it as an open-source project. MCP addresses a basic challenge: giving AI models reliable, secure access to the data they need, when they need it, wherever that data resides.

The origin and adoption of Model Context Protocol (MCP)

Anthropic introduced the Model Context Protocol (MCP) in November 2024 as an open-source project to improve how language models interact with data and tools.

Since its release, MCP has attracted significant interest. Early adopters were developers building internal tools for document search and code completion, and larger companies soon began applying MCP in production environments.

By early 2025, MCP support had spread across the tech industry. OpenAI and Google DeepMind, two leading AI research organizations, made their systems compatible with the protocol.

Around the same time, Microsoft added MCP support to its developer-facing products, including Copilot Studio, which helps companies build AI assistants, and Visual Studio Code, one of the most widely used code editors.

Key components of Model Context Protocol (MCP)

To understand how MCP connects AI systems to external capabilities, it helps to look at the parts that make it work. MCP defines three roles (hosts, clients, and servers) that together let an AI application hold context, reach external information, and respond more effectively. Once you understand these components, you will know how to apply MCP to your own use cases.

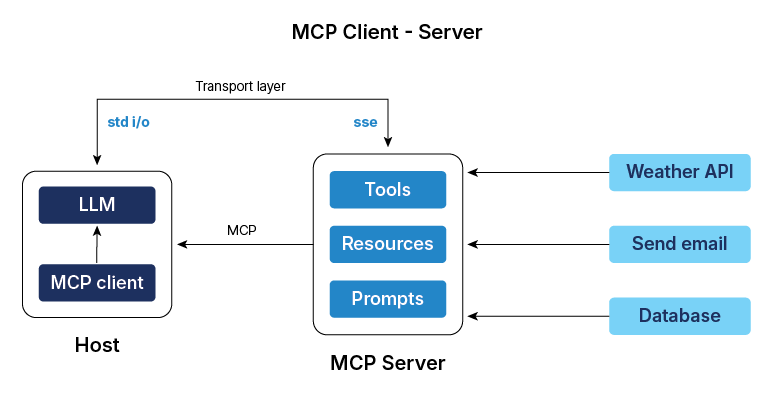

Hosts: These are the applications people interact with directly, such as chatbots, IDEs, and custom AI agents. For users, the host is the interface through which they access data, ask questions, and receive responses. Behind the scenes, the host may run several MCP clients to connect to different services, but this complexity stays hidden from the user.

Clients: Clients are the middle layer within the host application. Each client connects to exactly one MCP server. If a host needs to communicate with multiple servers, it creates one client per server: for example, three separate clients for GitHub, Slack, and Torii. This one-to-one mapping keeps connections clear and makes data flow easier to manage.

Servers: Servers are the external programs that expose capabilities to the AI. A server “wraps” a system or service (such as GitHub, Slack, or Torii) and converts its functionality into a standardized MCP form. Because every server presents the same uniform structure, the host knows exactly how to query available tools, read information, or request prompts, regardless of the service behind it.
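To make the three roles concrete, here is a minimal in-process sketch of the host/client/server relationship. The `Server`, `Client`, and `Host` classes, their methods, and the tool names are hypothetical illustrations, not part of any official MCP SDK, and a real client talks to its server over a transport such as stdio or HTTP rather than through a direct Python call. What the sketch does show is the one-to-one rule: the host creates a separate client for every server it connects to.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


# Hypothetical server: wraps one external service and exposes named tools.
@dataclass
class Server:
    name: str
    tools: Dict[str, Callable[..., str]]


# Hypothetical client: holds a connection to exactly one server.
@dataclass
class Client:
    server: Server

    def call(self, tool: str, **kwargs) -> str:
        # Forward the request to this client's single server.
        return self.server.tools[tool](**kwargs)


# Hypothetical host: the user-facing app that owns one client per server.
@dataclass
class Host:
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> None:
        # One-to-one mapping: every server gets its own new client.
        self.clients[server.name] = Client(server)


host = Host()
for s in [
    Server("github", {"list_repos": lambda: "repo-a, repo-b"}),
    Server("slack", {"list_channels": lambda: "#general"}),
    Server("torii", {"list_apps": lambda: "app-1"}),
]:
    host.connect(s)

print(len(host.clients))                            # 3
print(host.clients["slack"].call("list_channels"))  # #general
```

Keeping one client per server means a failure or slow response from one service stays isolated to its own connection.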

How does MCP work?

MCP might sound technical, but its workflow follows a clear sequence of steps. The walkthrough below shows how context moves through the system, from startup to the final answer, and why that matters for real-world use.

Initialization: When the host application starts, it creates one MCP client for each server it needs. Each client exchanges protocol versions and capabilities with its server through a handshake.
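The handshake is built on JSON-RPC 2.0 messages. The sketch below builds the `initialize` request and the follow-up `notifications/initialized` notification as we understand them from the MCP specification, using the `2024-11-05` protocol revision (later revisions exist). The helper function names, the client name `demo-host`, and the server name `github-server` are hypothetical, and the version check is deliberately simplified compared with the negotiation rules in the spec.

```python
import json

# One published MCP protocol revision; later revisions also exist.
PROTOCOL_VERSION = "2024-11-05"


def make_initialize_request(request_id: int, client_name: str) -> dict:
    """Build the JSON-RPC 2.0 'initialize' request a client sends first."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},  # optional client features; none declared here
            "clientInfo": {"name": client_name, "version": "0.1.0"},
        },
    }


def make_initialized_notification() -> dict:
    """Sent after the server replies; notifications carry no 'id'."""
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


def negotiate(server_reply: dict) -> str:
    """Simplified check that the server accepted our protocol version."""
    version = server_reply["result"]["protocolVersion"]
    if version != PROTOCOL_VERSION:
        raise ValueError(f"unsupported protocol version: {version}")
    return version


# Simulated reply from a hypothetical server named "github-server".
reply = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": "2024-11-05",
        "capabilities": {"tools": {}},
        "serverInfo": {"name": "github-server", "version": "1.0.0"},
    },
}

print(json.dumps(make_initialize_request(1, "demo-host")))
print(negotiate(reply))  # 2024-11-05
print(json.dumps(make_initialized_notification()))
```

The server's `capabilities` object is what tells the client which feature families (here, tools) it can ask about in the next step.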

Discovery: Each client asks its server which capabilities (tools, resources, prompts) it provides. The server answers with a list and descriptions.
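For tools, discovery is a `tools/list` request; resources and prompts have analogous `resources/list` and `prompts/list` methods in the MCP specification. The sketch below builds the request and parses a simulated reply. The reply content is made up for illustration, reusing the `fetch_github_issues` tool from this article, and the helper names are hypothetical. Each tool is described by a name, a human-readable description, and an `inputSchema` written in JSON Schema.

```python
def make_list_tools_request(request_id: int) -> dict:
    """JSON-RPC request asking a server which tools it offers."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


# Simulated server reply; the tool and its schema are illustrative.
sample_reply = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "tools": [
            {
                "name": "fetch_github_issues",
                "description": "List open issues in a GitHub repository",
                "inputSchema": {
                    "type": "object",
                    "properties": {"repo": {"type": "string"}},
                    "required": ["repo"],
                },
            }
        ]
    },
}


def parse_tools(reply: dict) -> dict:
    """Map each tool name to its description from a tools/list result."""
    return {t["name"]: t["description"] for t in reply["result"]["tools"]}


print(make_list_tools_request(2))
print(parse_tools(sample_reply))
```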

Context provision: The host application makes resources and prompts available to the user, and converts the tool descriptions into an LLM-friendly format, for example JSON function-calling definitions.
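As a rough illustration of that conversion, the function below maps an MCP tool descriptor onto the OpenAI-style `{"type": "function", "function": {...}}` layout, which is one common function-calling format; other LLM APIs use different shapes. Because MCP's `inputSchema` is already JSON Schema, it can usually be passed through as the function's `parameters`. The function name `to_function_definition` is a hypothetical helper.

```python
def to_function_definition(mcp_tool: dict) -> dict:
    """Convert an MCP tool descriptor into a function-calling definition.

    Assumes an OpenAI-style target layout; adapt for other LLM APIs.
    """
    return {
        "type": "function",
        "function": {
            "name": mcp_tool["name"],
            "description": mcp_tool.get("description", ""),
            # MCP's inputSchema is JSON Schema, which function-calling APIs accept.
            "parameters": mcp_tool["inputSchema"],
        },
    }


tool = {
    "name": "fetch_github_issues",
    "description": "List open issues in a GitHub repository",
    "inputSchema": {
        "type": "object",
        "properties": {"repo": {"type": "string"}},
        "required": ["repo"],
    },
}

defs = [to_function_definition(tool)]
print(defs[0]["function"]["name"])  # fetch_github_issues
```

The host would send `defs` to the LLM alongside the conversation so the model knows which tools it may request.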

Invocation: If the LLM determines that it needs a tool (for example, to answer the user’s query “What are the open issues in the ‘X’ repo?”), the host instructs the corresponding client to send an invocation request to the relevant server.
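In MCP, that invocation is a `tools/call` request carrying the tool name and its arguments. The sketch below turns a simulated LLM tool choice into such a request. The `llm_choice` dictionary stands in for whatever your LLM API returns and is an assumption, as is the helper name `make_tool_call_request`; the id counter reflects the JSON-RPC rule that a client should not reuse request ids within a session.

```python
import itertools

# Request ids should not be reused by the same client within a session.
_ids = itertools.count(1)


def make_tool_call_request(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 'tools/call' request for the chosen tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# Suppose the LLM emitted this tool choice for the user's question.
llm_choice = {"name": "fetch_github_issues", "arguments": {"repo": "X"}}
request = make_tool_call_request(llm_choice["name"], llm_choice["arguments"])
print(request)
```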

Execution: The server gets the request (e.g., fetch_github_issues with repo ‘X’), performs the underlying computation (calls the GitHub API), and obtains the result.
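On the server side, execution amounts to looking up the requested tool and running it with the supplied arguments. In this sketch, `github_api_open_issues` is a hypothetical stand-in that returns canned data instead of calling the real GitHub API, and the `TOOLS` registry and `execute` function are illustrative names, not an official SDK interface.

```python
from typing import Callable, Dict, List


def github_api_open_issues(repo: str) -> List[str]:
    """Hypothetical stand-in for the GitHub API; returns canned data."""
    fake_data = {"X": ["#12 Login fails on Safari", "#15 Typo in README"]}
    return fake_data.get(repo, [])


def fetch_github_issues(repo: str) -> List[str]:
    """Tool implementation that wraps the underlying service call."""
    return github_api_open_issues(repo)


# The server's registry: tool name -> implementation.
TOOLS: Dict[str, Callable[..., List[str]]] = {
    "fetch_github_issues": fetch_github_issues,
}


def execute(request: dict) -> List[str]:
    """Find the requested tool and run it with the request's arguments."""
    params = request["params"]
    handler = TOOLS.get(params["name"])
    if handler is None:
        raise KeyError(f"unknown tool: {params['name']}")
    return handler(**params.get("arguments", {}))


req = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "fetch_github_issues", "arguments": {"repo": "X"}},
}
print(execute(req))
```

A registry like this is what lets one server expose many tools through the same `tools/call` entry point.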

Response: The server returns the result to the client.
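The result travels back as a JSON-RPC response whose `id` matches the original request. Based on our reading of the MCP specification, a `tools/call` result holds a `content` list of typed items (plain text uses `{"type": "text", "text": ...}`) plus an `isError` flag for tool-level failures. The helper name `make_tool_result` is hypothetical, and joining the lines into a single text item is just one reasonable choice.

```python
from typing import List


def make_tool_result(request_id: int, lines: List[str], is_error: bool = False) -> dict:
    """Wrap a tool's output in a JSON-RPC response for tools/call."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,  # must echo the id of the request being answered
        "result": {
            "content": [{"type": "text", "text": "\n".join(lines)}],
            "isError": is_error,
        },
    }


response = make_tool_result(1, ["#12 Login fails on Safari", "#15 Typo in README"])
print(response["result"]["content"][0]["text"])
```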

Completion: The client passes the result back to the host, which adds it to the LLM’s context so that the LLM can produce a final answer for the user based on the new, external information.
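A minimal sketch of this last step: the host extracts the text from the tool result and appends it to the conversation before asking the LLM for its final answer. The `{"role": "tool", ...}` message shape is an assumption modeled on common chat APIs, and the exact fields your LLM API expects will differ; the helper names are hypothetical.

```python
def tool_result_text(response: dict) -> str:
    """Concatenate the text items of a tools/call result."""
    items = response["result"]["content"]
    return "\n".join(i["text"] for i in items if i.get("type") == "text")


def add_to_context(messages: list, tool_name: str, response: dict) -> list:
    """Return a new message list with the tool output appended for the LLM."""
    # Assumed message shape; real LLM APIs define their own tool-message format.
    return messages + [
        {"role": "tool", "name": tool_name, "content": tool_result_text(response)}
    ]


messages = [{"role": "user", "content": "What are the open issues in the 'X' repo?"}]
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "#12 Login fails on Safari\n#15 Typo in README"}],
        "isError": False,
    },
}

messages = add_to_context(messages, "fetch_github_issues", response)
# The host would now send `messages` back to the LLM to produce the final answer.
print(messages[-1]["content"])
```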

How MCP differs from traditional AI integrations

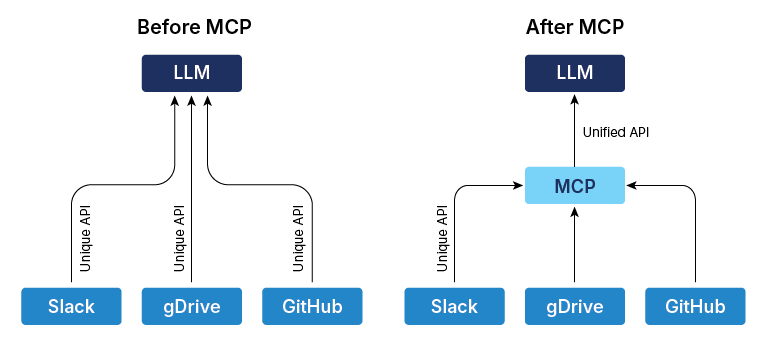

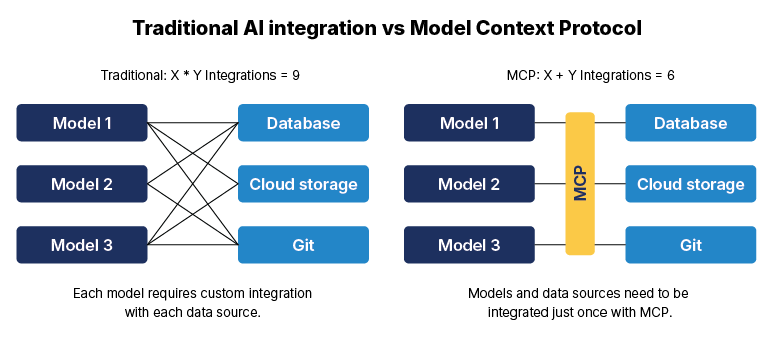

Before MCP, integrating AI systems with third-party tools was laborious, because every combination of AI application and data source needed its own custom connection. With ‘X’ AI applications and ‘Y’ tools, developers had to build X*Y integrations. This resulted in redundant effort and inconsistent implementations.

MCP changes this approach completely. Instead of a custom integration for every AI-tool pair, MCP offers one standardized protocol, which reduces the problem to ‘X+Y’. Application developers build X MCP clients (one for each AI application), and tool developers build Y MCP servers (one for each system).
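The difference between the two approaches is easy to quantify. The sketch below simply evaluates X*Y against X+Y for a few made-up portfolio sizes; the numbers are illustrative, not measurements from any real organization.

```python
def point_to_point(apps: int, tools: int) -> int:
    """Custom connectors needed when every app-tool pair is wired by hand."""
    return apps * tools


def with_mcp(apps: int, tools: int) -> int:
    """One MCP client implementation per app plus one MCP server per tool."""
    return apps + tools


for x, y in [(3, 5), (10, 20), (50, 100)]:
    print(f"X={x}, Y={y}: {point_to_point(x, y)} custom vs {with_mcp(x, y)} with MCP")
```

For 10 applications and 20 tools, that is 200 custom connectors versus 30 MCP components, and the gap widens as either number grows.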

Conventional integration techniques, such as custom REST API connectors, generally:

- Require preconfigured connection points

- Cannot adapt at runtime when the available tools or endpoints change

- Struggle with complex system interactions

- Require a lot of maintenance

With MCP, AI applications can discover available tools at runtime, learn how to call them from the descriptions servers provide, and maintain context across interactions. The protocol also supports two-way communication between clients and servers, allowing models to both fetch data and invoke actions.

Real-world examples: How businesses are using MCP

Businesses adopting MCP are gaining a competitive edge by making their AI systems more adaptive and context-aware. By connecting models to live data and tools, MCP helps organizations reduce errors, streamline decisions, and deliver more capable user experiences across their operations.

- Block and Apollo

Block and Apollo are using MCP to connect their AI assistants to internal corporate tools such as databases, ticketing systems, and customer profiles. This lets their AI do more than talk: it can take actions, fetch live data, and help teams make decisions faster, all within secure company environments.

- Replit and Codeium

Platforms such as Replit and Codeium are using MCP to enhance their coding environments. Wiring the AI directly into live code tools means that users can request assistance, execute code, debug, or receive file-based suggestions without ever having to leave their dev environment.

- Copilot Studio (Microsoft)

Microsoft’s Copilot Studio has integrated MCP to simplify connecting AI agents to business applications such as Dynamics 365, Office, and Teams. Instead of coding each integration manually, teams get a plug-and-play approach: AI assistants can connect to data sources or trigger workflows without engineers writing a custom connector every time.

Why is now the right time to embrace MCP?

The way AI interacts with data, users, and real-world problems is changing, and the Model Context Protocol (MCP) is at the center of that shift. It is no longer enough for AI systems to provide one-off responses or isolated insights. To get the full value of intelligent automation, businesses and innovators need AI systems that can carry context across tools, data sources, and use cases. That is what MCP enables.

Whether you’re building smarter applications or improving decision-making, now is the time to explore what MCP can do for you.