As enterprises move deeper into AI transformation, two disciplines have started making regular appearances in boardrooms and strategy decks: AIOps and MLOps. Both sound similar, both use machine learning, and both are designed to simplify complexity.

But while they share DNA, they were built to solve different problems. AIOps focuses on automating and stabilizing infrastructure, while MLOps is about managing and optimizing the lifecycle of machine learning workflows.

Getting the most out of either often depends on having the right expertise in place, especially when it comes to scaling MLOps frameworks and consulting-led initiatives across real business environments.

In this blog post, we’ll explore how AIOps and MLOps differ, where they intersect, and why choosing between them isn’t always necessary: in practice, the two often work better together.

What is AIOps?

AIOps (artificial intelligence for IT operations) refers to the use of artificial intelligence techniques to automate and enhance IT operations. By applying machine learning to data generated from infrastructure and software systems, AIOps helps teams respond more quickly and efficiently to issues.

AIOps makes it easier for IT teams to keep up with today’s complex systems. It brings together data from various sources such as servers, apps, and monitoring tools, and applies machine learning to help catch problems early.

Instead of flipping between dashboards or reacting to every notification, AIOps helps teams zero in on the issues that actually need attention. It highlights the real problems, often before they escalate, and in some cases can even kick off automated steps to fix them. This results in fewer disruptions, better uptime, and more breathing room to focus on long-term improvements rather than just putting out fires.
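
To make the idea of catching problems early more concrete, here is a minimal sketch of one common approach: flagging metric samples that deviate sharply from a rolling baseline. The function name `detect_anomalies`, the window size, the threshold, and the sample CPU values are all illustrative assumptions, not part of any specific AIOps product.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of samples that sit more than `threshold` standard
    deviations away from the mean of the preceding `window` samples."""
    recent = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Skip perfectly flat windows to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        recent.append(value)
    return anomalies


# Hypothetical CPU utilization samples (percent), one per minute.
cpu = [41, 43, 42, 44, 42, 43, 95, 42, 44, 43]
print(detect_anomalies(cpu))  # prints [6]
```

Real platforms typically combine many signals and more robust statistics; this sketch only shows the basic shape of a baseline-and-deviation check.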

What is MLOps?

Short for machine learning operations, MLOps refers to a set of practices and tools that help organizations deploy, monitor, and manage machine learning models at scale. MLOps bridges the gap between development and operations, ensuring that models continue to perform well after deployment.

MLOps is about making sure ML models don’t just work in a lab but actually deliver value in the real world. It helps teams manage the full lifecycle from training and testing to deployment and monitoring, so models stay reliable long after they go live. This makes it an essential part of any successful machine learning strategy.

By bringing together practices from DevOps, data engineering, and AI, MLOps makes sure that good ideas don’t get stuck in digital idea buckets. Instead, they become working solutions that stay accurate, secure, and useful over time.

When comparing AIOps vs MLOps, it’s important to note that MLOps focuses more on model lifecycle management, while AIOps is built around IT automation and system health.

How AIOps and MLOps manage complexity

| Aspect | AIOps | MLOps |
|---|---|---|
| Primary focus | Infrastructure and operations management | Machine learning model lifecycle management |
| Data handled | Logs, metrics, events, and alerts from IT systems | Structured and unstructured datasets used for model training and prediction |
| Core function | Filters noise, correlates events, and applies ML to detect and respond to anomalies | Automates the flow from data prep → training → deployment → monitoring and retraining |
| Automation targets | Incident detection, root cause analysis, and remediation workflows | Model deployment, performance tracking, and automatic retraining |
| End goal | Stable, self-healing IT environments | Reliable, scalable, and continuously improving machine learning systems |

Together, AIOps and MLOps create a powerful operational framework. AIOps helps keep systems stable and responsive, while MLOps ensures that AI solutions remain functional, fair, and efficient over time.

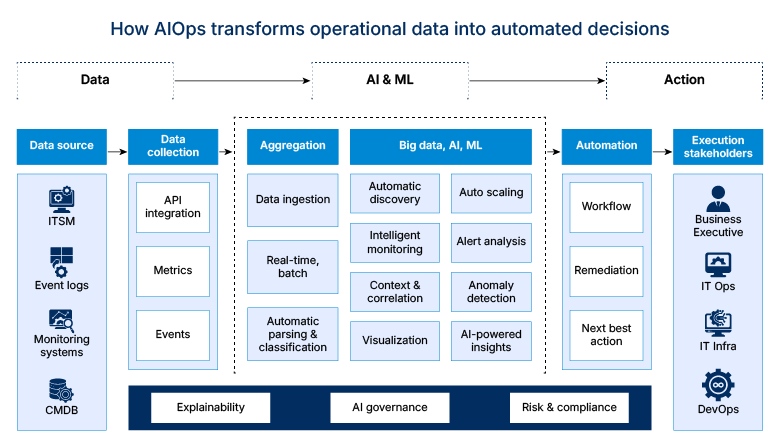

Understanding how AIOps drives action:

This visual illustrates how AIOps connects data sources like ITSM, monitoring tools, and event logs with AI models that drive intelligent automation.

From aggregation and anomaly detection to workflow automation and executive insights, it showcases how operational data turns into practical, decision-ready insights that drive real outcomes.

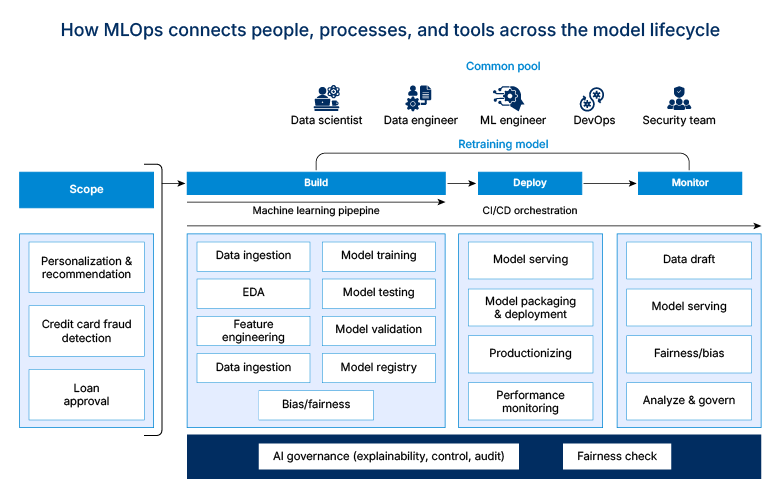

Visualizing the MLOps lifecycle:

This visual outlines the key stages of the MLOps process, highlighting how data scientists, ML engineers, and operations teams collaborate to build, deploy, and maintain models at scale.

From problem definition and data preparation to deployment, monitoring, and retraining, it shows how MLOps brings structure and accountability to machine learning operations.

How do AIOps and MLOps operate on different layers?

To understand how AIOps and MLOps operate on different layers, it’s useful to break down their roles in driving action, from recognizing an issue or opportunity to making a meaningful business decision.

| Functional layer | AIOps (Incident management) | MLOps (Model management) |
|---|---|---|
| Triggering event | System alert, performance drop, anomaly in logs or metrics | Business problem, data-driven hypothesis, or new use case for machine learning |
| Detection and diagnosis | Real-time analysis of logs and signals, root cause identification | Data exploration, model training and validation, feature engineering |
| Action initiation | Automated remediation or alert routing based on predefined workflows | Model deployment through CI/CD pipelines, API integration with business systems |
| Continuous feedback | Monitors resolution success and alert recurrence to adjust thresholds and rules | Monitors prediction performance, triggers retraining if accuracy drops or data drifts |

Each discipline’s focus complements the other: AIOps ensures reliable, responsive systems, while MLOps powers intelligent, learning-based decision-making aligned with business outcomes.
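
The "action initiation" layer on the AIOps side can be pictured as a lookup from alert type to a predefined workflow, with a human fallback for anything unrecognized. The sketch below is a simplified illustration; the alert fields, the `PLAYBOOK` mapping, and the action functions are hypothetical and simply return strings instead of touching real systems.

```python
def restart_service(alert):
    # Placeholder for a real remediation step.
    return f"restarted {alert['service']}"


def scale_out(alert):
    # Placeholder for adding capacity to the affected service.
    return f"scaled out {alert['service']}"


def page_on_call(alert):
    # Fallback: route to a human when no automated workflow applies.
    return f"paged on-call for {alert['service']}"


# Hypothetical mapping of alert types to predefined workflows.
PLAYBOOK = {
    "process_down": restart_service,
    "high_latency": scale_out,
}


def handle(alert):
    """Run the predefined workflow for this alert type, or escalate."""
    action = PLAYBOOK.get(alert["type"], page_on_call)
    return action(alert)


print(handle({"type": "process_down", "service": "checkout"}))
print(handle({"type": "disk_corruption", "service": "billing"}))
```

Keeping the fallback as "page a human" is a deliberately conservative design choice: automation handles the well-understood cases, and everything else is escalated.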

6 benefits of AIOps and MLOps

1. Smarter operations and faster incident response:

When something starts to go wrong in a system, timing matters. AIOps helps IT teams catch unusual activity early so they can step in before it causes a bigger problem. This kind of early action helps reduce downtime and makes systems easier to manage in the long run.

2. Consistent model performance and reliability:

MLOps helps machine learning models continue to deliver accurate results through regular monitoring, automated retraining, and performance feedback loops, keeping predictions dependable even as data evolves.
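
One way such a feedback loop might decide that data has evolved is to compare a feature's live distribution with its training baseline. The sketch below uses the Population Stability Index (PSI), a technique chosen here for illustration rather than one prescribed by this article. The function names, the bin count, and the 0.2 limit (a commonly cited rule of thumb) are assumptions.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if the baseline has a single distinct value.
    width = (hi - lo) / bins or 1.0

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp values outside the baseline range into the edge bins.
            idx = int((x - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(baseline, live, limit=0.2):
    """Flag the model for retraining when drift exceeds the chosen limit."""
    return psi(baseline, live) > limit


# Hypothetical feature values seen at training time vs. in production.
baseline = list(range(100))
shifted = [x + 50 for x in range(100)]
print(needs_retraining(baseline, baseline))  # prints False
print(needs_retraining(baseline, shifted))   # prints True
```

In practice a drift signal like this would usually be one input among several, alongside accuracy measured on labeled feedback, before a retraining job is triggered.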

3. Operational efficiency and team focus:

By taking care of repetitive tasks, AIOps gives engineers and IT teams room to focus on deeper system improvements. It reduces the everyday noise so teams can use their time where it matters most.

4. Faster deployment and scale:

MLOps makes it easier to go from idea to implementation. With automated processes and well-defined workflows, teams can move models into production quickly and roll them out with greater confidence.

5. Stronger governance and compliance:

In environments where oversight matters, knowing how systems behave and who made changes becomes essential. AIOps and MLOps help teams maintain better records, making it easier to stay transparent, support audits, and follow industry rules with confidence.

6. Improved collaboration and system integration:

When automation and feedback loops are built into the process, AIOps and MLOps help development, operations, and data teams stay more connected. That shared view of performance and progress leads to stronger decisions and fewer silos.

Use cases of AIOps and MLOps

Use cases of AIOps

- Monitoring distributed systems: Aggregates logs and metrics from across environments to detect irregular behavior in real time.

- Resolving outages: Helps identify root causes faster through automated correlation and alerting.

- Correlating event alerts: Reduces noise by grouping related alerts into single actionable items.

- Automating ticket generation: Streamlines incident resolution by creating and assigning tickets through integrated workflows.
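
The alert correlation use case above can be sketched as grouping alerts that hit the same service within a short time window into a single incident. This is a deliberately simple illustration; the `Alert` and `Incident` classes, the field names, and the 120-second window are assumptions, and production tools generally use richer signals such as topology and text similarity.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    timestamp: float  # seconds since some reference point
    service: str
    message: str


@dataclass
class Incident:
    service: str
    alerts: list = field(default_factory=list)


def correlate(alerts, window_seconds=120):
    """Group alerts for the same service that arrive within
    `window_seconds` of the previous alert in the group."""
    incidents = []
    latest = {}  # service -> most recently opened Incident
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = latest.get(alert.service)
        if current and alert.timestamp - current.alerts[-1].timestamp <= window_seconds:
            current.alerts.append(alert)
        else:
            # Too far apart (or first alert for this service): open a new incident.
            current = Incident(service=alert.service, alerts=[alert])
            latest[alert.service] = current
            incidents.append(current)
    return incidents


# Hypothetical alert stream.
stream = [
    Alert(0, "db", "connection pool exhausted"),
    Alert(30, "db", "query timeout"),
    Alert(45, "api", "5xx rate above threshold"),
    Alert(90, "db", "replica lag"),
    Alert(600, "db", "connection pool exhausted"),
]
for incident in correlate(stream):
    print(incident.service, len(incident.alerts))
```

Here five raw alerts collapse into three incidents, each of which could then feed an automated ticket.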

Use cases of MLOps

- Real-time fraud detection: Monitors patterns in financial transactions as they happen, helping teams catch unusual activity before damage occurs.

- Personalized recommendation engines: Learns from what users browse and buy, then surfaces content or products they’re most likely to find relevant.

- Dynamic pricing models: Uses current market signals, inventory levels, and demand shifts to update pricing in real time.

- Predictive maintenance: Tracks equipment performance over time to catch signs of wear and failure before they disrupt operations.

AIOps and MLOps tools

Below is a side-by-side look at some of the most popular tools used in AIOps and MLOps today:

| Category | AIOps tools | MLOps tools |
|---|---|---|
| Monitoring and automation | Splunk: Offers advanced observability and predictive analytics for IT operations. | MLflow: Supports the end-to-end ML lifecycle from experimentation to deployment. |
| Event correlation | Moogsoft: Specializes in noise reduction and automated incident response. | Kubeflow: Helps manage complex ML pipelines on Kubernetes infrastructure. |
| Performance and integration | Dynatrace: Provides full-stack monitoring with AI-driven root cause detection. | Amazon SageMaker: A full suite for building, training, and deploying ML models. |
| Team enablement | ServiceNow + PagerDuty: Improve collaboration and response time for Ops teams. | Azure ML + Google Vertex AI (formerly AI Platform): Enable scalable, compliant ML workflows across teams. |

Whether you’re exploring MLOps vs AIOps tools or planning to integrate both, it helps to start with clear goals tied to your infrastructure and AI strategy.

Understanding how AIOps and MLOps strategies apply in real-world scenarios starts with the right groundwork.

Best practices for real-world adoption

Bringing AIOps and MLOps into your organization takes more than selecting the right tools. It calls for a clear strategy, alignment of priorities, and close coordination between technical leads and business teams. The two checklists below can help guide adoption on both fronts:

AIOps checklist:

- Ensure access to reliable, high-volume operational data from all key sources

- Start with high-impact use cases to demonstrate quick wins

- Incorporate automation gradually and monitor its effectiveness

- Align stakeholders across IT, network, and security departments to streamline decision-making

- Establish feedback loops to continuously refine anomaly detection and response mechanisms

MLOps checklist:

- Set clear KPIs and business objectives for each ML project, and tie them to model outcomes

- Standardize model development and deployment workflows

- Monitor models post-deployment for accuracy, drift, and ethical impact, and plan regular retraining cycles

- Maintain rigorous governance, model versioning, and traceability for audits and reusability

- Use automated CI/CD pipelines to reduce manual overhead, improve release cycles, and deploy updates consistently
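
The versioning and traceability items in the MLOps checklist can be illustrated with a tiny in-memory registry that records which data and parameters produced each model version and only promotes a candidate that beats the current production model. This is a sketch under stated assumptions: `ModelRegistry`, its methods, and the accuracy-only promotion rule are hypothetical, and dedicated tools (MLflow's model registry, for example) cover this ground far more completely.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    data_hash: str   # fingerprint of the training data, for traceability
    params: dict
    accuracy: float


class ModelRegistry:
    def __init__(self):
        self._history = {}     # model name -> list of ModelVersion
        self._production = {}  # model name -> ModelVersion currently live

    def register(self, name, training_rows, params, accuracy):
        """Record a new version along with a hash of its training data."""
        payload = json.dumps(training_rows, sort_keys=True).encode()
        history = self._history.setdefault(name, [])
        record = ModelVersion(
            name=name,
            version=len(history) + 1,
            data_hash=hashlib.sha256(payload).hexdigest(),
            params=params,
            accuracy=accuracy,
        )
        history.append(record)
        return record

    def promote_if_better(self, candidate):
        """Promote only if the candidate beats the current production model."""
        current = self._production.get(candidate.name)
        if current is None or candidate.accuracy > current.accuracy:
            self._production[candidate.name] = candidate
            return True
        return False

    def production(self, name):
        return self._production.get(name)

    def audit_trail(self, name):
        """Return every registered version as plain dicts for audits."""
        return [asdict(v) for v in self._history.get(name, [])]


# Hypothetical usage with made-up data and metrics.
registry = ModelRegistry()
v1 = registry.register("churn", [[1, 0], [0, 1]], {"depth": 3}, accuracy=0.81)
v2 = registry.register("churn", [[1, 0], [0, 1], [1, 1]], {"depth": 4}, accuracy=0.79)
registry.promote_if_better(v1)
registry.promote_if_better(v2)  # rejected: lower accuracy than v1
print(registry.production("churn").version)  # prints 1
```

A gate like `promote_if_better` is the kind of check a CI/CD pipeline could run before a release, so weaker models never replace stronger ones unnoticed.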

When to use which – or both

Choosing between AIOps and MLOps depends on your organization’s core focus and operational maturity. Use this quick MLOps vs AIOps overview to decide what fits your needs best:

Use AIOps when:

- Your infrastructure is experiencing frequent outages

- You need quicker incident detection and automated remediation

- Stability and uptime are a top operational priority

Use MLOps when:

- You are deploying multiple ML models that need version control and monitoring

- Accuracy, retraining, and model governance are key to success

- AI/ML solutions are becoming central to your business strategy

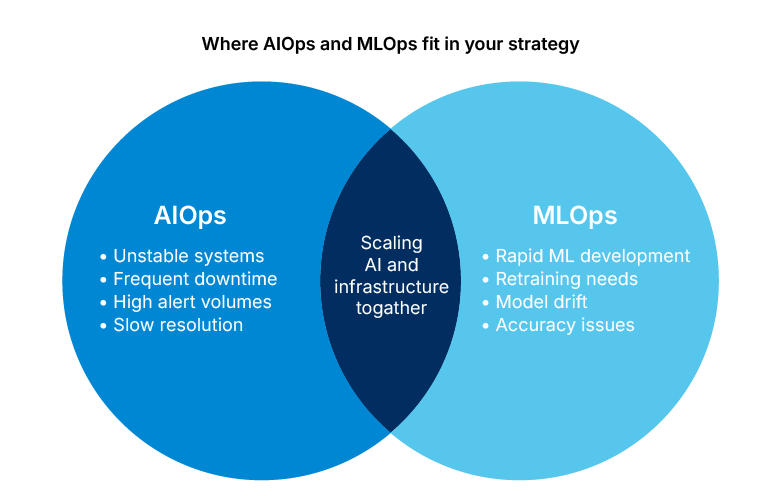

Use both when:

- You are scaling both infrastructure and AI initiatives simultaneously.

- System reliability and ML performance are both mission critical.

The diagram below offers a quick visual guide to help identify which challenges each discipline best addresses and where they might overlap in practice:

As the diagram shows, AIOps shines in environments where IT reliability and response time are critical, while MLOps becomes essential when deploying and managing machine learning models at scale. When both infrastructure and AI capabilities are scaling together, combining AIOps and MLOps is often the smartest path forward.

Align AIOps and MLOps to drive business value

Understanding what AIOps and MLOps bring to the table is only the beginning. The next step is to apply these processes to the real challenges your organization faces. If machine learning is a focus for your business, then putting a solid MLOps framework in place will be key to seeing long-term value from those efforts.

At Softweb Solutions, we specialize in helping businesses navigate the complexities of deploying and managing machine learning operations effectively. Whether you’re exploring operational frameworks or looking for expert-led ML consulting, our team is here to help you align strategy, tools, and execution, ensuring your ML investments deliver ongoing value. Take the next step toward reliable and scalable AI solutions. Connect with us today and transform your AI goals into tangible success.