The data revolution has transformed research and development. Machine learning (ML) has become an invaluable tool, sifting through mountains of information to unearth valuable insights. However, traditional ML can hit a wall: limited data quality and the challenge of integrating human expertise often slow progress. The key to resolving this lies in integrating domain knowledge into models with generative ability.

In this article, we’ll explain what generative AI models are, how they are trained, and which generative AI models are most common today.

Before we do that, let’s take a look at the whopping figures revolving around generative AI.

Gartner predictions:

- By 2025, generative AI will produce 10 percent of all data (up from less than 1 percent today) and 20 percent of all test data for consumer-facing use cases.

- By 2025, generative AI will be used by 50 percent of drug discovery and development initiatives.

- By 2027, 30 percent of manufacturers will use generative AI to enhance their product development effectiveness.

How do generative AI models work?

Generative AI models are large, data-driven models that power AI systems capable of creating content. But how do generative models work?

Generative AI models are trained to become highly skilled pattern recognizers. Unlike traditional AI models that require extensive labeled data, generative models leverage unsupervised or semi-supervised learning techniques. This allows them to analyze vast amounts of uncategorized data from diverse sources like the internet, books, and image collections.

Through this training, the models identify recurring patterns and relationships within the data. This lets them generate entirely new content that mimics these patterns, often convincingly enough to pass as human-made. The secret behind this ability lies in the model’s architecture: these models are built from interconnected layers that loosely resemble the connections between neurons. Combined with massive datasets, powerful training algorithms, and periodic retraining or fine-tuning, this neural network design allows the models to improve from one version to the next.
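To make "learning patterns and then generating new content" concrete, here is a deliberately tiny sketch. It is not a neural network: it is a character-level bigram model, chosen only because it fits in a few lines. The corpus, function names, and seed are all made up for illustration.

```python
import random
from collections import defaultdict

def train_bigram_model(text):
    """Count, for each character, which characters follow it in the training text."""
    counts = defaultdict(lambda: defaultdict(int))
    for current_char, next_char in zip(text, text[1:]):
        counts[current_char][next_char] += 1
    return counts

def generate_text(counts, start_char, length, rng):
    """Sample new text one character at a time, in proportion to the learned counts."""
    output = [start_char]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:  # dead end: this character was never followed by anything
            break
        chars = list(followers.keys())
        weights = list(followers.values())
        output.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(output)

# Hypothetical training data; real models train on vastly larger corpora.
corpus = "the cat sat on the mat. the dog sat on the log. "
model = train_bigram_model(corpus)
sample = generate_text(model, "t", 40, random.Random(0))
print(sample)
```

The output is new text that was never in the corpus as a whole, yet every pair of neighboring characters in it was seen during training. Real generative models follow the same learn-then-sample idea, with far richer patterns and far more data.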

Among the different types of generative AI models, there are text-to-image generators, text-to-text generators, image-to-image generators, and image-to-text generators. The following is the output of a text-to-image generator after it was given a text description as its prompt.

For more information about how generative AI is used in business, check out the guide: Generative AI for businesses

How are generative AI models trained?

Training differs from one generative AI model to another, depending on the model’s architecture.

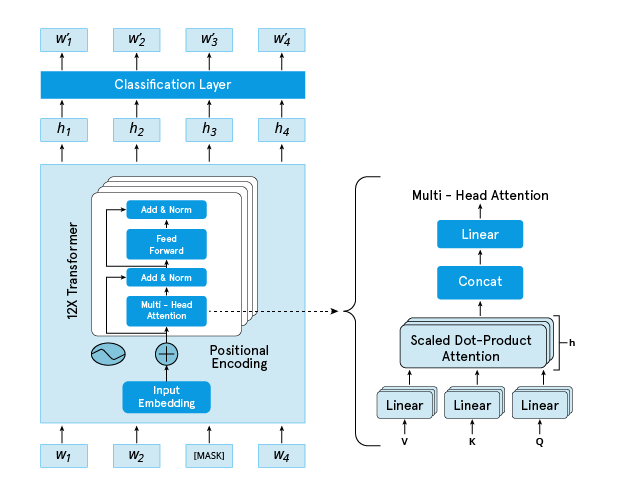

Transformer-based models: These models leverage massive neural networks to identify and remember relationships and patterns within data sequences. Training involves exposing the model to vast datasets from various sources like text, code, images, and videos. The model learns to contextualize this data and to prioritize the most relevant elements of the surrounding context. Essentially, it predicts the most likely next element in a sequence. Encoders and/or decoders then turn the learned patterns into outputs based on user prompts.
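The "prioritizing" described above is usually implemented with an attention mechanism. The sketch below shows scaled dot-product attention for a single query, written in plain Python so that every step is visible. The vectors are hypothetical toy values, and a real transformer adds learned projections, multiple heads, and many stacked layers on top of this.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to each key, scaled by the square root of the dimension.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Weighted average of the value vectors: heavily weighted positions dominate.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# Hypothetical 2-dimensional vectors for three earlier tokens in a sequence.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [2.0, 0.0]  # points in the same direction as the first key

weights, context = attention(query, keys, values)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

The second key is orthogonal to the query, so its weight is the smallest and its value contributes least to the result. That is the sense in which the model "prioritizes" parts of its context.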

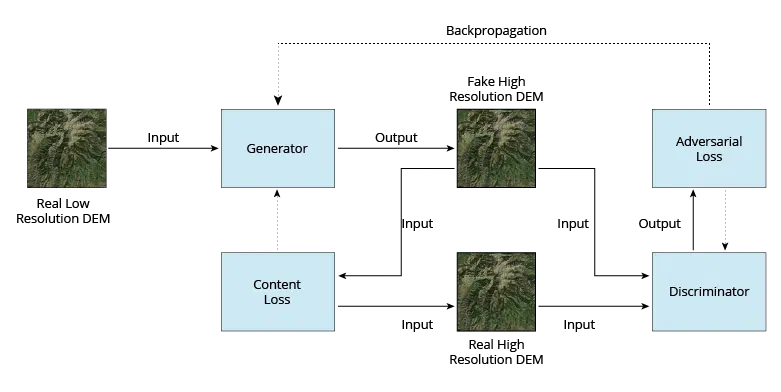

Diffusion models: Training a diffusion model involves two processes: forward diffusion and reverse diffusion. In forward diffusion, noise is progressively added to the training data until little of the original signal remains. In reverse diffusion, the model is trained to remove that noise step by step, which forces it to learn the underlying data patterns. Once trained, the model can start from pure noise and denoise its way to new outputs that closely resemble the original data.
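The two directions can be sketched numerically. The code below assumes one common formulation (a DDPM-style linear noise schedule, which is our assumption and not something the article specifies) and uses a three-number list as a stand-in for an image. There is no neural network here: where a trained model would predict the noise, the sketch simply reuses the true noise to show that the arithmetic inverts cleanly.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward diffusion: blend the clean sample with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    x_t = [signal_scale * x + noise_scale * n for x, n in zip(x0, noise)]
    return x_t, noise

def remove_noise(x_t, predicted_noise, alpha_bar):
    """Reverse direction: estimate the clean sample from a noise prediction."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [(x - noise_scale * n) / signal_scale for x, n in zip(x_t, predicted_noise)]

rng = random.Random(0)
alpha_bars = make_alpha_bars(num_steps=1000)
x0 = [0.5, -1.0, 2.0]  # a toy "image" with three pixels

x_t, true_noise = add_noise(x0, alpha_bars[500], rng)
# A trained network would predict the noise from x_t; here we pass the true noise in.
recovered = remove_noise(x_t, true_noise, alpha_bars[500])
print(alpha_bars[0], alpha_bars[-1])
print(recovered)
```

The schedule values shrink toward zero, meaning late steps are almost pure noise. Training a diffusion model amounts to teaching a network to supply `predicted_noise` when the true noise is not available.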

Exploring generative AI model types

Numerous GenAI models exist, with new variations emerging from ongoing research. It’s important to note that some models can fall under multiple categories. For example, the latest updates to ChatGPT make it a transformer-based model, a large language model, and a multimodal model.

Key model types:

- Generative adversarial networks (GANs): Excel at replicating images and generating synthetic data.

- Transformer-based models: Well-suited for text generation and content completion tasks. Examples include GPT and BERT models.

- Diffusion models: Ideal for image generation and video/image synthesis.

- Variational autoencoders (VAEs): Effective for creating realistic images, audio, and videos, often used for synthetic data generation.

- Unimodal vs. Multimodal models: Unimodal models accept only one data format as input, while multimodal models can handle various input types. For instance, GPT-4 can process both text and images.

- Large language models (LLMs): Currently the most prominent type, LLMs generate and complete written content at scale.

- Neural radiance fields (NeRFs): A developing technology that generates 3D imagery from 2D image inputs.

Generative vs. Other AI models

While GenAI excels at creating new content, other AI models serve distinct purposes:

- Discriminative AI: This type of AI focuses on analyzing existing data to categorize it into predefined classes. It’s like a sorting tool, trained through supervised learning to recognize patterns and assign labels accordingly. For instance, a discriminative AI model might classify proteins based on their structure.

- Predictive AI: Here, the AI analyzes existing data to make informed forecasts about future events or trends. It’s like a fortune teller, leveraging past information to predict potential outcomes. An example would be a predictive AI model that forecasts the next maintenance due date before a machine breaks down.
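The contrast with generative models can be shown on a toy dataset. In the sketch below, the readings, labels, and function names are all hypothetical. The discriminative part only learns where to draw the line between two classes, while the generative part models how one class is distributed (here with a simple Gaussian fit, an assumption made for brevity) and can therefore produce new samples.

```python
import random
import statistics

# Hypothetical labeled measurements (e.g. one sensor reading per machine).
healthy = [1.0, 1.2, 0.9, 1.1, 1.3, 0.8]
faulty = [3.0, 2.7, 3.3, 2.9, 3.1, 3.4]

# Discriminative view: learn only the boundary between the classes.
threshold = (statistics.mean(healthy) + statistics.mean(faulty)) / 2

def classify(reading):
    """Assign a label to an existing reading."""
    return "faulty" if reading > threshold else "healthy"

# Generative view: model how a class's data is distributed, then sample from it.
def fit_gaussian(samples):
    """Summarize a class by its mean and sample standard deviation."""
    return statistics.mean(samples), statistics.stdev(samples)

def sample_synthetic(params, count, rng):
    """Create new, synthetic readings that resemble the training data."""
    mean, std = params
    return [rng.gauss(mean, std) for _ in range(count)]

rng = random.Random(42)
faulty_model = fit_gaussian(faulty)
synthetic_faulty = sample_synthetic(faulty_model, 5, rng)

print(classify(1.05), classify(2.95))
print([round(x, 2) for x in synthetic_faulty])
```

The classifier can only label readings it is handed, whereas the fitted distribution can produce readings that never existed. The same idea, at much larger scale, is what makes synthetic data generation possible.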

Benefits of generative AI models

Generative AI models offer numerous advantages with significant implications for the future of AI, particularly in data augmentation and natural language processing:

- Data augmentation: Generative models can create synthetic data to augment existing datasets. This is particularly beneficial when obtaining sufficient real-world labeled data is difficult, ultimately aiding the training of other machine learning models.

- Natural language understanding and generation: GenAI models can power AI chatbots and virtual assistants capable of comprehending and generating human-like responses in natural language. They can also produce human-quality text for content creation, including articles, stories, and more.

- Creative applications: Generative AI models can be harnessed for creating art, poetry, music, and other artistic works. For instance, OpenAI’s Jukebox, a generative model, can compose music in various styles. Generative models can also support content synthesis by producing diverse, creative material that feeds brainstorming and ideation.

- Versatility: Generative AI models can be fine-tuned for various tasks like translation, summarization, and question answering. Additionally, with proper training and fine-tuning, they can be adapted to different domains and industries. For example, the output tone can be adjusted to be serious and formal, or casual and recreational, depending on how the model is configured and prompted, which gives fairly fine-grained control over the output’s style and mood.

Various examples of generative AI models

Several generative AI models or foundation models are making waves in various fields. Here are some notable examples:

Large language models (LLMs):

- GPT-X series (OpenAI): A series of increasingly sophisticated models capable of text generation, translation, and code completion. The latest version, GPT-4, underpins tools like ChatGPT.

- Codex (OpenAI): Specializes in generating and completing code based on natural language instructions. It forms the foundation for functionalities like GitHub Copilot.

- LaMDA (Google): A transformer-based model designed for natural and engaging conversation.

- PaLM (Google): A powerful LLM capable of generating text and code in multiple languages. PaLM 2 drives functionalities within Google Bard.

- AlphaCode (DeepMind): A code-generation system that turns natural language problem descriptions into working code, evaluated on competitive programming problems.

- BLOOM (BigScience, coordinated by Hugging Face): An open, multilingual autoregressive LLM that continues text or code from a prompt in many languages.

- LLaMA (Meta): A more accessible LLM option, aiming to make GenAI technology more widely available.

Image generation:

- Stable Diffusion (Stability AI): A popular diffusion model adept at generating images from textual descriptions.

- Midjourney: Similar to Stable Diffusion, Midjourney excels at creating visuals based on user-provided text prompts.

This list showcases just a few examples of GenAI models impacting various industries. As the field progresses, we can expect even more innovative applications to emerge.

What can generative AI models do?

Generative AI models support many use cases, for both personal tasks and business purposes, provided they are trained thoroughly and given apt prompts. The table below groups the tasks these models can handle.

| Visual | Language | Auditory |
| --- | --- | --- |
| Video generation | Code documentation | Music generation |
| Image generation | Generate synthetic data | Voice synthesis |
| Procedural generation | Code generation | Voice cloning |
| Synthetic media | Generate and complete text | |
| 3D models | Design protein and drug descriptions | |
| Optimize imagery for healthcare diagnostics | Supplement customer support experience | |
| Create storytelling and video game experiences | Answer questions and support research | |

Fine-tuning the future: The assessment of GenAI models

Businesses are eager to integrate AI, but building models from scratch can be a time-consuming and resource-intensive process. Foundation models offer a powerful solution. These pre-trained models act as a springboard, allowing businesses to jumpstart their AI/ML journey.

The key to maximizing their impact lies in selecting the optimal deployment option. Several factors influence this decision, including cost, development effort, data privacy concerns, intellectual property rights, and security considerations. Just like choosing the right software solution for a specific task, the ideal deployment option depends on your unique needs.

The beauty lies in the flexibility. Businesses can use a single deployment option or a combination, depending on their specific use case. By leveraging foundation models, businesses can significantly reduce the need for data labeling, streamline the entire AI development process, and ultimately broaden the range of business-critical tasks that AI can support.