The emergence of large language models (LLMs) has opened up new possibilities in natural language processing (NLP), driven by their generative capabilities. However, LLMs are still machine learning models, and an application built on one is only as useful as its predictions are accurate. That raises the question of how LLMs arrive at accurate predictions from the knowledge sources available to them.

Two prominent techniques, retrieval-augmented generation (RAG) and fine-tuning, have gained significant attention for addressing this challenge and are being applied across industries. In this blog post, we take a detailed look at both fine-tuning and RAG.

LLM fine-tuning

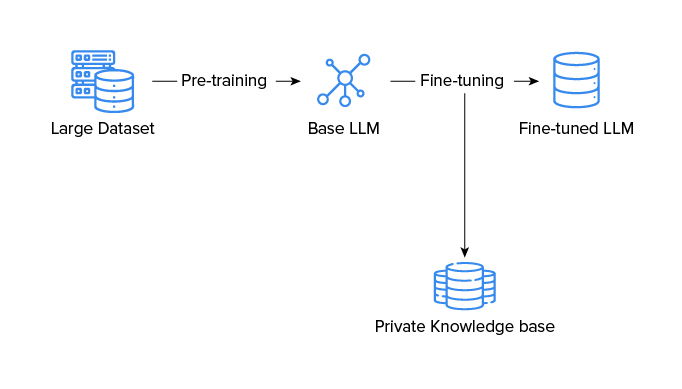

Fine-tuning is a technique that continues training a pre-trained LLM on a dataset tailored to a specific task. Because training starts from the pre-trained weights rather than from scratch, the LLM keeps its general language ability while acquiring task-specific patterns and relationships. While it is a potent approach, fine-tuning can be resource-intensive and costly, particularly for large models and large, complex tasks.
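To make the idea concrete, here is a deliberately tiny sketch of what "continuing training from pre-trained weights" means. It is not an LLM: it uses a hypothetical two-parameter linear model in plain Python, and the starting weights and task data are made-up illustrative values. The point is only the shape of the process: start from existing weights, run a few more gradient-descent steps on task-specific examples, and check that the error on the task drops.

```python
# Toy stand-in for fine-tuning: a two-parameter linear model, NOT an LLM.
# All numbers below are illustrative assumptions.

def predict(weights, x):
    """Apply the linear model y = w * x + b."""
    w, b = weights
    return w * x + b


def mean_squared_error(weights, data):
    """Average squared error of the model over (x, y) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, data, learning_rate=0.01, epochs=200):
    """Continue gradient-descent training from existing weights on new data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b)


# Weights assumed to come from earlier, general-purpose training.
pretrained_weights = (1.0, 0.0)

# Small task-specific dataset (made-up values, roughly y = 2x).
task_data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]

loss_before = mean_squared_error(pretrained_weights, task_data)
fine_tuned_weights = fine_tune(pretrained_weights, task_data)
loss_after = mean_squared_error(fine_tuned_weights, task_data)

print(f"task loss before fine-tuning: {loss_before:.3f}")
print(f"task loss after fine-tuning:  {loss_after:.3f}")
```

With a real LLM the loop is the same in spirit, but it updates billions of parameters instead of two, which is where the cost mentioned above comes from.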

Benefits of fine-tuning LLMs

Enhanced machine translation: Fine-tuning on parallel text helps LLMs translate more accurately and fluently from one language into another. For instance, a fine-tuned LLM can be deployed to translate news articles from English to Spanish with accurate and coherent output.

Text classification proficiency: Fine-tuning becomes invaluable in tasks involving text classification, such as discerning spam from legitimate messages or determining the sentiment of a text (positive or negative). A fine-tuned LLM can adeptly categorize content into different classes. For example, it can be leveraged to classify customer reviews of a product, assisting in the identification of positive or negative feedback.

Natural language inference mastery: Fine-tuning equips LLMs with the ability to judge whether a hypothesis follows from a given premise. In practice, the LLM decides whether the premise entails the hypothesis, contradicts it, or leaves it undetermined. For instance, given the premise “All dogs are mammals, and Spot is a dog,” a fine-tuned LLM can conclude that the hypothesis “Spot is a mammal” follows.

What is retrieval-augmented generation?

Retrieval-augmented generation (RAG) is a more recent technique that combines the generative strengths of LLMs with the precision of retrieval systems. In the first step, a retriever fetches documents relevant to the query from a knowledge base, such as a set of Wikipedia articles or a collection of product reviews. The retrieved documents are then passed to the LLM as context, and the LLM generates its response based on them. This approach has several advantages over fine-tuning:

- It is more efficient, as it does not require the LLM to be retrained on a large dataset.

- It is more transparent, as it allows users to see the exact text that was used to generate the response.

- It is more effective at reducing hallucinations, as the LLM's response is grounded in retrieved source text rather than in its training data alone.
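The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: retrieval here is a simple bag-of-words cosine similarity (real systems typically use learned embeddings and a vector index), the knowledge base and product names are made up, and the final call to an LLM is left out, so the sketch stops at the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents ranked by similarity to the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, retrieved):
    """Assemble the prompt that grounds the LLM in the retrieved text."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Made-up knowledge base of product reviews (illustrative only).
KNOWLEDGE_BASE = [
    "The battery of the X100 headphones lasts about 30 hours per charge.",
    "Customers report that the X100 ear cushions are comfortable for long flights.",
    "The Z5 speaker is waterproof and floats in a pool.",
]

query = "How long does the X100 battery last?"
retrieved = retrieve(query, KNOWLEDGE_BASE, top_k=2)
prompt = build_prompt(query, retrieved)
print(prompt)  # In a full system, this prompt would be sent to the LLM.
```

Because the retrieved passages appear verbatim in the prompt, they can also be shown to the user alongside the answer, which is the source of the transparency benefit listed above. Updating the system's knowledge means editing `KNOWLEDGE_BASE`, not retraining the model.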

Benefits of RAG

Enhanced question answering: RAG helps LLMs give more precise and better-informed answers in question answering tasks. For instance, a RAG-based question answering system can address inquiries about specific products or services by extracting and condensing the relevant information from a database of reviews.

Elevated creative text generation: RAG can be an asset in the realm of creative text generation. It enables LLMs to craft imaginative and captivating pieces of text in diverse formats such as poems, code, scripts and musical compositions.

Improved summarization: When it comes to summarizing lengthy documents, RAG can significantly enhance the proficiency of LLMs. A RAG-based summarization system is adept at producing more precise and informative summaries. For instance, if tasked with summarizing a news article, it can swiftly identify and condense the most pertinent sentences from the article, ensuring a concise yet informative summary.


RAG or fine-tuning: Which one to choose?

RAG and fine-tuning are two powerful techniques for improving the performance of LLMs. The best approach for a particular task will depend on several factors, such as the availability of labeled data, the type of task, and the desired performance. RAG is a good choice for generating text that is similar to existing text, such as customer support responses or product descriptions. It is also a good choice for generating text that needs to be up-to-date, such as news articles or financial reports.

Fine-tuning is a good choice for generating text that is tailored to a specific audience, such as marketing copy or technical documentation. It is also a good choice for generating text that needs to be creative or original, such as poems or novels.

Softweb Solutions is a leading provider of LLM solutions for businesses. We have a team of experienced LLM engineers and scientists who can help you choose the right approach for your business and implement a solution that meets your specific requirements. To know more about how LLM solutions can benefit your business, connect with our experts!