An F1 car navigating a sharp turn, a Le Mans racer enduring 24 hours of peak performance, or a high-traffic intersection flowing smoothly—each demands precision, endurance, and efficiency. Just as these systems must operate in sync to maintain speed, data pipelines must move without friction to ensure timely insights and seamless operations.

“The goal is to turn data into information, and information into insight.” – Carly Fiorina, former CEO of Hewlett-Packard

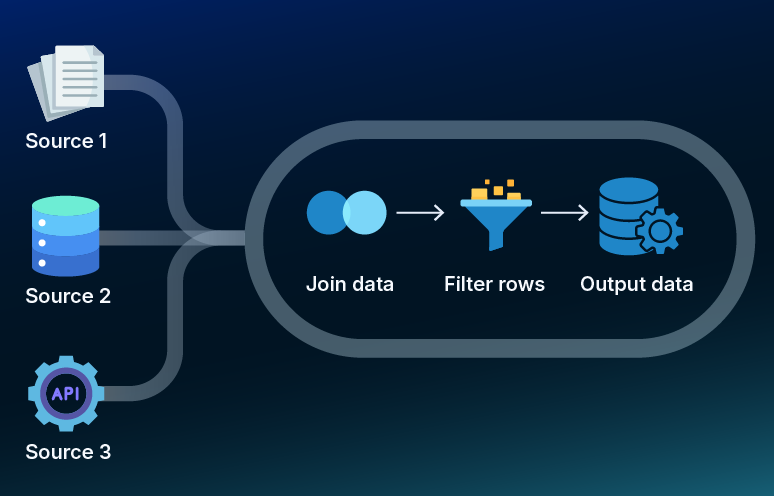

But insights are only valuable if they’re timely and actionable. Data, like race strategy, must be processed fast, accurately, and efficiently. Without a well-structured pipeline, even the most critical data can become a missed opportunity. That’s where expert data engineering services come into play—ensuring that data moves seamlessly, stays reliable under pressure, and delivers insights when they matter most. By focusing on key performance metrics, they help optimize pipelines for speed, accuracy, and resilience, ensuring data-driven success.

In this blog, we’ll explore the five key metrics that dictate the success of data pipelines—illustrated through the lens of high-speed performance.

Fastened your seatbelts? Great! Now let’s accelerate into—

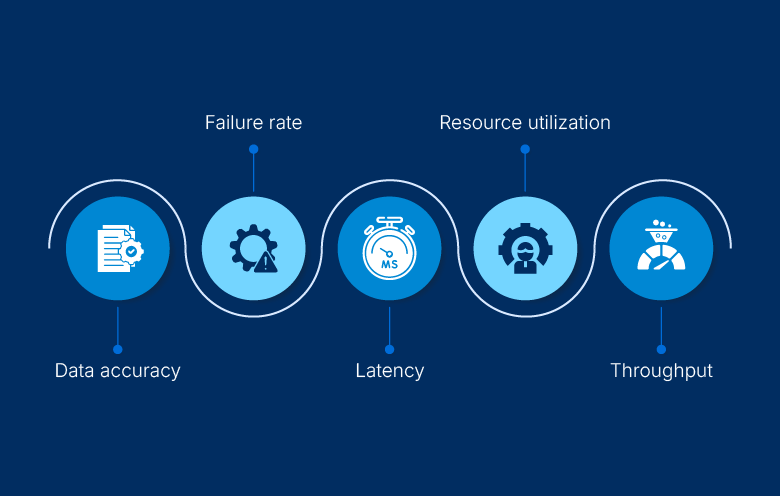

Understanding key metrics for data pipeline integrations and performance: The speed & precision approach

1. Data accuracy: Precision steering for success

In F1, precision is everything. The difference between a well-executed turn and a disastrous crash often comes down to millimeters. Similarly, data accuracy is the backbone of a well-functioning pipeline.

Challenges:

- Inaccurate or inconsistent data leads to poor insights

- Misinformed decisions

- Operational inefficiencies

Solutions:

- Implement robust validation mechanisms

- Apply deduplication strategies

- Continuously monitor data integrity

Why it matters:

High data accuracy ensures reliable analytics, optimized operations, and better strategic decisions.

2. Failure rate: Avoiding technical DNFs (did not finish)

Even the best race cars can suffer mechanical failures, forcing them out of competition. Similarly, unexpected data pipeline breakdowns can bring operations to a halt, causing disruptions and delays.

Challenges:

- Sudden pipeline failures disrupt workflows

- Schema mismatches create data inconsistencies

- Job and integration breakdowns impact business continuity

Solutions:

- Implement predictive monitoring and logging

- Set up automated alerts for early issue detection

- Use self-healing mechanisms to minimize downtime

Why it matters:

Reducing failure rates ensures operational resilience, maintains seamless data flow, and prevents costly disruptions.

3. Latency: Avoiding pit stop delays

In racing, a slow pit stop can cost the race. Similarly, high latency in data pipelines disrupts real-time analytics and decision-making.

Challenges:

- Slow data processing causes delays in real-time analytics

- Affects timely decision-making

- Hinders business intelligence

Solutions:

- Optimize data pipelines using caching

- Implement parallel processing

- Leverage event-driven architectures

Why it matters:

Low latency ensures real-time insights, faster response times, and an agile business strategy.

4. Resource utilization: Efficient fuel and tire management

Racing teams carefully manage fuel, tire wear, and aerodynamics to maximize performance and efficiency. Similarly, the implementation of data engineering ensures that data pipelines manage resources efficiently, avoiding waste and overspending.

Challenges:

- Inefficient CPU, memory, and storage usage leads to overspending

- Underutilized resources reduce performance and slow down processing

- Lack of optimization affects scalability and responsiveness

Solutions:

- Use auto-scaling for demand-based resource allocation

- Implement workload distribution to optimize processing power

- Reduce costs with efficient data processing techniques

Why it matters:

Optimized resource utilization reduces operational expenses, enhances performance, and supports sustainable scalability.

5. Throughput: Maximum speed on the straightaways

Race cars need top speed on straights to stay competitive. Similarly, high throughput in data pipelines ensures seamless large-scale processing.

- Large volumes of data create processing bottlenecks

- Slows down analytics and reporting

- Reduces system efficiency

Solutions:

- Optimize infrastructure with scalable cloud solutions

- Use load balancing

- Implement efficient partitioning

Why it matters:

High throughput enables efficient large-scale data processing, ensuring seamless data flow, optimized storage, retrieval, and faster business insights with data warehouse services.

Suggested: Explore the Top 10 Data Trends shaping the future of data-driven innovation

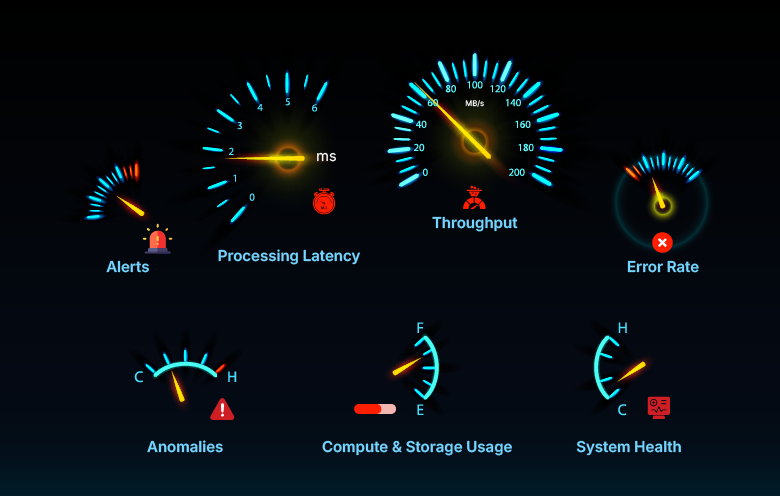

How to optimize your data pipeline performance

To ensure a podium finish, race teams continuously tweak their strategies based on real-time data. The same applies to data engineering. Here are some ways to keep your data pipelines in top gear:

- Data quality checks: Implement robust data validation techniques to ensure accuracy.

- Stream processing: Reduce latency by leveraging real-time data processing instead of batch processing.

- Scalability solutions: Use cloud-native architectures, data warehouse services, and distributed processing to handle peak loads efficiently.

- Automated monitoring: Deploy AI-driven monitoring tools to detect failures before they impact operations.

- Cost optimization: Use intelligent workload balancing and storage tiering to optimize resource utilization.

A data engineer spends roughly 80% of their time updating, maintaining, and guaranteeing the integrity of the data pipeline. – MarketsandMarkets

Chequered flag: Driving data through efficient pipelines with precision

Just like in racing, success in data pipelines isn’t just about speed—it’s about precision, efficiency, and reliability. Organizations that track and optimize key performance metrics ensure their data strategy stays ahead of the competition.

At Softweb Solutions, we specialize in fine-tuning data pipelines to deliver speed, accuracy, and scalability—ensuring that your business remains in pole position. Ready to accelerate your data pipeline performance? Let’s race to the future of data engineering together—connect with us to get started!