With the growing number of vehicles on the road, there is an increasing need for advanced technology to manage and monitor traffic. Traditional manual methods are impractical and prone to errors, and many machine learning algorithms struggle with real-time data processing. As a result, there is strong demand for automatic number plate recognition (ANPR) systems that identify number plates accurately.

In this blog, we will cover the following:

- What are some exciting applications of ANPR?

- What is ANPR?

- Why should you implement ANPR?

- How does automatic number plate recognition work?

What are some exciting applications of ANPR?

ANPR systems are essential for traffic monitoring and play a critical role in other systems, such as parking management and toll collection.

Here are some real-world case studies showcasing the applications of ANPR.

Law enforcement: Recently, the LA Police Department reported that its ANPR system has helped recover over 34,000 stolen vehicles and make 25,000+ arrests since its implementation. (Source: LAPD)

Parking management: A parking management system using ANPR technology cut parking time from 13 to 2.5 minutes in a University of Alberta case study. (Source: University of Alberta)

Toll collection: Using the ANPR system leads to an 11% increase in revenue for tolling agencies. (Source: International Bridge, Tunnel and Turnpike Association)

Border control: ANPR system implementation reduced illegal border crossings by 25% at the EU’s external borders from 2010 to 2017. (Source: European Commission)

Retail: A UK-based retailer, Tesco, implemented an ANPR-based parking system. It reduced parking violations by 60% and increased space availability by 50%. (Source: Parking Network)

These case studies demonstrate ANPR’s effectiveness in improving efficiency and safety across industries.

What is ANPR?

ANPR was invented in 1976 at the Police Scientific Development Branch in the UK. It uses optical character recognition (OCR) to read vehicle license plates and then compares the plate numbers against a database of vehicles of interest. ANPR is used for traffic monitoring, law enforcement, toll collection, and parking management, among other things.

ANPR systems typically include:

- Cameras,

- Software for character recognition,

- Databases for storing and searching data, and

- Hardware for processing and transmitting data.

Advances in computer vision and deep learning have made these systems far more capable, allowing them to read license plates reliably across a much wider range of lighting, weather, and viewing conditions. Overall, ANPR enhances public safety and traffic management.

Why should you implement ANPR?

Every year, a large number of people die because of traffic violations, and traffic crashes cause nearly 20–50 million non-fatal injuries. Just as PUBG restricts playtime, what if we could control drivers’ speeding behavior? What if vehicles had an automatic speed cut-off once they crossed a certain limit? If that were possible, these numbers could fall considerably.

Admittedly, ANPR doesn’t directly control drivers’ speeding behavior. However, it helps identify speeding vehicles efficiently, which enables authorities to act quickly and prevent similar incidents in the future.

ANPR systems use image processing and optical character recognition to identify and verify vehicle license plates. Today, ANPR is integral to intelligent transportation systems, supporting smart parking, law enforcement, tolling, and traffic monitoring applications.

How does automatic number plate recognition work?

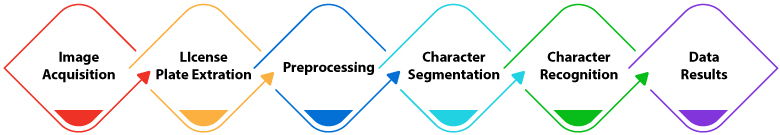

Broadly, the system works in three steps:

Image extraction: capturing an image of the vehicle as input

Image processing: processing the whole image to extract the plate region

Image recognition: segmenting and recognizing the characters on the number plate

This how-to guide describes these steps in detail, with a code snippet in each section to demonstrate how ANPR works.

Let’s look at how a sequence of algorithms converts an image of alphanumeric characters into text and displays the result.

Red, Green, Blue to grayscale conversion

Grayscale conversion maps an image from another color space, such as RGB, CMYK, or HSV, to a single channel of intensity values. We used OpenCV’s “cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)” in Python for this step. The BGR flag reflects the fact that OpenCV loads color images in blue, green, red channel order.

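```python
import cv2

# Placeholder path (hypothetical): point this at your own vehicle image
image = cv2.imread("car.jpg")
# OpenCV stores color images in BGR order, hence COLOR_BGR2GRAY
gray_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
cv2.imshow("grayed image", gray_image)
cv2.waitKey(0)
```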
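To make the grayscale step concrete, here is a minimal plain-Python sketch of the weighted sum OpenCV documents for its color-to-gray conversion, Y = 0.299·R + 0.587·G + 0.114·B. It assumes the image is a list of rows of (B, G, R) tuples, an illustrative stand-in for a NumPy array, and the function name bgr_to_gray is hypothetical. OpenCV uses fixed-point arithmetic internally, so its results may round slightly differently.

```python
def bgr_to_gray(image):
    """Convert a BGR image (list of rows of (B, G, R) tuples) to grayscale.

    Applies the luma weights Y = 0.299*R + 0.587*G + 0.114*B and rounds
    each result to the nearest integer.
    """
    gray = []
    for row in image:
        gray_row = []
        for b, g, r in row:  # BGR order, as OpenCV loads images
            y = 0.299 * r + 0.587 * g + 0.114 * b
            gray_row.append(int(round(y)))
        gray.append(gray_row)
    return gray


# A 1x3 image: pure blue, pure green, pure red (in BGR order)
pixels = [[(255, 0, 0), (0, 255, 0), (0, 0, 255)]]
print(bgr_to_gray(pixels))  # → [[29, 150, 76]]
```

Green contributes most to perceived brightness, which is why a pure green pixel ends up much lighter than a pure blue one.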

Median filtering

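Images captured at high speed, and even by fixed cameras, contain a great deal of noise, and grayscale conversion on its own does nothing to remove it. A median filter replaces each pixel with the median of its neighborhood, which suppresses isolated noisy pixels while keeping edges fairly sharp. This step is crucial because noise directly affects the recognition rate of a license plate recognition system. Note that the snippet below actually applies OpenCV’s bilateral filter, another edge-preserving smoothing filter; “cv2.medianBlur(gray_image, 5)” would apply a true median filter instead.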

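```python
# Bilateral filter (diameter 11, sigmaColor 17, sigmaSpace 17):
# smooths noise while preserving edges
gray_image = cv2.bilateralFilter(gray_image, 11, 17, 17)
cv2.imshow("smoothened image", gray_image)
cv2.waitKey(0)
```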
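Since this section describes median filtering, here is a minimal plain-Python sketch of a square median filter, the operation cv2.medianBlur performs. It assumes a grayscale image stored as a list of rows, and the function name median_filter is hypothetical. Unlike OpenCV, which replicates border pixels, this sketch simply uses whichever neighbors fall inside the image at the border.

```python
def median_filter(gray, size=3):
    """Apply a size x size median filter to a 2-D grayscale image (list of rows).

    At the border only in-image neighbors are used; for an even-length
    window the upper of the two middle values is taken.
    """
    half = size // 2
    rows, cols = len(gray), len(gray[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            window = [
                gray[j][i]
                for j in range(max(0, y - half), min(rows, y + half + 1))
                for i in range(max(0, x - half), min(cols, x + half + 1))
            ]
            window.sort()
            out[y][x] = window[len(window) // 2]
    return out


noisy = [
    [10, 10, 10, 10],
    [10, 255, 10, 10],  # 255 is a single "salt" noise pixel
    [10, 10, 10, 10],
]
print(median_filter(noisy))  # → [[10, 10, 10, 10], [10, 10, 10, 10], [10, 10, 10, 10]]
```

An averaging filter would smear the 255 into its neighbors; the median discards it entirely, which is why median filtering suits salt-and-pepper noise.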

Edge detection and dilation of BGM (binary gradient masking)

Edge detection reduces the amount of data in an image while preserving the structural properties for further image analysis. Edge detection finds sharp discontinuities in images. This is the most widely used method for detecting meaningful discontinuities in intensity values.

```python
# Canny edge detection; 30 and 200 are the lower and upper hysteresis thresholds
edged = cv2.Canny(gray_image, 30, 200)
cv2.imshow("edged image", edged)
cv2.waitKey(0)
```
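Canny edge detection starts from image gradients. The plain-Python sketch below covers only that first stage: it computes the Sobel gradient magnitude and applies a single threshold. The full Canny algorithm adds non-maximum suppression and hysteresis with two thresholds, so treat this as a simplified stand-in rather than a reimplementation of cv2.Canny. The names sobel_magnitude and edge_map are hypothetical.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(gray):
    """Gradient magnitude of a 2-D grayscale image; the one-pixel border stays 0."""
    rows, cols = len(gray), len(gray[0])
    mag = [[0.0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    p = gray[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * p
                    gy += SOBEL_Y[j][i] * p
            mag[y][x] = math.hypot(gx, gy)
    return mag


def edge_map(mag, threshold):
    """Mark pixels whose gradient magnitude reaches the threshold (no hysteresis)."""
    return [[1 if m >= threshold else 0 for m in row] for row in mag]


# A vertical step from dark (0) to bright (100)
step = [[0, 0, 100, 100] for _ in range(4)]
print(edge_map(sobel_magnitude(step), 200))
# → [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
```

Both interior columns respond because each 3×3 window straddles the step; thinning such thick responses is the job of Canny’s non-maximum suppression.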

Plate region extraction

We first determined the image’s row (r) and column values to extract regions of interest. Next, we cropped the image to rows r/3 through r and saved the resulting objects. These objects were then passed through a morphological closing operation, that is, a dilation followed by an erosion. That’s how we identified the coordinates of the license plate.

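```python
for c in cnts:
    # ... (contour approximation, shown in the character segmentation section)
    if len(approx) == 4:
        # Bounding box (x, y, width, height) of the four-sided plate contour
        x, y, w, h = cv2.boundingRect(c)
        # Crop the plate region from the original image and save it as <i>.png
        new_img = image[y:y + h, x:x + w]
        cv2.imwrite('./' + str(i) + '.png', new_img)
        i += 1
        break
```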
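The cropping to rows r/3 through r and the bounding-box step can be illustrated without OpenCV. This plain-Python sketch assumes a binary mask in which plate pixels are 1; crop_rows_from_third and bounding_rect are hypothetical helpers, the latter following the (x, y, w, h) layout of cv2.boundingRect with inclusive pixel counts, and the final slice mirrors image[y:y+h, x:x+w].

```python
def crop_rows_from_third(image):
    """Return image[r//3:r], i.e. drop the top third of an image with r rows."""
    r = len(image)
    return image[r // 3:r]


def bounding_rect(mask):
    """Return (x, y, w, h) enclosing all nonzero pixels of a binary mask, or None.

    w and h count pixels inclusively, so a single pixel gives w == h == 1.
    """
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x0, y0 = min(xs), min(ys)
    return x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1


# 6x6 mask with a hypothetical plate-like blob in rows 4-5, columns 1-4
mask = [[0] * 6 for _ in range(6)]
for row_idx in (4, 5):
    for col_idx in range(1, 5):
        mask[row_idx][col_idx] = 1

lower = crop_rows_from_third(mask)              # rows 2..5 of the original mask
x, y, w, h = bounding_rect(lower)               # coordinates relative to the crop
plate = [row[x:x + w] for row in lower[y:y + h]]  # like image[y:y+h, x:x+w]
print((x, y, w, h))  # → (1, 2, 4, 2)
```

Note that the returned y is relative to the cropped image; adding r//3 maps it back to the original frame.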

Morphological operations

Morphology is a broad category of image processing operations centered on shapes and structures inside images. Morphological operations apply a structuring element to an input image and transform it in two ways:

- Increase or decrease the objects’ size

- Open or close the gaps between objects

Among the morphological operations (dilation, erosion, opening, closing, morphological gradient, top hat, and black hat), dilation and erosion are the fundamental ones, because the others are built from them.

The erosion command is “erosion = cv2.erode(img, kernel, iterations = 1)” and the dilation command is “dilation = cv2.dilate(img, kernel, iterations = 1)”, where kernel is the structuring element (for example, a 5×5 array of ones). An opening is an erosion followed by a dilation with the same structuring element, while a closing is a dilation followed by an erosion with the same structuring element.
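Erosion, dilation, opening, and closing can be sketched on a small binary image in plain Python. This is an illustration rather than OpenCV’s implementation: it assumes a symmetric structuring element anchored at its center (so kernel reflection can be ignored), skips kernel positions that fall outside the image, and uses hypothetical helper names.

```python
def _morph(img, kernel, combine):
    """Slide a binary structuring element over img; combine() decides each output pixel."""
    kh, kw = len(kernel), len(kernel[0])
    cy, cx = kh // 2, kw // 2  # anchor at the kernel center
    rows, cols = len(img), len(img[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            values = [
                img[y + j - cy][x + i - cx]
                for j in range(kh)
                for i in range(kw)
                if kernel[j][i] and 0 <= y + j - cy < rows and 0 <= x + i - cx < cols
            ]
            out[y][x] = 1 if combine(values) else 0
    return out


def erode(img, kernel):
    # A pixel stays set only if every covered pixel is set.
    return _morph(img, kernel, lambda v: len(v) > 0 and all(v))


def dilate(img, kernel):
    # A pixel becomes set if any covered pixel is set.
    return _morph(img, kernel, any)


def opening(img, kernel):
    # Erosion followed by dilation: removes specks too small to contain the kernel.
    return dilate(erode(img, kernel), kernel)


def closing(img, kernel):
    # Dilation followed by erosion: fills small gaps and holes.
    return erode(dilate(img, kernel), kernel)


kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # 3x3 square structuring element
speck = [[1 if (y, x) == (2, 2) else 0 for x in range(5)] for y in range(5)]
hole = [[0 if (y, x) == (2, 2) else 1 for x in range(5)] for y in range(5)]
print(opening(speck, kernel) == [[0] * 5 for _ in range(5)])  # → True
print(closing(hole, kernel) == [[1] * 5 for _ in range(5)])   # → True
```

Closing is the useful one for plate extraction: dilation merges nearby character strokes into one blob, and the following erosion shrinks that blob back toward its original outline.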

Character segmentation

Character segmentation decomposes an image into sub-images of individual characters. The segmentation process divides the image into distinct parts, each containing one character that can be extracted for further processing.

There are two main approaches to image segmentation: contour-based and region-based. We used contour-based segmentation here, which is a gradient-based method: it looks for edges and boundaries where the gradient magnitude is high. Character segmentation then uses the bounding box technique.

The bounding box technique describes each image region by the smallest rectangle that encloses it. A bounding box is drawn around each letter and digit on the number plate, and the ANPR system then extracts each one.
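As a dependency-free illustration of per-character bounding boxes, the sketch below labels 4-connected foreground blobs in a binarized plate image with a breadth-first flood fill and returns one box per blob, ordered left to right. This is a connected-components approach, swapped in for OpenCV’s contour tracing because it is short to write in plain Python; the function name character_boxes and the min_area noise cutoff are hypothetical.

```python
from collections import deque


def character_boxes(binary, min_area=2):
    """Return (x, y, w, h) boxes of 4-connected foreground blobs, sorted left to right.

    binary is a list of rows of 0/1 values; blobs smaller than min_area
    pixels are treated as noise and dropped.
    """
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for sy in range(rows):
        for sx in range(cols):
            if not binary[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first flood fill collects every pixel of one blob.
            queue = deque([(sx, sy)])
            seen[sy][sx] = True
            xs, ys = [], []
            while queue:
                x, y = queue.popleft()
                xs.append(x)
                ys.append(y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < cols and 0 <= ny < rows and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(xs) >= min_area:
                x0, y0 = min(xs), min(ys)
                boxes.append((x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1))
    # Sorting by x puts the boxes in reading order for a single-line plate.
    return sorted(boxes)


plate_rows = [
    "1.111.1",
    "1.1...1",
    "1.111.1",
]
binary = [[1 if ch == "1" else 0 for ch in row] for row in plate_rows]
print(character_boxes(binary))  # → [(0, 0, 1, 3), (2, 0, 3, 3), (6, 0, 1, 3)]
```

Each box can then be cropped out and passed to the recognizer individually.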

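```python
# Find contours in the edge map (OpenCV 4.x returns contours and hierarchy)
cnts, hierarchy = cv2.findContours(edged.copy(), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)
image1 = image.copy()
cv2.drawContours(image1, cnts, -1, (0, 255, 0), 3)
cv2.imshow("contours", image1)
cv2.waitKey(0)

# Keep the 30 largest contours by area
cnts = sorted(cnts, key=cv2.contourArea, reverse=True)[:30]
screenCnt = None
image2 = image.copy()
cv2.drawContours(image2, cnts, -1, (0, 255, 0), 3)
cv2.imshow("Top 30 contours", image2)
cv2.waitKey(0)

# Take the largest contour that approximates to four corners as the plate
i = 7  # index used in the output file name, so the crop is saved as ./7.png
for c in cnts:
    perimeter = cv2.arcLength(c, True)
    approx = cv2.approxPolyDP(c, 0.018 * perimeter, True)
    if len(approx) == 4:
        screenCnt = approx
        # Crop the plate's bounding box and save it for OCR
        x, y, w, h = cv2.boundingRect(c)
        new_img = image[y:y + h, x:x + w]
        cv2.imwrite('./' + str(i) + '.png', new_img)
        i += 1
        break
```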
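cv2.approxPolyDP implements the Douglas-Peucker algorithm: it drops contour points that lie within epsilon (here 0.018 times the perimeter) of a simplified polygon, so a roughly rectangular plate outline collapses to four vertices. The plain-Python sketch below shows the idea on a closed contour given as a list of (x, y) points; the helper names are hypothetical, and OpenCV chooses its starting points on closed curves differently, so its vertex order may not match this sketch.

```python
import math


def _point_line_distance(p, a, b):
    """Perpendicular distance from p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:  # degenerate chord: fall back to point distance
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def douglas_peucker(points, epsilon):
    """Simplify an open polyline, always keeping its two endpoints."""
    if len(points) < 3:
        return list(points)
    # Find the point farthest from the chord joining the endpoints.
    dmax, index = 0.0, 0
    for k in range(1, len(points) - 1):
        d = _point_line_distance(points[k], points[0], points[-1])
        if d > dmax:
            dmax, index = d, k
    if dmax <= epsilon:
        return [points[0], points[-1]]
    # Otherwise keep that point and simplify each half recursively.
    left = douglas_peucker(points[: index + 1], epsilon)
    right = douglas_peucker(points[index:], epsilon)
    return left[:-1] + right


def approx_closed(contour, epsilon):
    """Simplify a closed contour by closing it, simplifying, and dropping the repeat."""
    return douglas_peucker(contour + [contour[0]], epsilon)[:-1]


def closed_perimeter(contour):
    return sum(math.dist(contour[k], contour[(k + 1) % len(contour)]) for k in range(len(contour)))


# A square outline with extra points along its edges
square = [(0, 0), (5, 0), (10, 0), (10, 5), (10, 10), (5, 10), (0, 10), (0, 5)]
approx = approx_closed(square, 0.018 * closed_perimeter(square))
print(approx)  # → [(0, 0), (10, 0), (10, 10), (0, 10)]
```

Four vertices remain, so this contour would pass the len(approx) == 4 plate test.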

Character recognition

Character recognition is the mechanical or electronic conversion of images of typed, handwritten, or printed text into machine-encoded text. Character recognition software scans the segmented character images, separates light and dark zones to isolate the text, and determines which letter or digit each character represents. That’s how the character recognition technique identifies each character on the license plate.
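Separating light and dark zones is commonly done by binarization. One standard choice is Otsu’s method, which OpenCV exposes through cv2.threshold with the cv2.THRESH_OTSU flag; the pipeline here does not state that it binarizes explicitly, so this is an illustrative assumption. The plain-Python sketch picks the threshold that maximizes the between-class variance of an 8-bit grayscale image; otsu_threshold and binarize are hypothetical names.

```python
def otsu_threshold(gray):
    """Return the Otsu threshold t for an 8-bit grayscale image (list of rows).

    Pixels <= t form the dark class and pixels > t the light class; t maximizes
    the between-class variance w0 * w1 * (mu0 - mu1) ** 2 (counts, not probabilities,
    which scales the variance but does not change the best t).
    """
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0    # pixel count of the dark class
    sum0 = 0  # intensity sum of the dark class
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t


def binarize(gray, t):
    # Dark pixels (<= t) become 0 and light pixels become 255.
    return [[255 if v > t else 0 for v in row] for row in gray]


gray = [[20, 20, 200, 200], [20, 20, 200, 200]]
t = otsu_threshold(gray)
print(t, binarize(gray, t))  # → 20 [[0, 0, 255, 255], [0, 0, 255, 255]]
```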

Python-tesseract (pytesseract) is a wrapper for Google’s Tesseract OCR engine. It can read all image types supported by the Pillow and Leptonica imaging libraries, including JPEG, PNG, GIF, BMP, and TIFF, which also makes it usable as a standalone invocation script for Tesseract. When used as a script, Python-tesseract prints the recognized text instead of writing it to a file.

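```python
import pytesseract

# Stop early if the contour loop found no four-sided plate candidate
if screenCnt is None:
    raise SystemExit("No four-sided contour found; no plate detected")

# Outline the detected plate on the original image
cv2.drawContours(image, [screenCnt], -1, (0, 255, 0), 3)
cv2.imshow("image with detected license plate", image)
cv2.waitKey(0)

# Crop saved by the contour loop (i started at 7)
Cropped_loc = './7.png'
cv2.imshow("cropped", cv2.imread(Cropped_loc))
# Run Tesseract OCR on the cropped plate using English language data
plate = pytesseract.image_to_string(Cropped_loc, lang='eng')
print("Number plate is:", plate)
cv2.waitKey(0)
cv2.destroyAllWindows()
```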
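Once Tesseract returns a string, an ANPR system still has to compare the plate against a database of vehicles of interest. The sketch below assumes the raw OCR text may contain spaces, punctuation, or trailing whitespace, normalizes it to uppercase letters and digits, and checks it against an in-memory set. The names normalize_plate and check_plate, the sample plate, and the watch list are all hypothetical stand-ins for a real database lookup.

```python
import re


def normalize_plate(raw_text):
    """Uppercase OCR output and keep only the letters A-Z and digits 0-9."""
    return re.sub(r"[^A-Z0-9]", "", raw_text.upper())


def check_plate(raw_text, watchlist):
    """Return (normalized plate, whether it appears in the watch list)."""
    plate = normalize_plate(raw_text)
    return plate, plate in watchlist


# Hypothetical watch list of vehicles of interest
watchlist = {"AB12CDE"}
print(check_plate(" ab12 cde\n", watchlist))  # → ('AB12CDE', True)
print(check_plate("XY99-ZZZ", watchlist))     # → ('XY99ZZZ', False)
```

Normalizing before the lookup keeps formatting differences, such as a stray space or hyphen in the OCR output, from causing a missed match.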

Conclusion

ANPR is a crucial AI vision technology, and ANPR systems are in wide use around the world. Their use cases are wide-ranging, covering smart parking management, journey time analysis, toll booth records, retail park security, and, most importantly, law enforcement.

It’s no surprise that ANPR has grown in popularity in recent years. The global automatic number plate recognition market is rapidly expanding. Disruptive ANPR projects in logistics, transportation, security and the public sector are common.

If your company wants to take advantage of this powerful technology, get in touch with us. Our computer vision AI services can help you build an ANPR system.