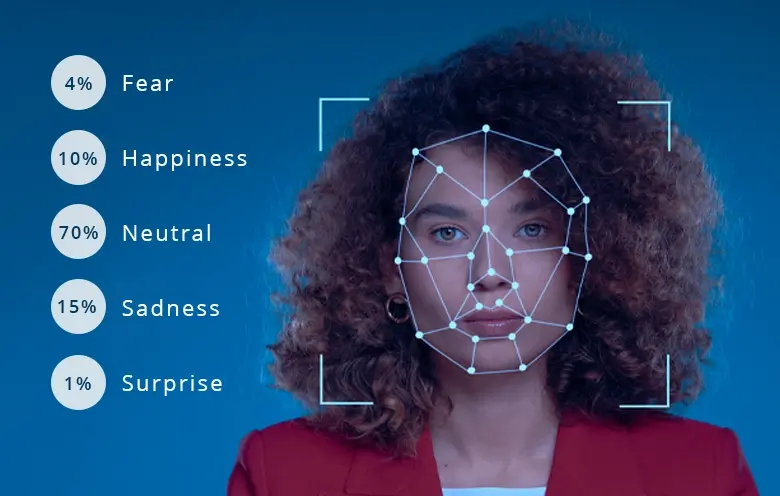

Emotion recognition is the process of identifying and categorizing human emotions, most often from facial expressions. It draws on computer vision, machine learning, and psychology to estimate a person's emotional state.

Recognizing emotions automatically was difficult until a few years ago. Today, advances in artificial intelligence and computer vision have made it practical. Several organizations use custom emotion detection software to gauge sentiment and understand human emotions and interactions. Combined with facial recognition technology, emotion recognition algorithms have become instrumental in marketing, product development, and surveillance.

In this blog, we will explore what emotion recognition is, how it works, the types of cues it uses, how to use Azure AI services for emotion recognition, its benefits, use cases, tools, and challenges.

What is emotion recognition?

Emotion recognition is a technology that uses artificial intelligence and computer vision to identify and categorize human emotions based on facial expressions. By analyzing facial expressions, AI can estimate a person's emotional state. Many systems use label sets based on the six basic emotions (happiness, sadness, anger, fear, surprise, and disgust), and some add categories such as pain or excitement. Although it grew out of computer vision, emotion recognition increasingly uses multimodal features such as visual data, speech, body language, and gestures to assess a person's emotional state.

For instance, finance organizations can gauge customers' sentiment by analyzing their speech and facial expressions, and then offer personalized service.

How does emotion recognition work?

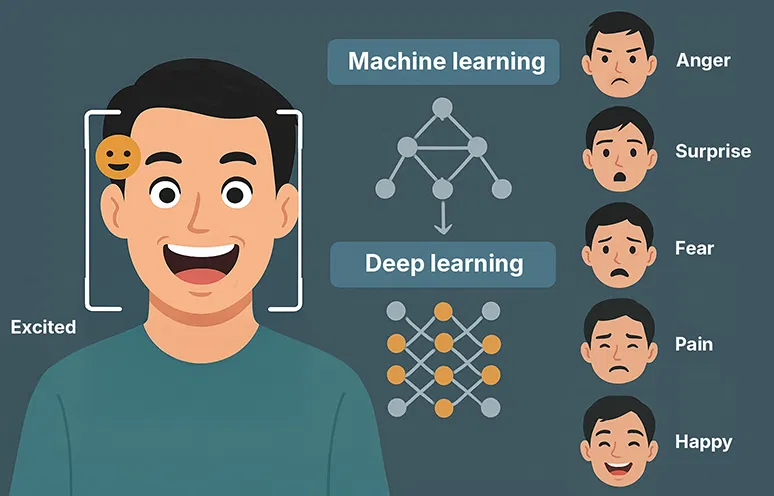

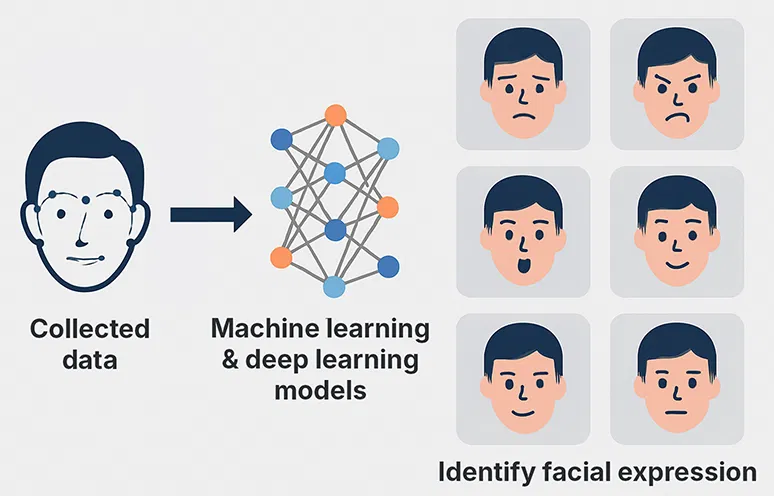

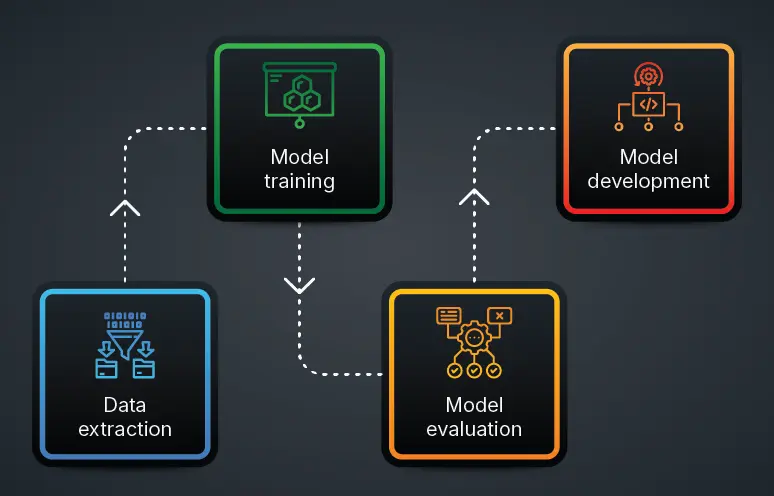

AI-based emotion recognition combines several advanced technologies, including machine learning, deep learning, and computer vision. It is based on three sequential steps:

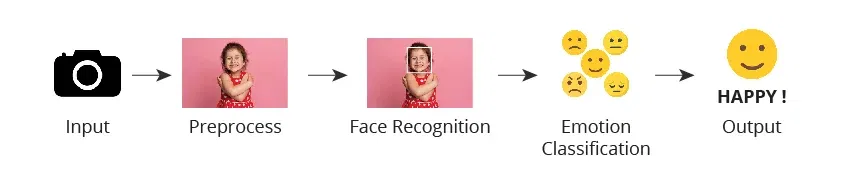

Data collection

Data is collected from sensors and cameras, and video streams are split into individual frames (images). Face detection can be challenging, especially in uncontrolled environments where people move, poses vary, and lighting is poor. Using AI algorithms with robust pose estimation and lighting correction helps ensure that faces are detected accurately in such conditions.

Image preprocessing

Bounding box coordinates are used to locate the exact face region in each image. Once the faces are located, they are optimized before being sent to the emotion classifier, which improves detection accuracy. Typical preprocessing steps include image smoothing, noise reduction, resizing, and cropping.
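A minimal sketch of these preprocessing steps in plain Python, assuming the face's bounding box is already known and the image is a grayscale grid of 0-255 values (a list of rows). The helper names are hypothetical, and the 48×48 output size is an assumption borrowed from the FER-2013 dataset; production code would typically use a library such as OpenCV or Pillow instead.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel values in 0-255


def crop(image: Image, box) -> Image:
    """Crop to box = (left, top, width, height), e.g. from a face detector."""
    left, top, width, height = box
    return [row[left:left + width] for row in image[top:top + height]]


def mean_blur(image: Image) -> Image:
    """3x3 mean filter for light smoothing/noise reduction; edges reuse the nearest pixel."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            row.append(sum(window) / 9)
        out.append(row)
    return out


def resize_nearest(image: Image, out_w: int, out_h: int) -> Image:
    """Resize with nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(image: Image) -> Image:
    """Scale 0-255 pixel values to 0.0-1.0 for the classifier."""
    return [[p / 255 for p in row] for row in image]


def preprocess_face(image: Image, box, size: int = 48) -> Image:
    """Crop -> smooth -> resize -> normalize."""
    return normalize(resize_nearest(mean_blur(crop(image, box)), size, size))
```

Blurring before resizing reduces aliasing from nearest-neighbour sampling; the order is a design choice, not a requirement.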

Feature extraction

After preprocessing, the features that matter most for identifying emotion are extracted. For faces, the system measures facial muscle movements such as eyebrow position, lip curvature, and eye openness. For speech, AI algorithms examine pitch and rhythm to gauge intensity and detect emotions.
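As a sketch of how such geometric features can be computed, the example below derives eye openness and mouth opening from facial landmarks. It assumes a landmark detector has already produced (x, y) points; the six-point eye ordering follows the eye aspect ratio (EAR) formulation commonly used for blink detection, and the function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def dist(a: Point, b: Point) -> float:
    """Euclidean distance between two landmarks."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six eye landmarks p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and p6, p5 on
    the lower lid. The ratio drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))


def mouth_opening_ratio(left: Point, right: Point, top: Point, bottom: Point) -> float:
    """Vertical lip gap relative to mouth width; larger values suggest an open mouth."""
    return dist(top, bottom) / dist(left, right)
```

Because both features are ratios, they stay roughly stable when the face is closer to or farther from the camera.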

What are the benefits of human emotion recognition?

Emotion recognition using AI helps machines interpret human behavior. This leads to improved system response and smarter communication. From refining decision-making processes to automating manual analysis, emotion recognition delivers measurable impacts. Let’s explore:

Enhanced customer service

AI can help customer support agents analyze and understand their customers' emotions. Emotion recognition helps agents keep interactions natural and empathetic, enabling them to offer personalized support that leads to higher customer satisfaction and loyalty.

Improved decision making

Emotion recognition technology helps organizations make informed decisions by interpreting the deeper context of human behavior. By understanding emotional cues, businesses can personalize their responses in customer service, finance, or healthcare, and make better-informed, more strategic decisions across platforms.

Streamlined operations

When combined with facial recognition, emotion recognition can help businesses streamline tasks like visitor registration and attendance tracking. It helps systems adapt dynamically to changing conditions without human intervention, which reduces operational delays and improves responsiveness. As a result, business operations become faster and more accurate.

Behavioral analysis

Emotion recognition technology tracks behavioral patterns and detects deviations in emotional responses. This can be applied in areas like mental health monitoring and consumer behavior analysis. By linking emotional signals to actions, organizations gain clearer insight into individual intent. This enables finance and banking organizations to provide customized service, and healthcare providers to offer personalized care.

How to use Azure AI services for emotion recognition?

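Azure AI services make it easier to detect emotions like happiness, anger, or surprise from facial expressions. Because Azure AI Face no longer returns emotion attributes, the approach below trains your own emotion classifier in Azure Custom Vision and, optionally, uses Face detection only to locate and crop faces. With a few setup steps, you can integrate it into your system.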

Azure facial emotion recognition:

Step 1 – Create Azure Custom Vision resources

1. Go to the Azure portal.

2. Create a Custom Vision resource and choose Both:

- Training resource (for training your model)

- Prediction resource (for running inference)

3. Note down:

- Training Key, Training Endpoint, Prediction Key, Prediction Endpoint, Prediction Resource ID (GUID)

Step 2 – Prepare training dataset

1. Collect face crops. You can crop them manually or with the Azure Face Detect API, which still returns face rectangles even though emotion attributes are no longer available.

2. Label each image in categories: happy, sad, angry, neutral, etc.

3. Aim for 200+ images per label from diverse demographics, lighting conditions, and angles.

4. Public datasets:

- FER-2013

- AffectNet subset
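Before uploading, it can help to check that every label meets the target image count. This sketch assumes a hypothetical folder layout of `dataset/<label>/<image>`; the 200-image minimum comes from the guideline above.

```python
from collections import Counter
from pathlib import PurePath


def samples_from_paths(paths):
    """Pair each image path with its label, taken from the parent folder name."""
    return [(p, PurePath(p).parent.name) for p in paths]


def label_counts(samples):
    """Count images per label; samples are (path, label) pairs."""
    return Counter(label for _, label in samples)


def underrepresented(samples, minimum=200):
    """Return labels with fewer than `minimum` images, mapped to their counts."""
    return {label: n for label, n in label_counts(samples).items() if n < minimum}
```

For example, `underrepresented(samples_from_paths(glob.glob("dataset/*/*.jpg")))` lists the labels that still need more images.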

Step 3 – Train the model in Azure Custom Vision portal

1. Go to the Custom Vision portal (customvision.ai).

2. Create a Classification project → Multiclass.

3. Upload your labeled face images.

4. Click Train and choose Quick Training or Advanced Training.

5. When satisfied with accuracy, click Publish:

- Give it a Published Name (e.g., facial-emotions-v1).

- Select your Prediction Resource.

Backend API Integration

We’ll use the Prediction API to classify new images.

Node.js Example:

const express = require("express");
const { PredictionAPIClient } = require("@azure/cognitiveservices-customvision-prediction");
const { ApiKeyCredentials } = require("@azure/ms-rest-js");

const app = express();
app.use(express.json());

const predictionKey = process.env.CV_PRED_KEY;
const predictionEndpoint = process.env.CV_PRED_ENDPOINT; // e.g. https://<region>.api.cognitive.microsoft.com/
const projectId = process.env.CV_PROJECT_ID; // project GUID from the Custom Vision portal
const publishedName = process.env.CV_PUBLISHED_NAME; // e.g., "facial-emotions-v1"

// Authenticate prediction calls with the Prediction-key header
const predictor = new PredictionAPIClient(
  new ApiKeyCredentials({ inHeader: { "Prediction-key": predictionKey } }),
  predictionEndpoint
);

app.post("/detect-emotion", async (req, res) => {
  const { imageUrl } = req.body;
  if (!imageUrl) {
    return res.status(400).json({ error: "imageUrl is required" });
  }
  try {
    const result = await predictor.classifyImageUrl(projectId, publishedName, { url: imageUrl });
    // Sort a copy so the highest-probability emotion tag comes first
    const ranked = [...result.predictions].sort((a, b) => b.probability - a.probability);
    const top = ranked[0];
    res.json({ emotion: top.tagName, confidence: top.probability, all: ranked });
  } catch (err) {
    res.status(500).json({ error: err.message });
  }
});

app.listen(3000, () => console.log("Facial Emotion API running on port 3000"));
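For a Python backend, the same prediction can be requested over REST. This sketch assumes the Custom Vision Prediction API v3.0 URL format (`.../customvision/v3.0/Prediction/{projectId}/classify/iterations/{publishedName}/url`) with a `Prediction-Key` header; confirm the exact URL in the Prediction URL dialog of the Custom Vision portal. It only builds the request, so it runs without credentials.

```python
import json
import urllib.request


def build_classify_request(endpoint, project_id, published_name, prediction_key, image_url):
    """Build (but do not send) a Custom Vision classify-by-URL request."""
    url = (
        f"{endpoint.rstrip('/')}/customvision/v3.0/Prediction/"
        f"{project_id}/classify/iterations/{published_name}/url"
    )
    body = json.dumps({"Url": image_url}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Prediction-Key": prediction_key, "Content-Type": "application/json"},
    )
```

To send it, `json.load(urllib.request.urlopen(req))` returns the response, whose `predictions` list contains `tagName` and `probability` fields, as used in the Node.js code above.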

End-to-End Flow

Step 1 – Image acquisition

- The user uploads an image to your blog or web app (via an HTML file input or camera capture).

Step 2 – Preprocessing (optional but recommended)

- Use the Azure Face Detect API to locate and crop the face region; cropping usually makes classification faster and more accurate.

POST https://<region>.api.cognitive.microsoft.com/face/v1.0/detect

Ocp-Apim-Subscription-Key: <FACE_KEY>

Content-Type: application/json

{ "url": "<image-url>" }

- Extract bounding box → crop → send to Custom Vision.
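A Face detection response contains a `faceRectangle` (`top`, `left`, `width`, `height`) for each detected face. The sketch below picks the largest face and adds a margin around it, clamped to the image edges, so the crop keeps some context such as the forehead and chin. The 20% margin and the function names are assumptions; the returned `(left, upper, right, lower)` tuple matches the box format that Pillow's `Image.crop` expects.

```python
def largest_face(faces):
    """Pick the face with the largest rectangle from a Face detection response."""
    return max(faces, key=lambda f: f["faceRectangle"]["width"] * f["faceRectangle"]["height"])


def crop_box(face_rect, image_width, image_height, margin=0.2):
    """Expand a faceRectangle by `margin` on each side, clamped to the image."""
    left, top = face_rect["left"], face_rect["top"]
    width, height = face_rect["width"], face_rect["height"]
    pad_x, pad_y = int(width * margin), int(height * margin)
    return (
        max(0, left - pad_x),
        max(0, top - pad_y),
        min(image_width, left + width + pad_x),
        min(image_height, top + height + pad_y),
    )
```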

Step 3 – Emotion inference

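- The backend sends the cropped face to the Custom Vision Prediction API. The code above classifies an image by URL; to send cropped image bytes instead, use the SDK's classifyImage method.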

Step 4 – Response parsing

- Receive probabilities for each emotion label.

- Pick top label and confidence.

Step 5 – Display

- Show a user-friendly message, e.g. “Detected: Happy (91% confidence)”.

- Optionally render probability bars for all labels.
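Steps 4 and 5 can be sketched as one formatting function. It assumes predictions shaped like the Custom Vision response (`tagName`, `probability`) and renders simple text bars; a real frontend would draw these in HTML or with a chart library.

```python
def format_result(predictions, bar_width=20):
    """Pick the top label and render a text bar for every label."""
    ranked = sorted(predictions, key=lambda p: p["probability"], reverse=True)
    top = ranked[0]
    lines = [f"Detected: {top['tagName'].capitalize()} ({top['probability']:.0%} confidence)"]
    for p in ranked:
        filled = round(p["probability"] * bar_width)
        lines.append(f"{p['tagName']:<10} {'#' * filled:<{bar_width}} {p['probability']:.0%}")
    return "\n".join(lines)
```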

Flow:

[User Device]

↓ Upload face image

[Blog Frontend]

↓ POST image URL/file

[Backend API]

↓ Call Azure Face Detect (crop)

↓ Call Azure Custom Vision Prediction

↓ Parse probabilities

[Backend → Frontend]

↓ Display emotion & confidence

Azure Voice emotion recognition:

Building the Voice Emotion Model

1. Train your own model

- Use public datasets (e.g., RAVDESS, CREMA-D)

- Extract audio features (MFCC, Chroma, Spectral Contrast) with librosa

- Train with scikit-learn, TensorFlow, or PyTorch

- Export to ONNX

- Deploy to an Azure Machine Learning endpoint or Azure Container Instances

2. Use a pre-trained emotion model

- Find a suitable pre-trained voice emotion recognition ONNX model

- Deploy it directly in Azure
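When training your own model on RAVDESS, a common precaution is to split the data by speaker so the same actor never appears in both training and test sets; otherwise test accuracy can look inflated. This sketch relies on RAVDESS's seven-part file naming (modality-channel-emotion-intensity-statement-repetition-actor, for example `03-01-06-01-02-01-12.wav`); the choice of test actors is arbitrary.

```python
from pathlib import PurePath

# Emotion codes from the RAVDESS file-naming convention
RAVDESS_EMOTIONS = {
    "01": "neutral", "02": "calm", "03": "happy", "04": "sad",
    "05": "angry", "06": "fearful", "07": "disgust", "08": "surprised",
}


def parse_ravdess(filename):
    """Return (emotion, actor_id) from a RAVDESS file name."""
    parts = PurePath(filename).stem.split("-")
    return RAVDESS_EMOTIONS[parts[2]], int(parts[6])


def split_by_actor(filenames, test_actors):
    """Speaker-independent split: every file from a test actor goes to the test set."""
    train, test = [], []
    for name in filenames:
        emotion, actor = parse_ravdess(name)
        (test if actor in test_actors else train).append((name, emotion))
    return train, test
```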

Python Flask API Example

Here’s a simplified API where:

- User sends a .wav or .mp3 file

- API processes it with a pre-trained model

- Returns predicted emotion & confidence

import os
import tempfile

import joblib
import librosa
import numpy as np
from flask import Flask, request, jsonify

# Load pre-trained emotion model (trained locally & uploaded to Azure)
model = joblib.load("emotion_model.pkl")  # Replace with your deployed model

app = Flask(__name__)

def extract_features(file_path):
    # Load audio at its native sample rate and average 40 MFCCs over time
    y, sr = librosa.load(file_path, sr=None)
    mfccs = np.mean(librosa.feature.mfcc(y=y, sr=sr, n_mfcc=40).T, axis=0)
    return mfccs

@app.route("/detect_voice_emotion", methods=["POST"])
def detect_emotion():
    if "file" not in request.files:
        return jsonify({"error": "No file uploaded"}), 400
    file = request.files["file"]
    # Save to a unique temp file instead of a user-controlled path like temp/<filename>
    suffix = os.path.splitext(file.filename or "")[1]
    fd, file_path = tempfile.mkstemp(suffix=suffix)
    os.close(fd)
    try:
        file.save(file_path)
        features = extract_features(file_path).reshape(1, -1)
        emotion = model.predict(features)[0]
        confidence = max(model.predict_proba(features)[0])
    finally:
        os.remove(file_path)  # don't keep users' audio on disk
    # Convert NumPy types so jsonify can serialize them
    return jsonify({"emotion": str(emotion), "confidence": float(confidence)})

if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5000)

Deployment in Azure

1. Create an Azure App Service and deploy the Flask API

2. Store large audio files in Azure Blob Storage if needed

3. Custom Model Hosting:

- Option 1: Model embedded in Flask App (simple)

- Option 2: Model deployed as an Azure Machine Learning Endpoint and Flask API just calls it

4. Call the API from your blog using JavaScript fetch with multipart/form-data

Calling the API:

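async function detectEmotionFromAudio(audioFile) {
  const formData = new FormData();
  formData.append("file", audioFile); // field name must match request.files["file"] in Flask

  const response = await fetch("https://<your-app-service>.azurewebsites.net/detect_voice_emotion", {
    method: "POST",
    body: formData // the browser sets the multipart boundary header automatically
  });
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }

  const result = await response.json();
  console.log(result); // Example: { emotion: "Happy", confidence: 0.87 }
  return result;
}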

Flow:

User speaks → Audio recorded in blog/app → Sent to Flask API

↓

Flask API (deployed in Azure App Service)

↓

Feature extraction in the Flask API (librosa) → MFCCs; pitch and energy features can be added

↓

Custom model (embedded in the Flask app or deployed as an Azure Machine Learning endpoint) → Predicts emotion (Happy, Sad, Angry, Neutral, etc.)

↓

API returns JSON: { "emotion": "Happy", "confidence": 0.87 }

How does AI interpret emotions from facial, vocal, and gestural cues?

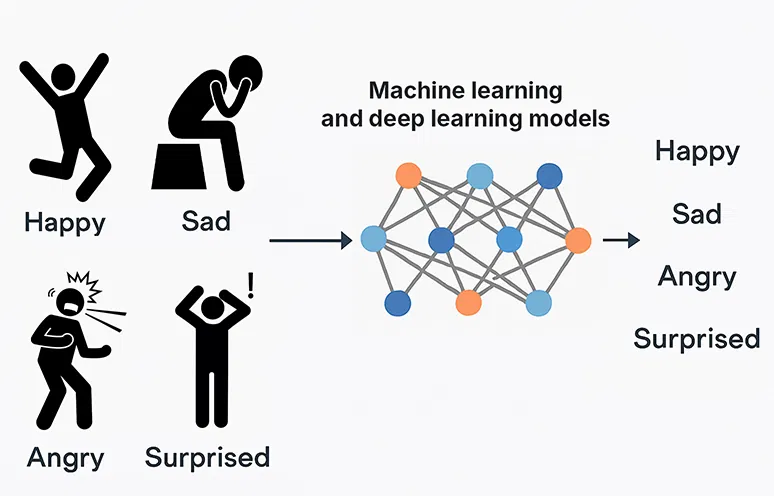

Emotion recognition uses artificial intelligence and machine learning to interpret human emotional cues such as voice, gestures, and facial expressions. Combining these modalities improves emotion detection accuracy and makes human-machine interaction more natural.

Facial expressions

Facial expressions are vital indicators of a person's emotions. AI analyzes images captured by smart cameras to detect both pronounced and subtle facial movements. Deep learning models, particularly Convolutional Neural Networks (CNNs), are widely used to classify emotions such as fear, happiness, surprise, anger, pain, and excitement. Ongoing research aims to detect subtle or concealed expressions quickly and to build this capability into robots for more human-like communication.
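A CNN classifier usually ends with one raw score (logit) per emotion, which a softmax turns into probabilities. The sketch below shows only that final step, assuming the logits come from an already trained model; the label order is illustrative.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]
```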

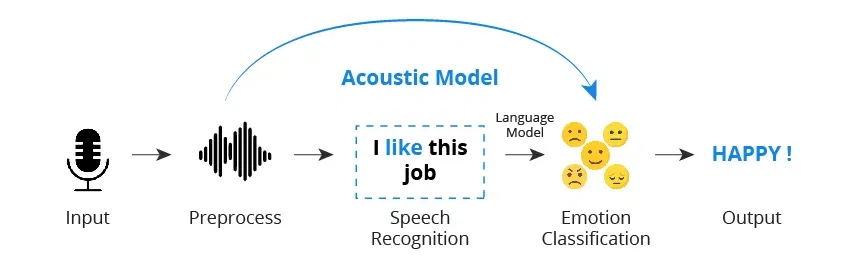

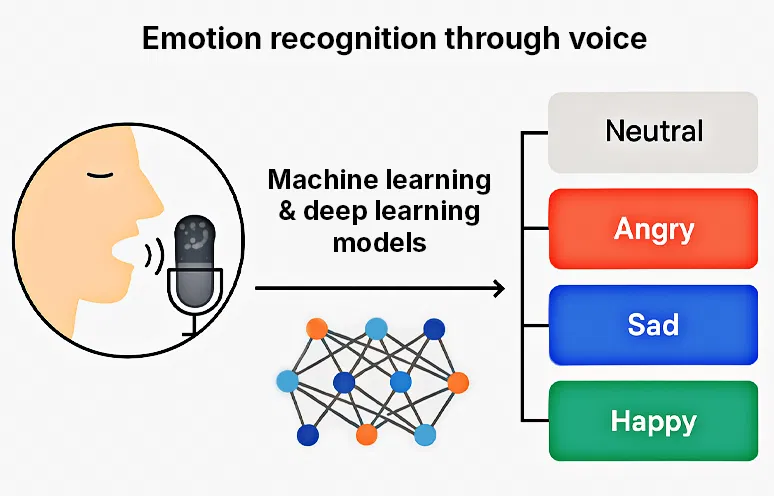

Emotion recognition through voice

AI models recognize emotions from voice using acoustic features such as speech intensity, pitch, and tone. Deep learning techniques interpret these acoustic cues, while Natural Language Processing (NLP) can add cues from the words being spoken. Changes in speech features can indicate a shift in emotion. For instance, a raised voice and fast speech may indicate excitement, while a raised voice combined with harsh words might indicate anger. However, adapting to diverse languages, accents, and speaking styles is difficult, so real-world deployment remains an active area of research.
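To illustrate how intensity can be measured from raw audio, the sketch below splits a signal into short frames and computes two classic acoustic features per frame: root-mean-square (RMS) energy, a proxy for loudness, and zero-crossing rate. It assumes mono samples as floats in [-1, 1]; the 400-sample frame and 160-sample hop correspond to 25 ms and 10 ms at an assumed 16 kHz sample rate.

```python
import math


def frames(samples, frame_len, hop):
    """Split samples into overlapping frames (a trailing partial frame is dropped)."""
    return [samples[i:i + frame_len] for i in range(0, len(samples) - frame_len + 1, hop)]


def rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign changes."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def frame_features(samples, frame_len=400, hop=160):
    """(RMS, ZCR) per frame; a rising RMS track suggests a raised voice."""
    return [(rms(f), zero_crossing_rate(f)) for f in frames(samples, frame_len, hop)]
```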

Gestural emotion recognition

Body movements and gestures also reveal a person's emotional state. AI identifies gestural emotional cues by analyzing skeletal motion, often captured with depth-sensing cameras and interpreted with Graph Convolutional Networks (GCNs). A multimodal approach tracks both facial and skeletal movement to build a more complete emotional profile. For instance, a person who points at an object while nodding is likely signaling agreement.
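To show what a GCN does with skeletal data, here is one spatial graph-convolution layer, ReLU(D^-1/2 (A + I) D^-1/2 H W), applied to a single frame. It assumes joints are graph nodes connected by bones, H holds per-joint features (for example, coordinates), and W is a learned weight matrix; models such as spatio-temporal GCNs also convolve across frames, which this sketch omits.

```python
import math


def normalized_adjacency(num_joints, edges):
    """Return D^-1/2 (A + I) D^-1/2 for an undirected skeleton graph."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)] for i in range(num_joints)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [
        [a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_joints)]
        for i in range(num_joints)
    ]


def matmul(x, y):
    """Plain-Python matrix product."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def gcn_layer(a_hat, h, w):
    """One graph convolution: each joint mixes its neighbours' features, then ReLU."""
    return [[max(0.0, v) for v in row] for row in matmul(matmul(a_hat, h), w)]
```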

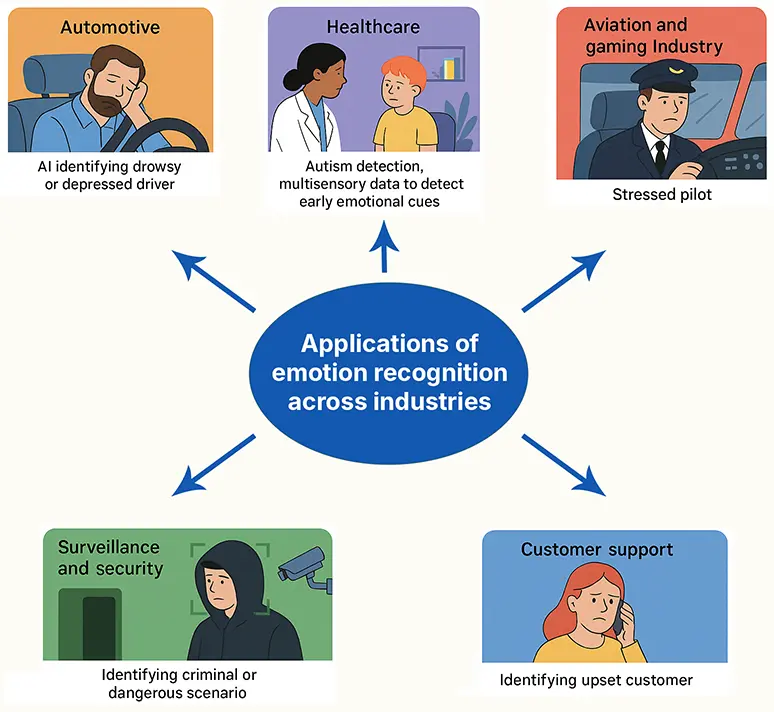

What are the applications of emotion recognition across industries?

Emotion recognition can be applied in various sectors as it offers a measurable impact. Below are the key areas where implementing emotion recognition can be helpful. Let’s explore:

Automotive

In the automotive sector, emotion recognition technology aims to improve safety and the driving experience. It identifies emotional and cognitive states such as drowsiness, intoxication, or stress, using facial analysis, eye tracking, and electrodermal activity (EDA) sensors to monitor driver alertness in real time. As the technology evolves, vehicles, including autonomous ones, may personalize the cabin environment and turn it into an emotionally intelligent space.
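Driver alertness is often summarized with PERCLOS, the proportion of time the eyes are closed over a time window. This sketch assumes per-frame eye aspect ratio (EAR) values from a facial landmark detector; both thresholds are illustrative assumptions, not calibrated values.

```python
def perclos(ear_values, closed_threshold=0.2):
    """Fraction of frames in the window whose EAR is below the 'eyes closed' threshold."""
    if not ear_values:
        return 0.0
    closed = sum(1 for ear in ear_values if ear < closed_threshold)
    return closed / len(ear_values)


def is_drowsy(ear_values, closed_threshold=0.2, perclos_limit=0.15):
    """Flag drowsiness when the eyes are closed for too large a share of the window."""
    return perclos(ear_values, closed_threshold) > perclos_limit
```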

Healthcare

Emotion recognition technology can significantly support the diagnosis and treatment of neurodevelopmental and mental health conditions. In autism screening, multisensory data is used to identify early emotional cues, helping healthcare providers intervene quickly during critical developmental stages. Emotion recognition is also used in therapy for conditions such as social anxiety, where it can suggest ways to manage stress, making therapy more adaptive and accessible.

Aviation and gaming

Emotion recognition is used in training sessions to assess how individuals respond under pressure. The aviation industry uses it to detect cognitive or emotional states such as drowsiness or stress, which makes pilot training more targeted and effective. In gaming, real-time emotion tracking allows games to adapt automatically to a player's mood, paving the way for more immersive and responsive game environments.

Surveillance and security

Facial emotion recognition algorithms are applied in surveillance and security systems to improve public safety. Deployed in public areas, the technology may help flag potentially hazardous situations early, so security personnel can stay alert and intervene before a situation escalates. This improves situational awareness and supports a prompt response in risky environments.

Customer support

Companies can gauge customers' actual sentiment by building emotion detection into their systems. From voice tone and facial expressions, they get an immediate indication of whether a customer is satisfied or frustrated. This enables organizations to offer tailored support, resolve issues faster, and provide more satisfying experiences. Over time, this emotional data can also reveal insights into customers' buying behavior and areas for improvement.

What are the challenges in implementing emotion recognition?

Implementing emotion recognition comes with its own set of challenges that can affect the reliability, acceptance, and ethical use of the technology. Below are a few challenges companies face, along with possible solutions.

Data privacy and ethical concerns

- Challenges: Emotion recognition relies on sensitive biometric and behavioral data to identify people's emotional cues, which raises ethical and privacy concerns. Misuse or overreach can erode customer trust and violate data protection laws.

- Solutions: Secure sensitive data with strong data security and governance policies, and ensure compliance with regulations such as the GDPR. Obtaining user consent and clearly communicating how the data will be used helps build trust.
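As one illustration of these safeguards, the sketch below stores an emotion result only when consent has been given, keeps no image or audio data, and replaces the user ID with a keyed hash (HMAC-SHA256). The record layout and function names are hypothetical. Pseudonymized data generally still counts as personal data under the GDPR, so this complements rather than replaces a governance policy.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed hash of the user ID; without the key it cannot be recomputed from a guess."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def make_record(user_id, emotion, confidence, consent_given, secret_key):
    """Return a storable record, or None when the user has not consented."""
    if not consent_given:
        return None
    return {
        "subject": pseudonymize(user_id, secret_key),
        "emotion": emotion,
        "confidence": round(confidence, 2),
    }
```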

Inaccurate or biased predictions

- Challenges: Data biases and cultural differences can cause an AI system to misinterpret emotions. In real-world deployments, this can lead to system failures or unfair outcomes.

- Solutions: Keep training the model on diverse, real-world data. Organizations should also use bias detection tools and cross-cultural emotion benchmarks.
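A simple starting point for bias detection is to compare accuracy across demographic or cultural groups on a labeled evaluation set. This sketch assumes each evaluation record is tagged with a group; a large gap between the best- and worst-served group is a signal to collect more diverse data.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label)."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += truth == pred  # True counts as 1
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(records):
    """Difference between the best and worst per-group accuracy."""
    acc = accuracy_by_group(records)
    return max(acc.values()) - min(acc.values())
```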

Multimodal data integration complexity

- Challenges: Combining data from all three modalities (facial expressions, voice, and gestures) can be technically complex and computationally intensive. Misalignment or conflicting interpretations between modalities can reduce emotion recognition accuracy.

- Solutions: Use synchronized data capture techniques and algorithms designed for multimodal emotion analysis. Modular system design also helps isolate and fine-tune each input stream independently.
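One common modular design is late fusion: each modality runs its own classifier, and their probability outputs are combined afterwards. The sketch below uses a weighted average and skips modalities that produced no prediction; it assumes the inputs already come from the same synchronized time window, and the weights are hypothetical.

```python
def fuse(modality_probs, weights):
    """Weighted average of per-modality emotion distributions.

    modality_probs maps a modality name to {label: probability}, or None when
    that modality produced no prediction (e.g. the face was not visible).
    """
    available = {m: p for m, p in modality_probs.items() if p is not None}
    if not available:
        raise ValueError("no modality produced a prediction")
    total_w = sum(weights[m] for m in available)
    labels = set().union(*(p.keys() for p in available.values()))
    return {
        label: sum(weights[m] * p.get(label, 0.0) for m, p in available.items()) / total_w
        for label in labels
    }
```

Because each modality is scored independently, one stream can be retrained or reweighted without touching the others.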

What are emotion recognition analysis technologies and tools?

Emotion recognition relies on advanced technologies that analyze human expressions, voice, and physiological signals to interpret emotional states. These tools combine AI, computer vision, and deep learning to deliver accurate, real-time insights across various applications.

- Facial Expression Recognition (FER): Uses computer vision and deep learning to detect emotional states from facial cues.

- Speech Emotion Recognition (SER): Analyzes vocal tone, pitch, and rhythm to interpret emotions from speech.

- Physiological signal analysis: Uses data such as heart rate, electrodermal activity (EDA), and electromyography (EMG) to identify emotional responses.

- Multimodal emotion recognition systems: Integrate facial, vocal, and bodily cues for higher accuracy in emotion detection.

- Graph Neural Networks (GNNs) and deep learning models: Model relationships between points such as facial landmarks or skeleton joints as graphs, and learn emotion patterns from structured and unstructured data.
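As an example of physiological signal analysis, heart rate and heart rate variability (HRV) can be derived from the intervals between heartbeats (RR intervals). This sketch computes mean heart rate and RMSSD, a standard short-term HRV measure, assuming RR intervals in milliseconds from a wearable sensor. Lower HRV is often associated with stress, but on its own it does not identify a specific emotion.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    if not diffs:
        raise ValueError("need at least two RR intervals")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate(rr_intervals_ms):
    """Average heart rate in beats per minute."""
    return 60000 / (sum(rr_intervals_ms) / len(rr_intervals_ms))
```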

Embrace emotion recognition to improve real-time responsiveness

Emotion recognition is a powerful enabler of more intuitive, responsive, and human-centered technology. By adopting emotion AI, businesses gain deeper behavioral insights, deliver more personalized experiences, and respond with greater empathy and precision. The result is smarter decision-making, stronger engagement, and a competitive edge in today’s experience-driven world.

Now’s the time to act. Integrate emotion recognition into your digital strategy and utilize the full potential of emotionally intelligent systems.

FAQs

1. What are the features of emotion recognition?

Emotion recognition systems typically detect and interpret facial expressions, vocal tones, and physiological signals like heart rate or skin conductance. These features help identify emotional states such as happiness, anger, stress, or fear in real time. Advanced systems may also analyze gestures, posture, and eye movement for deeper emotional insights.

2. Why is it important to recognize emotions?

Recognizing emotions helps organizations better understand how people feel, think, and react in different situations. This insight can improve communication, strengthen relationships, and support smarter, more empathetic decisions. It enables systems to respond more naturally and effectively to human needs.

3. How can emotion recognition benefit businesses?

Emotion recognition helps businesses better understand how customers and employees feel in real time. This insight can improve customer experience, personalize marketing, and support more empathetic workplace interactions. Ultimately, it leads to stronger engagement and smarter decision-making.

4. What is a face emotion recognition system?

A face emotion recognition system uses artificial intelligence to analyze facial expressions and identify a person’s emotional state like happiness, anger, or surprise. It detects key facial features and interprets subtle muscle movements. This technology is widely used in areas like healthcare, security, marketing, and customer service to better understand human behavior.