Customer background

Our client is well known for its cutting-edge cancer treatment approaches built on a thorough knowledge of chromosomal instability (CIN). They use technology to identify cancerous cells more quickly and are developing novel strategies that target the vulnerabilities of those cells.

Challenges

The company’s primary goal is to catch cancer in its early stages.

“Early cancer detection and treatment can result in a very high cure rate, with an average five-year survival rate of over 90% in many cases,” according to the American Cancer Society.

With the help of biologists, the client was able to detect cancerous cells by studying chromosomal instability (CIN) in images of the cells. CIN is caused by chromosome segregation errors during mitosis, which result in structural and numerical chromosomal abnormalities.

However, the process of image analysis by biologists to determine whether a cell is cancerous or not is tedious, expensive and time-consuming. Therefore, our client was looking to speed up the process of detecting cancerous cells, provide accurate reports to their customers promptly and develop a cost-effective automatic model that was nearly as accurate as an expert’s diagnosis.

Solutions

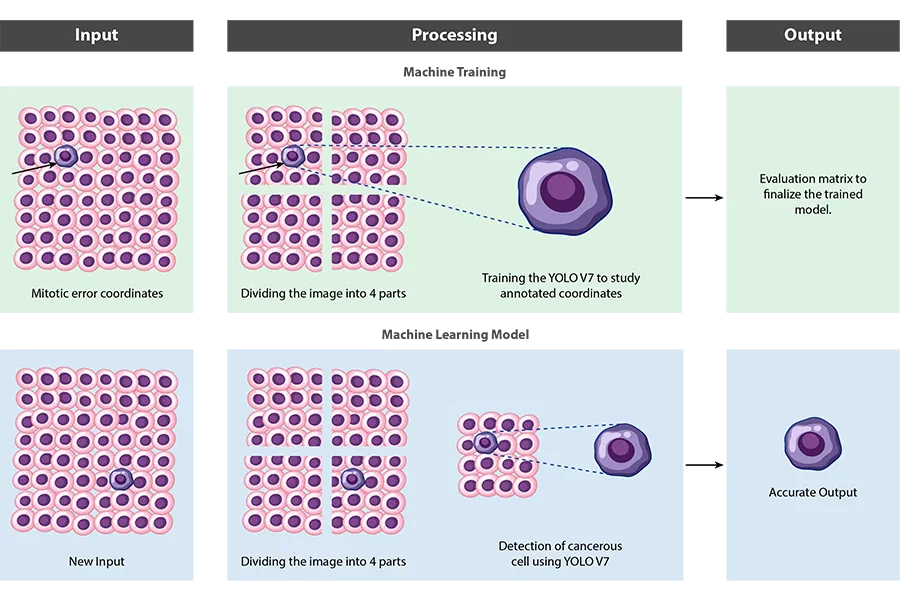

Our team carefully reviewed the client’s current operational procedures and assessed their needs. The biologists detected abnormalities in the blood cell reports and located the coordinates in each image. At a later stage, our machine learning experts used these coordinates to train the model for cancer detection using computer vision.

Once all the prerequisites were ready, the next step was to prepare the data to train the model. We began by feeding these images of varying resolutions and sizes annotated with coordinates into the system as part of the data labelling process.

The first challenge we faced was reducing the time the system takes to process 4K images. To get around this, we divided each image into four parts and sent every part, along with the coordinates we had received earlier, for further processing. This step trains the model to detect chromosomal abnormalities in blood cell images. If a coordinate falls on the line along which the image is divided, our team created logic to handle that case as well: it shifts the focus so that the abnormality is still highlighted.
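The splitting step can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the production logic: it assumes each annotation is a pixel bounding box `(x_min, y_min, x_max, y_max)`, and it interprets "shifting the focus" as sliding a quadrant-sized crop window just far enough to contain a box that crosses the split line. The names `tile_origin` and `split_annotations` are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed annotation format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[int, int, int, int]


def tile_origin(box: Box, width: int, height: int) -> Tuple[int, int]:
    """Return the top-left corner of a quadrant-sized tile that contains the box."""
    tile_w, tile_h = width // 2, height // 2
    x_min, y_min, x_max, y_max = box
    if x_max - x_min > tile_w or y_max - y_min > tile_h:
        raise ValueError("box is larger than a single tile")
    # Start from the quadrant that holds the box's top-left corner.
    x0 = 0 if x_min < tile_w else tile_w
    y0 = 0 if y_min < tile_h else tile_h
    # If the box crosses the split line, shift the tile just enough to cover it.
    if x_max > x0 + tile_w:
        x0 = x_max - tile_w
    if y_max > y0 + tile_h:
        y0 = y_max - tile_h
    return x0, y0


def split_annotations(
    boxes: List[Box], width: int, height: int
) -> Dict[Tuple[int, int], List[Box]]:
    """Group boxes by tile origin and convert them to tile-local coordinates."""
    tiles: Dict[Tuple[int, int], List[Box]] = {}
    for box in boxes:
        x0, y0 = tile_origin(box, width, height)
        local = (box[0] - x0, box[1] - y0, box[2] - x0, box[3] - y0)
        tiles.setdefault((x0, y0), []).append(local)
    return tiles
```

Each key `(x0, y0)` identifies a crop of size `width // 2` by `height // 2`. Boxes that sit entirely inside one quadrant keep that quadrant's origin, while a box on the split line gets its own shifted crop.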

Softweb Solutions designed similar logic to process images of 3K and 2K resolution.

Our team leveraged two algorithms for machine learning training:

- YOLO V7: The “You only look once” algorithm scans the entire image just once, in real time, to detect objects, which makes it the faster option in terms of processing speed.

- CenterNet++: This algorithm detects objects using triplet key points, namely the top-left corner, the bottom-right corner and the center key point. It first groups the corners based on various cues and then confirms the objects based on the center key points. This approach makes the algorithm more accurate, but it takes more time.
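To illustrate the center key point idea, the sketch below checks whether a detected center point falls inside the central region of a box formed by a corner pair. It is a simplified illustration of that confirmation step only, not the full algorithm; the function name `center_confirms_box` and the default scale `n = 3` (which makes the central region the middle third of the box) are assumptions chosen for the example.

```python
from typing import Tuple


def center_confirms_box(
    box: Tuple[float, float, float, float],
    center: Tuple[float, float],
    n: int = 3,
) -> bool:
    """Return True if `center` lies in the central region of `box`.

    `box` is (x_min, y_min, x_max, y_max), built from a top-left and a
    bottom-right corner. For odd n, the central region is the middle 1/n
    of the box along each axis.
    """
    x_min, y_min, x_max, y_max = box
    # Bounds of the central region along each axis.
    left = ((n + 1) * x_min + (n - 1) * x_max) / (2 * n)
    right = ((n - 1) * x_min + (n + 1) * x_max) / (2 * n)
    top = ((n + 1) * y_min + (n - 1) * y_max) / (2 * n)
    bottom = ((n - 1) * y_min + (n + 1) * y_max) / (2 * n)
    cx, cy = center
    return left <= cx <= right and top <= cy <= bottom
```

A corner pair whose box has no center key point in this region would be discarded, which is the extra check that costs time but removes false detections.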

Our team chose the YOLO V7 model and modified it to make it more accurate. This was based on the client’s need to reduce processing time and create a more efficient model for cancer detection using computer vision.

We tested the model with different sets of images and compared the output to biologists’ diagnoses; the results were identical.
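One way such a comparison could be automated is sketched below. It assumes both the model output and the biologists' annotations are available as pixel bounding boxes, and it treats an image as a match when every expert box pairs with a distinct predicted box at an intersection-over-union (IoU) of at least 0.5. The threshold, the box format and the function names are illustrative assumptions, not details of the delivered system.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def matches_expert(
    predicted: List[Box], expert: List[Box], threshold: float = 0.5
) -> bool:
    """True when predicted and expert boxes pair up one-to-one (greedy matching)."""
    if len(predicted) != len(expert):
        return False
    unused = list(predicted)
    for expert_box in expert:
        # Pick the remaining prediction that overlaps this expert box the most.
        best = max(unused, key=lambda p: iou(p, expert_box), default=None)
        if best is None or iou(best, expert_box) < threshold:
            return False
        unused.remove(best)
    return True
```

Running `matches_expert` over a held-out set of images gives a per-image agreement flag that can be summed into an overall agreement rate.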

- 20% cost savings by avoiding unnecessary biopsies

- 5X cheaper than analysis by biologists

- 8X less time-consuming

Industry

Technologies / Platforms / Frameworks

Python, PyTorch, Azure cloud, Databricks

Benefits

- Accurate cancer cell detection

- Reduced diagnosis time

- Accurate diagnosis in less than a minute
