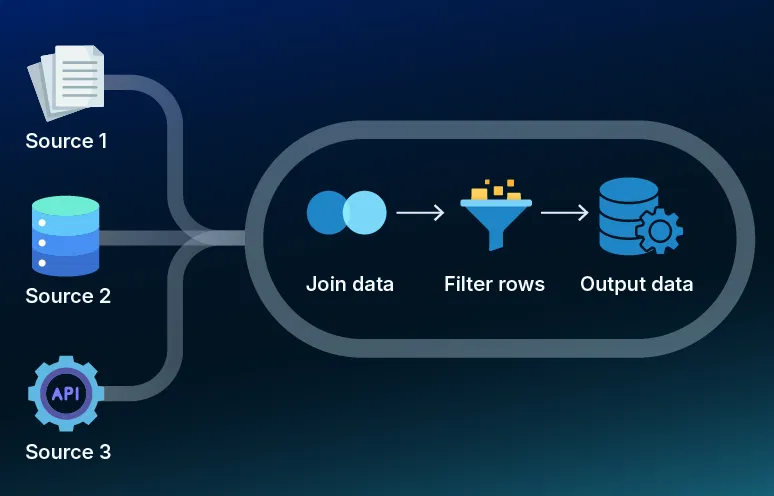

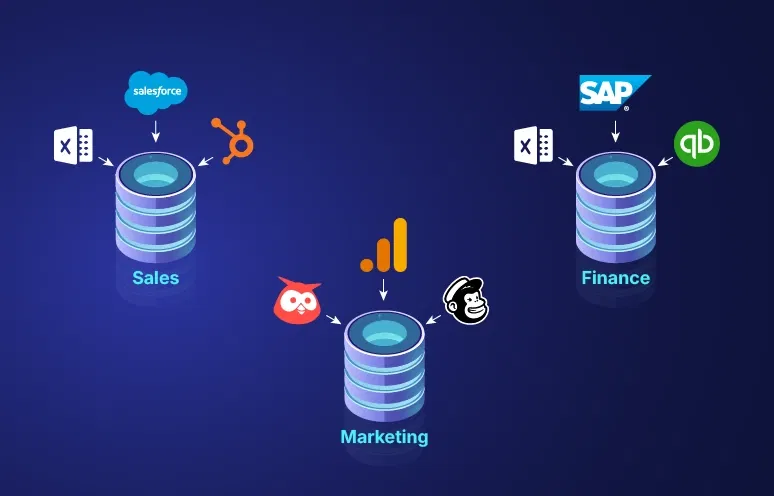

Organizations produce CRM records, transaction logs, operational data and legacy reports in parallel, and each source arrives in its own format and keeps evolving. To generate dependable insights, you need a clear blueprint for how tables, attributes and relationships interconnect. The right data modeling techniques deliver that blueprint, enforcing consistency and preserving query speed as volumes grow.

Strong business data modeling bridges technical design with real objectives: more accurate forecasts, cleaner dashboards and decisions the entire organization can trust. Whether you’re building a new cloud data warehouse or refining an existing analytics stack, the modeling choices you make today affect maintenance effort and performance tomorrow.

This blog post explores seven traditional and modern data modeling techniques, highlights where each excels and walks through real-world use cases along with the tools commonly used to support them. You’ll also see how these techniques lay the groundwork for a scalable, insight-ready architecture, often shaped through the practical expertise of data engineering consulting services.

What is data modeling?

Data modeling is the process of visually and structurally defining how data is stored, connected, and accessed within a system. It provides a clear framework that keeps data consistent, efficient and easy to understand across teams. For business functions, data modeling turns processes such as customer interactions, financial transactions and supply-chain events into structured schemas that mirror real-world operations.

Effective data models ensure that everyone, from technical teams to business users, works with the same definitions and relationships. They also serve as the foundation for designing databases, building analytics solutions, and ensuring data integrity as systems scale.

Types of data modeling

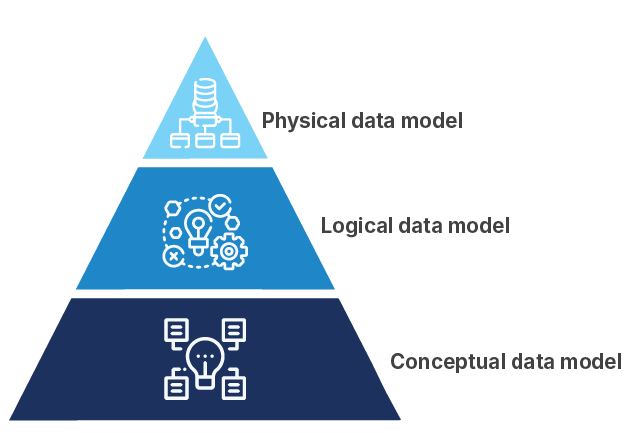

Before selecting a specific modeling technique, it’s important to understand the three core layers that shape most data architecture strategies. These data modeling concepts define the level of abstraction used to represent data and its structure:

- Conceptual data model: This is the high-level view. It outlines key business entities and how they relate to one another without getting into technical detail. It’s designed for aligning stakeholders on the overall data vision.

- Logical data model: This layer introduces more details, such as data attributes, types, and relationships. It remains technology-agnostic but supports deeper discussions between analysts, architects, and system designers.

- Physical data model: This is the implementation blueprint. It maps data structures to actual database systems, including tables, columns, indexes, and storage details. It’s critical for performance tuning and platform-specific optimization.

Insight into all three layers empowers teams to craft systems that adapt to growth while keeping technical work focused on business outcomes.
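To make the three layers concrete, the sketch below walks a single hypothetical Customer/Order example from concept to implementation. The entity names, attributes and the index are illustrative assumptions, and SQLite (through Python’s standard `sqlite3` module) stands in for whatever database a real physical model would target.

```python
import sqlite3
from dataclasses import astuple, dataclass

# Conceptual layer: which business entities exist and how they relate (no attributes yet).
CONCEPTUAL = [("Customer", "places", "Order")]

# Logical layer: attributes and types, still independent of any database product.
@dataclass
class Customer:
    customer_id: int
    name: str
    email: str

@dataclass
class Order:
    order_id: int
    customer_id: int  # refers to Customer.customer_id
    order_date: str   # ISO-8601 date, e.g. "2024-01-15"
    total: float

# Physical layer: tables, column types, keys and an index for one specific engine (SQLite here).
PHYSICAL_DDL = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT UNIQUE
);
CREATE TABLE orders (            -- "order" is a reserved word in SQL
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    order_date  TEXT NOT NULL,
    total       REAL NOT NULL
);
CREATE INDEX idx_orders_customer ON orders(customer_id);  -- speeds up lookups by customer
"""

conn = sqlite3.connect(":memory:")
conn.executescript(PHYSICAL_DDL)

# Logical objects map onto physical rows (field order matches column order).
c = Customer(1, "Acme Corp", "billing@acme.example")
o = Order(10, c.customer_id, "2024-01-15", 250.0)
conn.execute("INSERT INTO customer VALUES (?, ?, ?)", astuple(c))
conn.execute("INSERT INTO orders VALUES (?, ?, ?, ?)", astuple(o))

tables = sorted(r[0] for r in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'"))
print(tables)  # ['customer', 'orders']
```

The conceptual layer names only entities and relationships, the logical dataclasses add attributes and types without committing to a database, and only the DDL deals with engine-specific details such as column types and indexes.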

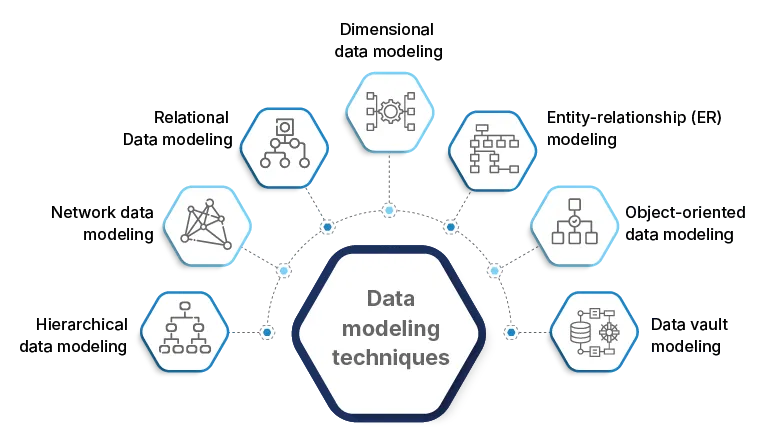

7 data modeling techniques and their applications

Each business scenario calls for a modeling strategy that excels under those specific conditions. When teams align methods to their use cases, they get architectures that scale predictably, deliver fast queries and keep data management clear. Below are some of the most effective data modeling techniques to consider:

1. Hierarchical data modeling

Hierarchical modeling arranges data in a strict parent-child hierarchy, similar to a tree structure. Every record except the root has exactly one parent and can have multiple children, which makes one-to-many relationships straightforward to navigate. It works well when the hierarchy is fixed and predictable, as in organizational charts or file systems. While it offers fast retrieval along predefined paths, it becomes inflexible when relationships change or when a record needs to belong to more than one parent.

- Good fit for: Data with fixed, predictable reporting lines or categories

- Use case: Customer account hierarchies in financial systems or product taxonomies in basic inventory applications
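A minimal Python sketch of a hypothetical product taxonomy shows both the strength and the limit of the hierarchical model. Each node stores a single parent pointer, so walking from any record up to the root follows one direct, predefined path. The category names and the `path_to_root` and `subtree` helpers are illustrative only.

```python
from collections import defaultdict

# Hypothetical product taxonomy: each node maps to exactly one parent (None marks the root).
PARENT = {
    "All products": None,
    "Electronics": "All products",
    "Laptops": "Electronics",
    "Phones": "Electronics",
    "Furniture": "All products",
    "Desks": "Furniture",
}

# Derive the one-to-many parent -> children view from the parent pointers.
CHILDREN = defaultdict(list)
for node, parent in PARENT.items():
    if parent is not None:
        CHILDREN[parent].append(node)

def path_to_root(node: str) -> list[str]:
    """Follow the single parent pointer upward: the predefined path a hierarchy is fast at."""
    path = []
    while node is not None:
        path.append(node)
        node = PARENT[node]
    return path

def subtree(node: str) -> list[str]:
    """Depth-first walk of a node and everything below it."""
    result = [node]
    for child in CHILDREN[node]:
        result.extend(subtree(child))
    return result

print(path_to_root("Laptops"))  # ['Laptops', 'Electronics', 'All products']
print(subtree("Electronics"))   # ['Electronics', 'Laptops', 'Phones']
```

Because `PARENT` maps every node to exactly one parent, an item that belongs in two categories (a standing desk sold under both Furniture and Electronics, say) cannot be represented without duplicating it, which is the inflexibility described above.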

2. Network data modeling

Network data modeling, standardized by the CODASYL Data Base Task Group, generalizes the hierarchical approach: records are connected through owner-member sets, so a record can have multiple parents as well as multiple children, forming a graph rather than a tree. This approach handles complex many-to-many relationships and performs well in environments like interconnected service offerings or multi-agent systems, although queries must navigate the predefined links between records.

- Good fit for: Environments with multi-directional relationships

- Use case: Telecom billing systems or logistics networks where entities connect in multiple ways
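The sketch below is a toy, in-memory illustration of the owner-member set idea behind network databases, not the API of IDMS or any other product. The `NetworkDB` class, set names and shipment records are hypothetical: each shipment is owned by both a carrier and a warehouse, which a strict hierarchy could not express.

```python
from collections import defaultdict

class NetworkDB:
    """Toy network-model store. Named sets link one owner record to many member records,
    and a member may belong to several sets, so it can have several owners (parents)."""

    def __init__(self):
        self._members = defaultdict(list)  # (set_name, owner) -> [member, ...]
        self._owners = defaultdict(list)   # member -> [(set_name, owner), ...]

    def connect(self, set_name, owner, member):
        self._members[(set_name, owner)].append(member)
        self._owners[member].append((set_name, owner))

    def members_of(self, set_name, owner):
        return list(self._members.get((set_name, owner), []))

    def owners_of(self, member):
        return list(self._owners.get(member, []))

db = NetworkDB()
# Hypothetical logistics data: every shipment has two owners, a carrier and a warehouse.
db.connect("carries", "CarrierA", "SHP-1")
db.connect("carries", "CarrierA", "SHP-2")
db.connect("ships_from", "WarehouseNorth", "SHP-1")
db.connect("ships_from", "WarehouseSouth", "SHP-2")

print(db.owners_of("SHP-1"))  # [('carries', 'CarrierA'), ('ships_from', 'WarehouseNorth')]

# Navigate carrier -> shipments -> warehouses through the shared member records.
warehouses = sorted({owner
                     for shipment in db.members_of("carries", "CarrierA")
                     for set_name, owner in db.owners_of(shipment)
                     if set_name == "ships_from"})
print(warehouses)  # ['WarehouseNorth', 'WarehouseSouth']
```

The final query hops from a carrier to its shipments and on to their warehouses, which is how a network model expresses a many-to-many relationship: through explicit, navigable links rather than ad hoc joins.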

3. Relational data modeling

Relational modeling stores entities in tables, with primary and foreign keys defining the relationships between them. This standardized approach supports normalization, which minimizes redundancy and strengthens data integrity. It is the foundation for most modern transactional and analytical systems, and SQL is its native query language. Relational database systems also support schema evolution and provide robust ACID transactions.

- Good fit for: Transactional systems that require data consistency and flexibility

- Use case: Customer management, order processing and financial records that also feed data science development
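Here is a minimal relational sketch using SQLite from Python’s standard library, assuming a hypothetical customer and orders schema. It shows a normalized design (customer details stored once and referenced by key), a join that reassembles the data, and a foreign key rejecting an orphaned order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is enabled

conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    total       REAL NOT NULL
);
""")

# Normalized: customer details are stored once; each order refers to them by key.
conn.execute("INSERT INTO customer VALUES (1, 'Acme Corp', 'billing@acme.example')")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(10, 1, 250.0), (11, 1, 99.5)])
conn.commit()

rows = conn.execute("""
    SELECT c.name, COUNT(o.order_id), SUM(o.total)
    FROM customer c
    JOIN orders o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id, c.name
""").fetchall()
print(rows)  # [('Acme Corp', 2, 349.5)]

# Referential integrity: an order for a customer that does not exist is rejected.
fk_rejected = False
try:
    conn.execute("INSERT INTO orders VALUES (12, 999, 10.0)")
except sqlite3.IntegrityError as exc:
    fk_rejected = True
    print("rejected:", exc)
conn.rollback()
```

The `PRAGMA` line is SQLite-specific; most other relational databases enforce declared foreign keys by default.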

4. Dimensional data modeling

Dimensional modeling organizes warehouse data into two table types: fact tables, which hold numeric measures such as revenue or quantity, and dimension tables, which hold descriptive context such as dates, products or customers. The resulting star schema (or snowflake schema, when dimensions are further normalized) optimizes queries by separating measurements from descriptive information, making reports and dashboards faster and easier to build. By clearly defining how facts and dimensions relate, dimensional models simplify data retrieval and support scalable analytics.

- Good fit for: Data warehouses and BI platforms focused on analytics speed

- Use case: Creating data warehouses and data marts for business intelligence and analytics
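A small star schema makes the fact/dimension split concrete. This sketch assumes a hypothetical sales mart in SQLite with one fact table and two dimension tables; a production warehouse would add surrogate-key management, more dimensions and far more rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive context used to slice and filter.
CREATE TABLE dim_date (
    date_key INTEGER PRIMARY KEY,   -- e.g. 20240115
    year     INTEGER NOT NULL,
    month    INTEGER NOT NULL
);
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    category    TEXT NOT NULL
);
-- Fact table: one row per sale line (the grain), holding measures plus dimension keys.
CREATE TABLE fact_sales (
    date_key    INTEGER NOT NULL REFERENCES dim_date(date_key),
    product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
    quantity    INTEGER NOT NULL,
    revenue     REAL NOT NULL
);
INSERT INTO dim_date VALUES (20240115, 2024, 1), (20240210, 2024, 2);
INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
INSERT INTO fact_sales VALUES
    (20240115, 1, 2, 2000.0),
    (20240115, 2, 1,  300.0),
    (20240210, 1, 1, 1000.0);
""")

# Typical BI query: aggregate the facts and label them with dimension attributes.
report = conn.execute("""
    SELECT d.year, d.month, p.category, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_date d    ON d.date_key = f.date_key
    JOIN dim_product p ON p.product_key = f.product_key
    GROUP BY d.year, d.month, p.category
    ORDER BY d.year, d.month, p.category
""").fetchall()
for row in report:
    print(row)
# (2024, 1, 'Electronics', 2000.0)
# (2024, 1, 'Furniture', 300.0)
# (2024, 2, 'Electronics', 1000.0)
```

Every report follows the same pattern of joining the fact table to the dimensions it needs, which is what makes dimensional schemas predictable for BI tools and analysts.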

5. Entity-relationship (ER) modeling

Entity-relationship modeling uses diagrams to represent entities, attributes and relationships in a logical framework. It helps stakeholders and developers agree on data requirements before implementation. ER models specify cardinality (one-to-one, one-to-many, many-to-many) and optionality, which makes it clear how entities interact, and they lay a solid foundation for both relational and NoSQL implementations. That same map of entities and relationships also makes it easier to design insightful dashboards, and data visualization consulting services can help translate your ER diagrams into interactive, business-ready reports.

- Good fit for: Early-stage database design and requirement gathering

- Use case: Collaborative design of customer, order and inventory systems with clear entity relationships for dashboard development
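ER diagrams are usually drawn in diagramming tools, but turning a finished diagram into tables follows well-known rules. This Python sketch encodes a hypothetical customer/order/product ER model (a one-to-many relationship with optionality and a many-to-many relationship) and applies the common textbook mapping to emit SQLite DDL. The `Relationship` structure and `to_ddl` function are illustrative assumptions, not the format of any ER tool.

```python
import sqlite3
from dataclasses import dataclass

@dataclass(frozen=True)
class Relationship:
    name: str
    left: str               # the "one" side for 1:N; either side for M:N
    right: str              # the "many" side for 1:N
    cardinality: str        # "1:N" or "M:N"
    optional: bool = False  # 1:N only: may a right-side row exist without a left-side one?

# Hypothetical ER model: table name -> (primary key column, other attribute columns).
ENTITIES = {
    "customer": ("customer_id", ["name TEXT NOT NULL"]),
    "orders":   ("order_id", ["order_date TEXT NOT NULL"]),
    "product":  ("product_id", ["name TEXT NOT NULL"]),
}
RELATIONSHIPS = [
    Relationship("places", "customer", "orders", "1:N"),  # every order must have a customer
    Relationship("contains", "orders", "product", "M:N"),
]

def to_ddl(entities, relationships):
    """Apply the usual ER-to-relational mapping rules and return SQLite DDL."""
    cols = {t: [f"{pk} INTEGER PRIMARY KEY", *attrs] for t, (pk, attrs) in entities.items()}
    junctions = []
    for rel in relationships:
        lpk, rpk = entities[rel.left][0], entities[rel.right][0]
        if rel.cardinality == "1:N":
            # The "many" side carries the "one" side's key; optionality decides NULL-ability.
            null = "" if rel.optional else " NOT NULL"
            cols[rel.right].append(f"{lpk} INTEGER{null} REFERENCES {rel.left}({lpk})")
        elif rel.cardinality == "M:N":
            # Many-to-many becomes a junction table keyed by both sides.
            junctions.append(
                f"CREATE TABLE {rel.left}_{rel.right} (\n"
                f"    {lpk} INTEGER NOT NULL REFERENCES {rel.left}({lpk}),\n"
                f"    {rpk} INTEGER NOT NULL REFERENCES {rel.right}({rpk}),\n"
                f"    PRIMARY KEY ({lpk}, {rpk})\n)")
        else:
            raise ValueError(f"unsupported cardinality: {rel.cardinality!r}")
    tables = [f"CREATE TABLE {t} (\n    " + ",\n    ".join(c) + "\n)" for t, c in cols.items()]
    return ";\n".join(tables + junctions) + ";"

ddl = to_ddl(ENTITIES, RELATIONSHIPS)
print(ddl)

# Check that the generated schema is valid by running it against an in-memory database.
conn = sqlite3.connect(":memory:")
conn.executescript(ddl)
tables = sorted(r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'"))
print(tables)  # ['customer', 'orders', 'orders_product', 'product']
```

Keeping cardinality and optionality explicit in the model is what lets the mapping decide mechanically where foreign keys go and whether they may be NULL.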

6. Object-oriented data modeling

Object-oriented modeling treats data as objects that combine attributes and behavior. It mirrors object-oriented programming principles such as inheritance and encapsulation. Because the model aligns closely with application code, it reduces the impedance mismatch between in-memory objects and stored data and streamlines development. It also supports complex data types and relationships, making it suitable for rich domain models.

- Good fit for: Rich domain models such as banking platforms that handle complex transactions and manage customer accounts

- Use case: Content management systems or product configuration platforms with complex entities
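Python classes can illustrate the object-oriented idea of keeping data and behavior together. The sketch assumes hypothetical `Account` and `SavingsAccount` types: encapsulation keeps the balance behind methods that enforce business rules, and inheritance lets a savings account extend the base account. Persisting such objects (through an object database or an ORM) is outside the scope of this sketch.

```python
from decimal import Decimal

class Account:
    """Data (owner, balance) and behavior (deposit, withdraw) live together in one object."""

    def __init__(self, account_id: str, owner: str, balance: Decimal = Decimal("0")):
        self.account_id = account_id
        self.owner = owner
        self._balance = balance  # encapsulated: changed only through the methods below

    @property
    def balance(self) -> Decimal:
        return self._balance

    def deposit(self, amount: Decimal) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    def withdraw(self, amount: Decimal) -> None:
        if amount <= 0 or amount > self._balance:
            raise ValueError("invalid withdrawal amount")
        self._balance -= amount

class SavingsAccount(Account):
    """Inheritance: a savings account is an Account with an extra attribute and behavior."""

    def __init__(self, account_id: str, owner: str, rate: Decimal,
                 balance: Decimal = Decimal("0")):
        super().__init__(account_id, owner, balance)
        self.rate = rate

    def add_interest(self) -> Decimal:
        # Round to cents; post only a positive amount so deposit's validation rule still holds.
        interest = (self._balance * self.rate).quantize(Decimal("0.01"))
        if interest > 0:
            self.deposit(interest)
        return interest

acct = SavingsAccount("SAV-001", "Dana", rate=Decimal("0.02"))
acct.deposit(Decimal("1000.00"))
acct.withdraw(Decimal("200.00"))
print(acct.add_interest())  # 16.00
print(acct.balance)         # 816.00
```

Because the rules live inside the objects, any code that works with an account gets the same validation, which is the alignment with application code described above.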

7. Data vault modeling

Data vault modeling separates business keys (hubs), the relationships between them (links) and descriptive attributes with their history (satellites). It emphasizes auditability and change tracking: new sources can be integrated by adding hubs, links or satellites without altering existing structures. Vault models support parallel loading and historical versioning, making them well suited to large-scale enterprise data warehouses.

- Good fit for: Enterprise data integrations that merge legacy and modern systems

- Use case: Financial reporting platforms or healthcare data repositories where audit trails are mandatory
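The following sketch shows the hub/link/satellite split on a hypothetical customer and account vault in SQLite. Hashing normalized business keys (MD5 here) is a common Data Vault 2.0 convention, but the table names, columns and loading functions are illustrative assumptions; in practice, automation tools typically generate this loading logic.

```python
import hashlib
import sqlite3

def hash_key(*business_keys: str) -> str:
    """Hash key computed from normalized business key(s)."""
    normalized = "||".join(k.strip().upper() for k in business_keys)
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()

def hash_diff(*attributes: str) -> str:
    """Fingerprint of descriptive attributes, used to detect changes cheaply."""
    return hashlib.md5("||".join(attributes).encode("utf-8")).hexdigest()

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE hub_customer (            -- hub: one row per business key, insert-only
    customer_hk TEXT PRIMARY KEY, customer_id TEXT NOT NULL UNIQUE,
    load_ts TEXT NOT NULL, record_source TEXT NOT NULL
);
CREATE TABLE hub_account (
    account_hk TEXT PRIMARY KEY, account_no TEXT NOT NULL UNIQUE,
    load_ts TEXT NOT NULL, record_source TEXT NOT NULL
);
CREATE TABLE link_customer_account (   -- link: a relationship between hubs
    link_hk TEXT PRIMARY KEY,
    customer_hk TEXT NOT NULL REFERENCES hub_customer(customer_hk),
    account_hk TEXT NOT NULL REFERENCES hub_account(account_hk),
    load_ts TEXT NOT NULL, record_source TEXT NOT NULL
);
CREATE TABLE sat_customer_details (    -- satellite: descriptive attributes over time
    customer_hk TEXT NOT NULL REFERENCES hub_customer(customer_hk),
    load_ts TEXT NOT NULL, hash_diff TEXT NOT NULL,
    name TEXT, city TEXT, record_source TEXT NOT NULL,
    PRIMARY KEY (customer_hk, load_ts)
);
""")

def load_customer(customer_id, name, city, source, load_ts):
    hk = hash_key(customer_id)
    conn.execute("INSERT OR IGNORE INTO hub_customer VALUES (?, ?, ?, ?)",
                 (hk, customer_id, load_ts, source))
    diff = hash_diff(name, city)
    latest = conn.execute(
        "SELECT hash_diff FROM sat_customer_details WHERE customer_hk = ? "
        "ORDER BY load_ts DESC LIMIT 1", (hk,)).fetchone()
    # Never update in place: a changed record becomes a new satellite row, keeping full history.
    if latest is None or latest[0] != diff:
        conn.execute("INSERT INTO sat_customer_details VALUES (?, ?, ?, ?, ?, ?)",
                     (hk, load_ts, diff, name, city, source))

def load_customer_account(customer_id, account_no, source, load_ts):
    a_hk = hash_key(account_no)
    conn.execute("INSERT OR IGNORE INTO hub_account VALUES (?, ?, ?, ?)",
                 (a_hk, account_no, load_ts, source))
    conn.execute("INSERT OR IGNORE INTO link_customer_account VALUES (?, ?, ?, ?, ?)",
                 (hash_key(customer_id, account_no), hash_key(customer_id), a_hk, load_ts, source))

load_customer("C-100", "Acme Corp", "London", "crm", "2024-01-01T00:00:00Z")
load_customer("C-100", "Acme Corp", "London", "crm", "2024-01-15T00:00:00Z")  # unchanged: no new row
load_customer("C-100", "Acme Corp", "Leeds", "crm", "2024-02-01T00:00:00Z")   # changed: new row
load_customer_account("C-100", "ACC-9", "core_banking", "2024-02-01T00:00:00Z")

history = conn.execute(
    "SELECT load_ts, city FROM sat_customer_details ORDER BY load_ts").fetchall()
print(history)  # [('2024-01-01T00:00:00Z', 'London'), ('2024-02-01T00:00:00Z', 'Leeds')]
```

Loading the same customer twice with unchanged attributes adds nothing, while the city change adds a second satellite row instead of overwriting the first, which is what gives data vaults their audit trail.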

Modeling techniques: tools and core advantages

| Technique | Common tools | Core advantages |
|---|---|---|
| Hierarchical | IBM IMS, Windows Registry, XML-based systems | Fast retrieval for fixed hierarchies |
| Network | IDMS (later CA-IDMS), TurboIMAGE | Flexible many-to-many relationships |
| Relational | MySQL, PostgreSQL, SQL Server, Oracle Database | Strong consistency and SQL support |
| Dimensional | Amazon Redshift, Snowflake, Microsoft SSAS, dbt | Efficient analytics and user-friendly schemas |
| Entity-relationship | Lucidchart, ER/Studio, Oracle Data Modeler | Clear requirement mapping and logic diagrams |
| Object-oriented | UML tools, Visual Paradigm, IBM Rational Software Architect | Seamless integration with OOP code |
| Data vault | WhereScape, VaultSpeed, Datavault Builder | Auditability and historical tracking |

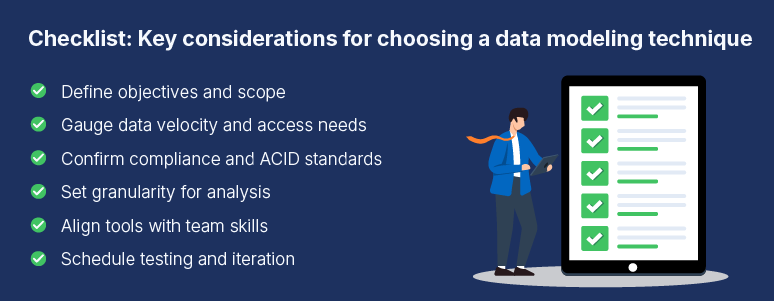

How to choose the right data modeling technique

A data model must support both your current use cases and the volume of data you expect to handle in the future. Consider these key factors when evaluating approaches:

- Purpose and scope: Define project objectives and business scope, from departmental initiatives to organization-wide programs.

- Data characteristics: Assess how quickly and how often data is created, modified, and retrieved.

- ACID compliance: Determine whether workloads need atomicity, consistency, isolation, and durability guarantees to protect data integrity, and confirm the target platform provides them.

- Data granularity: Determine the level of detail or aggregation needed for different reporting and analytical use cases.

- Technical environment: Review existing tools, platforms, and team expertise to support the selected model.

- Refinement and validation: Plan for ongoing testing, feedback cycles, and iterative adjustments after initial deployment.
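To see what ACID guarantees protect, consider atomicity in a hypothetical funds-transfer table. In this SQLite sketch, using the connection as a context manager commits the transaction on success and rolls it back on error, so a transfer that fails halfway leaves no partial update behind.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE account (id TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))")
conn.executemany("INSERT INTO account VALUES (?, ?)", [("A", 100), ("B", 50)])
conn.commit()

def transfer(src: str, dst: str, amount: int) -> None:
    # `with conn:` commits if the block finishes and rolls back if it raises.
    with conn:
        conn.execute("UPDATE account SET balance = balance + ? WHERE id = ?", (amount, dst))
        conn.execute("UPDATE account SET balance = balance - ? WHERE id = ?", (amount, src))

transfer("A", "B", 30)  # succeeds: A = 70, B = 80

try:
    # The credit to B succeeds, then the debit would push A below zero and violates CHECK.
    transfer("A", "B", 500)
except sqlite3.IntegrityError:
    pass  # the whole transfer was rolled back, including B's credit

balances = dict(conn.execute("SELECT id, balance FROM account ORDER BY id"))
print(balances)  # {'A': 70, 'B': 80}
```

Without atomicity, the failed transfer would have left B credited with money that never left A; with it, the database returns to its last consistent state.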

Outline your path to smarter data

You’ve explored seven data modeling techniques and seen how each aligns with different environments, goals, and performance needs. Now it’s time to assess your own data landscape: map out key entities, choose the approach that fits your use cases, and build a proof-of-concept model. Running a small pilot will help you validate assumptions, refine your design, and set the stage for broader adoption.

Partnering with the right experts can accelerate the journey and ensure best practices from day one. At Softweb Solutions, our data analytics services team brings deep modeling experience and proven frameworks to every engagement. Let’s connect to discuss your priorities and chart a clear path toward a scalable, insight-driven architecture.