Can machines be trained to interpret the world as intuitively as we do? As computer vision races toward new milestones in 2026, the answer is an emphatic yes.

Today, computer vision services are moving beyond simple recognition. The technology helps with real-time processing, predictive insights, and adaptive learning across diverse sectors. Behind these advancements are emerging trends that take computer vision to new heights.

This blog post explores the top trends in computer vision and machine vision that will shape 2026 and the years beyond.

1. Edge computing: Powering real-time decision making

Edge AI enables real-time processing by handling data at the source instead of sending it to a centralized cloud system. This is essential for applications requiring immediate responses, like autonomous driving, real-time surveillance, and industrial automation.

-

Enhanced processing speed

With edge computing, data is processed directly on devices. This minimizes latency and accelerates decision making in critical applications such as self-driving cars and smart city infrastructure.
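To make the latency argument concrete, the short sketch below computes how far a vehicle travels while it waits for a vision result. The 100 km/h speed and both latency figures are illustrative assumptions, not measurements of any particular cloud service or edge device.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance (metres) covered at a constant speed during a given latency."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s


# Assumed latencies for illustration only: a cloud round trip vs on-device inference.
for label, latency_ms in [("cloud round trip (assumed)", 150), ("on-device (assumed)", 20)]:
    d = distance_travelled_m(100, latency_ms)
    print(f"{label}: {d:.2f} m travelled at 100 km/h")
```

At 100 km/h, every 10 ms of extra latency adds roughly 0.28 m of travel before the system can react, which is why shaving off the network round trip matters.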

By 2026, the market for computer vision in autonomous vehicles is expected to reach $55.67 billion, growing at a CAGR of 39.47%. – ResearchAndMarkets

-

Reduced bandwidth and costs

By reducing reliance on cloud processing and storage, edge AI transmits less raw video, which lowers bandwidth needs and operational costs. This makes computer vision more efficient and sustainable.
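A rough back-of-the-envelope comparison shows where the savings come from. The sketch assumes an uncompressed 1080p, 30 fps stream and an edge device that uploads only about 10 detections of 64 bytes each per frame. All figures are assumptions. Real cameras usually compress video (for example with H.264), so actual savings are smaller than this uncompressed ratio suggests.

```python
def raw_video_mbps(width: int, height: int, fps: int, bytes_per_pixel: int = 3) -> float:
    """Uncompressed video bitrate in megabits per second."""
    return width * height * bytes_per_pixel * 8 * fps / 1e6


def metadata_mbps(detections_per_frame: int, bytes_per_detection: int, fps: int) -> float:
    """Bitrate if the edge device uploads only detection results, not pixels."""
    return detections_per_frame * bytes_per_detection * 8 * fps / 1e6


# Assumed workload: 1080p RGB at 30 fps vs ~10 detections of 64 bytes per frame
raw = raw_video_mbps(1920, 1080, 30)
meta = metadata_mbps(detections_per_frame=10, bytes_per_detection=64, fps=30)
print(f"raw: {raw:.1f} Mbit/s, metadata only: {meta:.3f} Mbit/s")
```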

-

Data privacy and security

Processing data locally strengthens privacy protections by keeping sensitive data on the device, which is crucial for sectors like healthcare and finance.

-

Scalability for large scale deployments

AI in edge computing enables scalable computer vision solutions. It distributes processing across multiple devices rather than relying on a single central server, which improves efficiency and allows widespread deployment without overloading a central system.

Edge computing offers more reliable, efficient, and secure computer vision solutions for industries where speed and data privacy are paramount.

2. AI-enhanced vision models: Deep learning’s expanding role

AI is the engine behind computer vision’s evolution, particularly through deep learning architectures such as transformers and convolutional neural networks (CNNs). These technologies make it possible to analyze and interpret complex visual data with unprecedented accuracy.

The market for AI in computer vision is expected to reach $45.7 billion by 2028, growing at a CAGR of 21.5% from 2023 to 2028. – GlobalNewsWire

-

Improved pattern recognition

New neural architectures, such as Vision Transformers, can interpret intricate patterns and features in visual data. They are useful in applications like facial recognition and anomaly detection.
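Vision Transformers begin by cutting an image into fixed-size, non-overlapping patches and treating each flattened patch as a token, much like a word in a sentence. The pure-Python sketch below shows only that patching step on a toy 4x4 grayscale image. A real ViT then applies a learned linear projection and adds position embeddings, and both are omitted here.

```python
from typing import List


def patchify(image: List[List[float]], patch: int) -> List[List[float]]:
    """Split an H x W grayscale image into non-overlapping patch x patch tiles,
    each flattened row by row into one 'token' vector."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            token = [image[r][c]
                     for r in range(top, top + patch)
                     for c in range(left, left + patch)]
            tokens.append(token)
    return tokens


img = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image, values 0..15
tokens = patchify(img, 2)
print(len(tokens), tokens[0])
```

With 2x2 patches, the 4x4 image becomes a sequence of four 4-value tokens that the transformer's attention layers can relate to one another.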

-

Increased computational efficiency

Optimization techniques and AI-optimized hardware speed up neural network inference. This enables real-time analysis and reduces energy consumption.
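One widely used optimization technique is post-training quantization, which stores weights as 8-bit integers plus a scale factor instead of 32-bit floats. This cuts weight memory by 4x and allows integer arithmetic. The sketch below shows simple symmetric, per-tensor int8 quantization on a handful of assumed weights. Production toolkits add refinements such as per-channel scales and calibration data, which are not shown.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


w = [0.5, -1.27, 0.003, 1.0]  # assumed example weights
q, scale = quantize_int8(w)
approx = dequantize(q, scale)
# Rounding error per weight is at most scale / 2, because no value needs clipping.
err = max(abs(a - b) for a, b in zip(w, approx))
print(q, err)
```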

-

Scalability across devices

Neural network advancements enable AI to scale across diverse devices from mobile phones to industrial robots. This enhances adaptability across industries.

AI-powered machine vision models will grow smarter and become more adaptable. They will create new possibilities for precision and efficiency in real-world applications.

3. Hyperspectral imaging and multispectral analysis

Hyperspectral imaging captures data across a wide range of narrow wavelength bands, including many beyond visible light, offering detailed insights. This helps in fields like precision agriculture, healthcare, and environmental monitoring.

-

High-resolution data

It detects subtle differences in materials, which is essential for precision agriculture, where assessing factors like crop health depends on such insights.
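A common way to turn spectral bands into a crop-health signal is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red). Healthy vegetation reflects strongly in near-infrared and absorbs red light. NDVI uses two broad bands, so it is a multispectral index, and hyperspectral data lets analysts choose these or many narrower bands. The reflectance values below are made-up toy numbers, not real measurements.

```python
from typing import List


def ndvi(nir: List[float], red: List[float]) -> List[float]:
    """Per-pixel NDVI from near-infrared and red reflectance.
    For non-negative reflectance the result lies in [-1, 1]; dense, healthy
    vegetation tends toward higher values."""
    out = []
    for n, r in zip(nir, red):
        denom = n + r
        out.append((n - r) / denom if denom != 0 else 0.0)  # avoid division by zero
    return out


# Toy reflectance values (illustrative only)
nir_band = [0.50, 0.30, 0.10]
red_band = [0.10, 0.20, 0.10]
print([round(v, 2) for v in ndvi(nir_band, red_band)])
```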

-

Medical and diagnostic applications

Hyperspectral imaging aids in identifying tissue abnormalities and early disease signs with high accuracy.

The computer vision in healthcare market was valued at USD 1 billion in 2023 and is expected to grow at a CAGR of 34.3% between 2024 and 2032. – Global Market Insights

-

Environmental monitoring

Industries use it to assess air and water quality, helping manage environmental impact effectively.

The use of hyperspectral and multispectral imaging will expand across diverse industries. It will enhance decision-making capabilities with rich and actionable data insights.

4. Neuromorphic vision sensors

Neuromorphic vision sensors mimic human vision by capturing only changes in a scene rather than full frames. This approach is ideal for applications that need rapid visual processing, such as robotics and autonomous systems.

The surveillance and security application of computer vision is predicted to grow at a CAGR of 22% through the end of 2028. – Cloudfront

-

Event-based processing

By logging only changes, neuromorphic sensors improve processing speed and reduce power consumption.
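Event cameras typically emit an event for a pixel when its log intensity changes by more than a contrast threshold. Each event is tagged with a polarity that marks the pixel as brighter or darker. The sketch below emulates this behavior from two ordinary frames, similar in spirit to event-camera simulators. Real sensors work asynchronously per pixel rather than on frame pairs, and the 0.2 threshold is an assumption.

```python
import math
from typing import List, Tuple

Event = Tuple[int, int, int]  # (row, col, polarity: +1 brighter, -1 darker)


def frame_to_events(prev: List[List[float]], curr: List[List[float]],
                    threshold: float = 0.2, eps: float = 1e-3) -> List[Event]:
    """Emit an event only where log intensity changed by more than `threshold`."""
    events = []
    for r, (row_p, row_c) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(row_p, row_c)):
            delta = math.log(q + eps) - math.log(p + eps)  # eps guards log(0)
            if delta > threshold:
                events.append((r, c, +1))
            elif delta < -threshold:
                events.append((r, c, -1))
    return events


prev = [[100, 100, 100], [100, 100, 100]]
curr = [[100, 150, 100], [100, 100, 60]]
events = frame_to_events(prev, curr)
print(events, f"{len(events)} of 6 pixels reported")
```

Static pixels produce no output at all. This is where the speed and power savings come from.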

-

Lower energy consumption

Selective data capture enables these sensors to run efficiently, a key benefit for wearable devices and drones.

-

Enhanced real-time responsiveness

These sensors allow autonomous systems to react almost instantly, which makes them ideal for robotics and smart infrastructure.

Neuromorphic sensors will redefine the capabilities of vision systems, particularly in high-speed environments. They will offer energy-efficient, real-time solutions across industries.

5. 3D computer vision and LiDAR integration

3D computer vision, often using LiDAR technology, is essential for spatial awareness and mapping. It is particularly valuable in sectors like automotive, logistics, and urban planning.

-

Detailed spatial mapping

LiDAR offers precise 3D mapping for applications such as autonomous vehicles, which rely on accurate environmental models.
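Each LiDAR return is typically reported as a range plus the beam's azimuth and elevation angles. Converting every return to Cartesian coordinates produces the point cloud used for mapping. The sketch assumes a sensor frame with x forward, y left, and z up, and measures azimuth from x toward y. Actual sensors and SDKs define their own conventions, so check the vendor documentation.

```python
import math
from typing import Tuple


def lidar_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one LiDAR return (range, azimuth, elevation) to sensor-frame x, y, z.
    Assumed convention: x forward, y left, z up; azimuth measured from x toward y."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


x, y, z = lidar_to_xyz(10.0, 90.0, 0.0)  # a return 10 m away, directly to the left
print(round(x, 3), round(y, 3), round(z, 3))
```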

-

Enhanced object detection

3D vision enables better recognition of complex scenes, increasing safety in autonomous navigation.

-

Applications in AR and VR

It’s also foundational for augmented and virtual reality, powering immersive and interactive experiences in retail and entertainment.

According to 2023 research by the AI Accelerator Institute, merged reality (7%) and facial recognition (6%) are among the most used trends by share.

LiDAR and 3D vision integration will continue to revolutionize industries requiring precise spatial awareness, from autonomous navigation to immersive experiences in AR/VR.

6. Event-based vision

Event-based vision processes only the dynamic changes in a scene. This makes it especially useful for applications that must handle high-speed, rapidly changing environments.

-

High-speed adaptability

Event-based technology is ideal for fast-paced scenarios like sports analytics and automated manufacturing.

-

Efficient data handling

By capturing only key movements, it reduces data overload, improving processing speeds.

-

Optimized for real-time responses

Event-based vision is invaluable for applications that need instant feedback, such as security systems and autonomous drones.

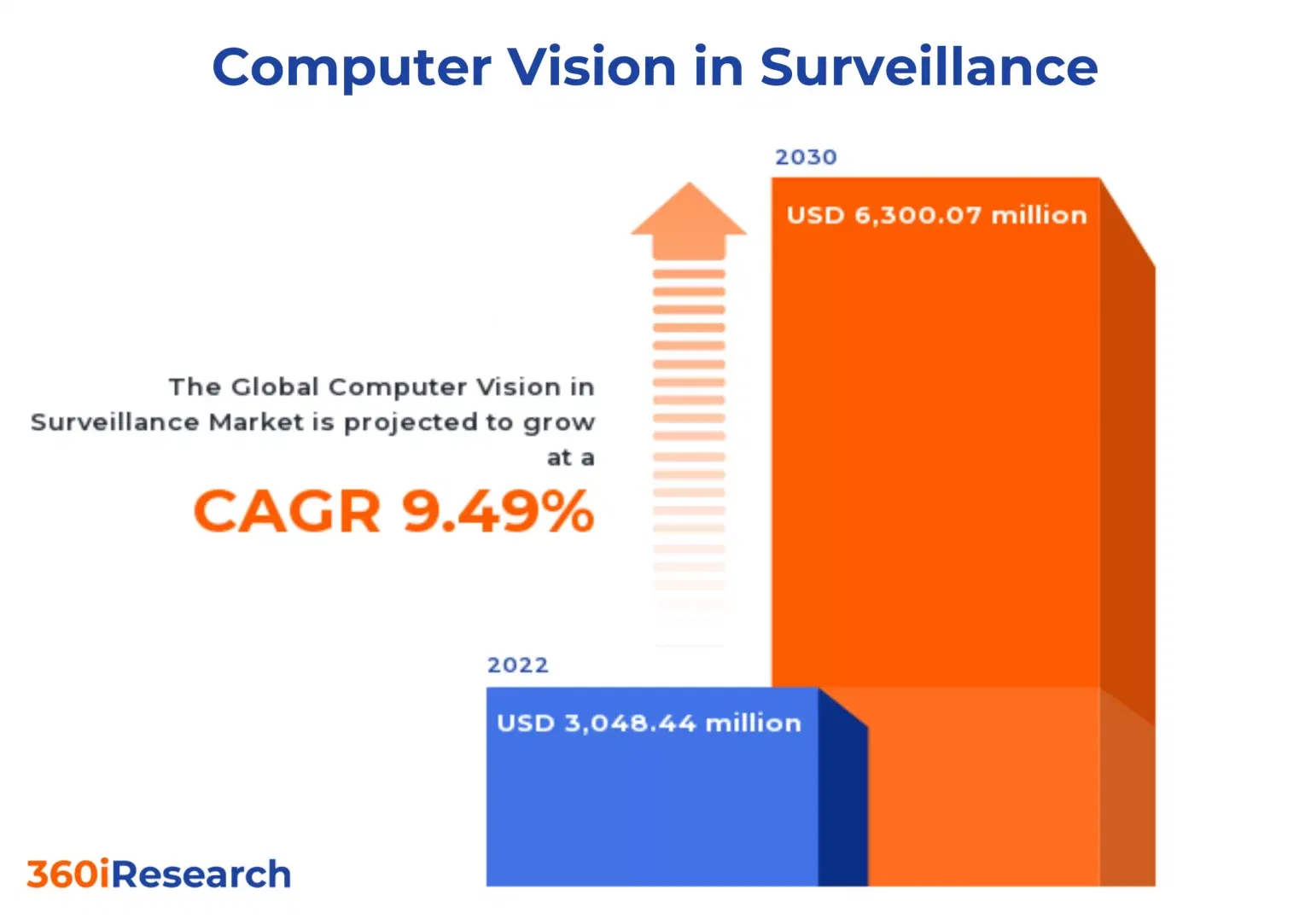

Source: 360iResearch

Event-based vision will become indispensable in industries where fast, efficient data processing is essential. It will provide real-time insights for dynamic environments.

7. Generative AI services for computer vision

Generative AI in computer vision involves using algorithms to create or synthesize new images and visual data. This can be used in sectors such as media, retail, and healthcare. GenAI enables the creation of realistic simulations and synthetic datasets.

According to 2023 research by the AI Accelerator Institute, generative AI is one of the most used trends, with a 76% share.

-

Data augmentation and synthesis

Generative AI can produce large volumes of synthetic data to train computer vision models. This helps in addressing data scarcity in sensitive fields like healthcare.

-

Image and video generation

GenAI can create realistic animations, backgrounds, and even entire scenes, reducing production costs.

-

Applications in simulations and testing

In automotive and robotics, generative AI helps simulate challenging conditions, which improves the reliability of autonomous systems.

Generative AI will enhance creative and practical applications in computer vision, from content generation to improving the accuracy of vision models with synthetic data.

8. Multimodal AI models

Multimodal AI integrates data from multiple sources, such as text, images, and audio. It helps produce more holistic and accurate results. This approach is especially useful in enhancing AI’s contextual understanding and enabling more sophisticated applications.

-

Enhanced contextual understanding

By combining text, visual, and audio data, multimodal AI improves the ability to interpret complex scenarios. This is especially useful in areas such as medical diagnostics, where several types of data must be considered together.
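One of the simplest ways to combine modalities is late fusion. In late fusion, each modality's model outputs class probabilities and a weighted average produces the final decision. Many modern multimodal systems fuse earlier, at the feature level, so this sketch shows only the most basic variant. The class names, probabilities, and weights below are hypothetical.

```python
from typing import Dict


def late_fusion(scores: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-modality class probabilities (late fusion)."""
    total_w = sum(weights[m] for m in scores)
    fused: Dict[str, float] = {}
    for modality, probs in scores.items():
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + weights[modality] * p / total_w
    return fused


# Hypothetical outputs from an image model and a text (clinical notes) model
scores = {
    "image": {"normal": 0.55, "abnormal": 0.45},
    "text": {"normal": 0.20, "abnormal": 0.80},
}
fused = late_fusion(scores, weights={"image": 0.6, "text": 0.4})
print(max(fused, key=fused.get), {k: round(v, 2) for k, v in fused.items()})
```

Here the image alone leans slightly toward "normal", but the text evidence tips the combined result toward "abnormal". This is the kind of contextual correction that multimodal input allows.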

-

Cross-platform applications

Multimodal AI enables seamless transitions between devices and platforms, from smartphones to industrial systems, making it highly versatile.

-

Efficient computer-human understanding

This technology allows for applications such as AI assistants that can process both voice and visual commands, or retail platforms that recommend products based on images and reviews.

-

Cross-modal retrieval and analysis

In fields like e-commerce and social media, multimodal AI enables users to search or organize information using various input modes. For example, a user could search a database using both keywords and images to find visually similar products. This helps in streamlining the search process and improving customer satisfaction.
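Keyword-plus-image search is commonly built by embedding both queries and catalog items into a shared vector space, as CLIP-style models do, and then ranking items by cosine similarity. The sketch blends a text-query score and an image-query score with an assumed weight `alpha`. The three-dimensional vectors are hypothetical stand-ins. Real embeddings come from a trained model and have hundreds of dimensions.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search(text_vec: List[float], image_vec: List[float],
           catalog: Dict[str, List[float]], alpha: float = 0.5) -> List[str]:
    """Rank catalog items by a blend of text-query and image-query similarity."""
    scored = {
        item: alpha * cosine(text_vec, vec) + (1 - alpha) * cosine(image_vec, vec)
        for item, vec in catalog.items()
    }
    return sorted(scored, key=scored.get, reverse=True)


# Toy embeddings standing in for a shared text-image embedding model (hypothetical)
catalog = {
    "red sneaker": [0.9, 0.1, 0.0],
    "red handbag": [0.7, 0.0, 0.7],
    "blue sneaker": [0.1, 0.9, 0.1],
}
print(search(text_vec=[1.0, 0.0, 0.0], image_vec=[0.8, 0.2, 0.1], catalog=catalog))
```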

44% of retailers are using AI-powered computer vision for better customer experience. – PwC

9. Designing vision systems with privacy at the core

Privacy has become a defining factor in how computer vision systems are designed. Visual data often contains information that identifies people or private locations, so organizations now need approaches that respect both analysis goals and individual rights.

-

Anonymization for safer analysis

Visual data frequently contains identifying details that serve no analytical purpose but create legal and ethical exposure. A traffic monitoring camera, for example, needs vehicle count and flow patterns, not the faces of pedestrians.

-

Anonymization techniques reduce this exposure by:

- Blurring or masking faces before storage

- Reducing identifiable details unless required for a compliance-driven task

- Removing background elements that reveal private locations
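Once a face detector returns bounding boxes, masking before storage can be as simple as overwriting those regions. The detector itself is not shown and is assumed. The pure-Python sketch works on a grayscale frame stored as nested lists, and the `(top, left, height, width)` box format is a hypothetical choice. A solid fill is used because it leaves nothing of the original pixels to recover.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (top, left, height, width): assumed detector output format


def mask_regions(image: List[List[int]], boxes: List[Box], fill: int = 0) -> List[List[int]]:
    """Return a copy of a grayscale image with each box overwritten by `fill`.
    Intended to run before frames are written to storage."""
    out = [row[:] for row in image]  # copy so the caller's frame is left untouched
    h, w = len(out), len(out[0])
    for top, left, bh, bw in boxes:
        # Clip each box to the image bounds before filling
        for r in range(max(0, top), min(h, top + bh)):
            for c in range(max(0, left), min(w, left + bw)):
                out[r][c] = fill
    return out


frame = [[9] * 5 for _ in range(4)]
faces = [(1, 1, 2, 2)]  # hypothetical detector output
for row in mask_regions(frame, faces):
    print(row)
```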

-

Compliance supported by RegTech tools

Regulations across major markets now require organizations to demonstrate how AI systems collect consent, make decisions, and move data across borders. Manual tracking of such requirements becomes impractical as vision deployments scale across regions and use cases. RegTech platforms reduce this operational complexity by embedding consent management, decision audit trails, and data flow controls directly into vision pipelines.
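One generic way a vision pipeline can keep a tamper-evident decision audit trail is to hash-chain the log records, so that each record's hash covers both its own content and the previous record's hash. This is a general technique, not the API of any particular RegTech product. The event fields below are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(event: Dict, prev_hash: str) -> str:
    body = {"event": event, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(log: List[Dict], event: Dict) -> Dict:
    """Append an audit record whose hash covers the event and the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(record)
    return record


def verify(log: List[Dict]) -> bool:
    """Recompute every hash; editing any earlier record breaks the chain."""
    prev_hash = GENESIS
    for rec in log:
        if rec["prev_hash"] != prev_hash or rec["hash"] != _digest(rec["event"], prev_hash):
            return False
        prev_hash = rec["hash"]
    return True


audit_log: List[Dict] = []
# Hypothetical event fields
append_record(audit_log, {"camera": "cam-07", "decision": "person_detected", "consent_basis": "site_signage"})
append_record(audit_log, {"camera": "cam-07", "decision": "frame_blurred", "region": "EU"})
print(verify(audit_log))
audit_log[0]["event"]["decision"] = "none"  # simulate tampering
print(verify(audit_log))
```

The chain exposes edits to individual records, but anyone able to rewrite the whole log could recompute every hash. For this reason, systems often store the latest hash somewhere independent.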

-

Synthetic data for secure model training

Real-world datasets often include sensitive information or lack the variations needed for robust training. Relying only on such data creates both privacy risks and model limitations. Synthetic data generated through controlled simulations or generative models provides a safer alternative because it need not depict real individuals, and rare situations can still be represented.

Organizations that embed anonymization, RegTech, and synthetic data into their workflows early will face fewer regulatory obstacles and earn stronger user trust as adoption scales.

10. Build vs. buy: Choosing the right adoption path for computer vision

Teams exploring computer vision balance two priorities: achieving quick wins to validate the idea and building deeper control for long-term use. The choice between ready-made solutions and custom development becomes clearer as the organization evaluates cost, scalability, and ownership expectations.

-

APIs for fast experimentation

Many teams prefer buying ready-made APIs when they need a quick way to explore a use case or demonstrate early results. Immediate deployment, minimal setup, and predictable pricing make APIs useful for testing ideas without heavy investment.

-

Ready-made APIs are most practical when:

- Use cases involve common tasks like object detection, text recognition, or face identification

- Internal ML expertise is limited or still developing

- Budgets restrict investing in dedicated infrastructure

- Release timelines require a working solution within weeks rather than months

-

Custom models for specific requirements

General-purpose APIs perform well for common tasks but may miss subtle variations in specialized domains. A quality inspection system for electronics manufacturing, for example, must detect defect types that generic models have not been trained to interpret.

-

Custom development becomes the stronger choice when:

- Objects or conditions are unique to the organization

- Accuracy requirements exceed what standard models deliver

- Data sensitivity prevents sending images to third-party services

- Processing volumes reach a scale where API costs exceed in-house infrastructure
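The last criterion comes down to a break-even calculation. It finds the monthly volume n at which the API bill exceeds the in-house cost: api_price × n > fixed_cost + marginal_cost × n. The sketch uses hypothetical prices: a per-image API fee, a fixed monthly in-house cost covering infrastructure and staff, and a small marginal in-house cost per image. Substitute real quotes before drawing conclusions.

```python
import math


def break_even_images_per_month(api_price_per_image: float,
                                inhouse_fixed_per_month: float,
                                inhouse_cost_per_image: float) -> int:
    """Smallest monthly volume n at which in-house processing is cheaper than a
    pay-per-call API, i.e. api * n > fixed + marginal * n."""
    saving_per_image = api_price_per_image - inhouse_cost_per_image
    if saving_per_image <= 0:
        raise ValueError("in-house marginal cost must be below the API price")
    return math.floor(inhouse_fixed_per_month / saving_per_image) + 1


# Hypothetical figures: $0.0015 per API call vs $3,000/month fixed + $0.0002 per image in-house
n = break_even_images_per_month(0.0015, 3000.0, 0.0002)
print(f"In-house becomes cheaper above roughly {n:,} images per month")
```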

-

Blended strategies for scalable growth

The decision between APIs and custom models does not need to be final at the beginning of the project. Many organizations start with APIs to prove value and gather real performance data, then invest in custom components only where gaps justify the effort.

-

A phased approach works well because:

- APIs generate labeled data that later trains custom models

- Teams learn which tasks need specialization before committing resources

- Hybrid architectures allow APIs for routine work and custom models for high-value edge cases
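A hybrid architecture can route each image according to the generic API's confidence. Confident results are accepted as-is. Low-confidence cases go to a specialized custom model and are queued for later labeling, so they can become training data. In the sketch, the API wrapper and the custom model are hypothetical stubs, and the 0.8 threshold is an assumption to tune against real performance data.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Prediction = Tuple[str, float]  # (label, confidence)


@dataclass
class HybridRouter:
    api: Callable[[bytes], Prediction]           # hypothetical vendor API wrapper
    custom_model: Callable[[bytes], Prediction]  # hypothetical in-house model
    threshold: float = 0.8                       # assumed confidence cut-off
    review_queue: List[bytes] = field(default_factory=list)  # hard cases to label later

    def predict(self, image: bytes) -> Prediction:
        label, conf = self.api(image)
        if conf >= self.threshold:
            return label, conf            # routine case: trust the generic API
        self.review_queue.append(image)   # keep the hard case for future labeling
        return self.custom_model(image)   # specialized model handles the edge case


# Stub models for illustration only
router = HybridRouter(
    api=lambda img: ("scratch", 0.95) if img == b"easy" else ("scratch", 0.40),
    custom_model=lambda img: ("solder_bridge", 0.88),
)
print(router.predict(b"easy"), router.predict(b"hard"), len(router.review_queue))
```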

A thoughtful adoption path lets vision capabilities grow alongside business needs, avoiding both premature over-investment and the limitations of staying locked into generic solutions.

As multimodal AI models advance, they will redefine how AI systems interact with human users. They will create more intelligent, efficient, and versatile applications across industries.

Leading the way in AI-powered vision systems

The landscape of computer vision and machine vision will be reshaped by these groundbreaking trends of 2026. The convergence of these innovations promises new possibilities across industries. Organizations that adopt these technologies can drive efficiency and precision, redefine user experiences, and pave the way for smarter, more intuitive systems.

At Softweb Solutions, we understand the profound impact of these emerging technologies. Our computer vision experts ensure that you leverage these advancements for competitive advantage. Integrate these powerful technologies into your operations by partnering with us. Let’s connect to discuss your unique business case.

FAQs

1. What are the future predictions for the vision markets in the next five years?

Computer vision trends in the next five years will focus on making vision systems faster, more context-aware, and easier to deploy at scale. Edge AI will become the default for many real-time use cases, with more on-device inference and tighter co-optimization of hardware and models. Vision models will also move from single-task detection to multimodal and agent-assisted workflows that combine images with text, audio, and sensor data to enhance decision making.

2. How is AI impacting the growth of the machine vision industry?

AI in machine vision is improving the way systems detect, classify, and localize objects by learning visual patterns instead of relying on manually defined rules. Deep learning and transformer-based architectures are improving performance on complex tasks like defect detection, fine-grained classification, and anomaly detection where traditional methods struggle. As a result, machine vision systems are becoming more flexible and easier to deploy across different products, lighting conditions, and environments.

3. Why is edge computing important for modern computer vision systems?

Edge computing is essential for modern computer vision systems because it enables real-time data processing at the source rather than relying on distant cloud servers. Handling visual data locally greatly reduces latency, which is critical for autonomous vehicles, industrial machinery, and security systems where split-second decisions are required for safe and accurate operation.

4. What is multimodal AI and how does it relate to computer vision?

Multimodal AI is the ability of a model to learn from and reason across multiple data types, such as images, text, audio, and sensor signals. In computer vision, it helps systems connect what they see with what they read or hear, which improves context for tasks like visual search, diagnostics, and assistant-style experiences. As a result, vision becomes less about detection alone and more about decision support.

5. Which industries are being transformed by computer vision in 2026?

Computer vision is transforming multiple industries in 2026. Healthcare uses it for diagnostics and early disease detection through imaging analysis. Automotive deploys it for autonomous navigation and driver assistance systems. Retail uses AI-powered vision for customer experience and inventory management. Manufacturing employs visual inspection for quality control. Logistics benefits from automated sorting and warehouse optimization.

6. What tools or libraries are recommended for feature extraction in machine learning?

Common tools and libraries for feature extraction in machine learning include scikit-learn, which provides PCA, t-SNE, and other feature tools; TensorFlow and PyTorch for deep learning-based extraction; OpenCV for traditional image feature algorithms; tsfresh for automated time series feature extraction; Featuretools for automated feature engineering on relational data; and pandas for data transformation and basic feature creation.

7. What are the latest advancements in computer vision for logistics?

Computer vision in logistics is advancing through higher-accuracy parcel identification, automated sorting, and warehouse perception for robots and safety systems. Recent work also groups progress around mobile robots, drones, camera networks, forklift automation, robotic arms, and smart glasses for warehouse execution. The result is faster throughput and fewer manual verification steps in high-volume operations.