RAG as a service

We connect your LLMs with external knowledge bases to deliver accurate and context-aware responses that scale with your business needs.


We specialize in building solutions that seamlessly connect large language models with structured and unstructured data. Our RAG-as-a-Service enables smarter search, accurate summarization, and reliable content generation. We develop algorithms that combine advanced retrieval methods, semantic search, and REFRAG acceleration, enabling your LLMs to deliver highly relevant and accurate responses at scale. As a leading RAG development company, we stand out for turning complex AI challenges into scalable solutions that help organizations gain insights, improve decision-making, and stay ahead of the competition. Our RAG services include:

Our team specializes in structuring external data inputs for optimal retrieval. We ensure LLMs and RAG models retrieve accurate data and generate precise and context-aware responses.

Our RAG developers design high-performance retrieval systems that deliver information quickly and accurately. By combining intelligent query processing with semantic search, we ensure your applications retrieve the most relevant information.

Our team creates information retrieval algorithms that improve how your systems search and retrieve data. We apply semantic embeddings and context-aware indexing to enable faster searches and smarter results.
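To illustrate how embedding-based indexing and ranking fit together, here is a minimal in-memory sketch. The `embed` function is a hypothetical stand-in that builds a term-frequency vector; it captures word overlap rather than meaning, so a real deployment would swap in an embedding model and a vector database. Ranking by cosine similarity works the same way in both cases.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a term-frequency vector.

    It only captures shared words; a production system would call a real
    embedding model here to capture semantic similarity.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorIndex:
    """Minimal in-memory index that ranks stored documents by cosine similarity."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        # Embed once at indexing time so each query only embeds the query text.
        self._items.append((doc_id, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


index = VectorIndex()
index.add("refund-policy", "Refunds are issued within 14 days of purchase.")
index.add("shipping", "Orders ship within two business days.")
print(index.search("how many days for refunds", k=1))
```

Because new documents only need to be embedded and added to the index, the knowledge base can grow without retraining the model.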

We deliver enterprise-grade RAG model integration by combining semantic search with vector databases. We use advanced retrieval mechanisms, knowledge base alignment, and REFRAG integration so that models respond faster and more accurately.

We implement LLM prompt augmentation systems that inject relevant context from retrieved data into prompts, ensuring precise, domain-specific responses. Our team integrates real-time retrieved data, refines prompt templates, and embeds contextual markers for accuracy.
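The sketch below shows one way to inject retrieved passages into a prompt with numbered source markers. The function name, the instruction wording, and the character budget are illustrative assumptions, not a fixed template.

```python
def build_augmented_prompt(
    question: str,
    passages: list[tuple[str, str]],
    max_context_chars: int = 2000,
) -> str:
    """Inject retrieved passages into a prompt, each tagged with a numbered source marker.

    `passages` is a list of (source_id, text) pairs, assumed sorted by relevance.
    `max_context_chars` is an illustrative budget that keeps the prompt bounded.
    """
    blocks: list[str] = []
    used = 0
    for i, (source_id, text) in enumerate(passages, start=1):
        block = f"[{i}] (source: {source_id}) {text.strip()}"
        if used + len(block) > max_context_chars:
            break  # stop before the context exceeds the budget
        blocks.append(block)
        used += len(block)
    context = "\n".join(blocks) if blocks else "(no relevant context found)"
    return (
        "Answer the question using only the context below. "
        "Cite sources by their bracketed number. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


prompt = build_augmented_prompt(
    "What is the refund window?",
    [("policy.pdf", "Refunds are issued within 14 days of purchase.")],
)
print(prompt)
```

Numbering each passage lets the model cite its sources, which makes answers easier to check against the retrieved data.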

We focus on ongoing evaluation and improvement to keep your RAG models reliable. Our team continuously evaluates model performance, analyzes retrieval accuracy, and integrates user feedback for improvements.
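Retrieval accuracy is commonly measured against a small labeled set of queries. The sketch below computes recall@k and mean reciprocal rank (MRR), two standard retrieval metrics; the data format (ranked document IDs paired with a set of relevant IDs) is an assumption for illustration.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(runs: list[tuple[list[str], set[str]]], k: int = 5) -> dict[str, float]:
    """Average recall@k and mean reciprocal rank (MRR) over labeled queries.

    Each run pairs the ranked document IDs returned for one query with the
    set of IDs a reviewer marked as relevant for that query.
    """
    if not runs:
        return {f"recall@{k}": 0.0, "mrr": 0.0}
    n = len(runs)
    return {
        f"recall@{k}": sum(recall_at_k(r, rel, k) for r, rel in runs) / n,
        "mrr": sum(reciprocal_rank(r, rel) for r, rel in runs) / n,
    }


runs = [
    (["d3", "d1", "d7"], {"d1"}),  # relevant doc found at rank 2
    (["d2", "d5"], {"d9"}),        # relevant doc not retrieved
]
print(evaluate(runs, k=2))  # {'recall@2': 0.5, 'mrr': 0.25}
```

Tracking these numbers over time shows whether changes to chunking, embeddings, or filters actually improve retrieval.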

We deliver consulting and support services that align retrieval technology with your business goals. From architecture planning and knowledge base refinement to scalability strategies, our consultants ensure consistent accuracy and efficiency.

Our team builds domain-specific knowledge bases, helping organizations convert scattered data into structured, retrievable assets. The process includes data curation, knowledge graph design, and integration with retrieval pipelines to maximize AI accuracy.

Our team develops multimodal RAG algorithms that integrate text, images, and audio into retrieval pipelines to create context-aware responses. Using NLP, computer vision, and speech recognition, our engineers create pipelines that expand search capabilities and improve accuracy.

Our team develops domain-focused Retrieval-Augmented Generation (RAG) systems, tailoring outputs to the language, compliance, and workflows of your industry. From healthcare diagnostics to e-commerce personalization, our retrieval algorithms are designed to process domain knowledge with precision.

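Our RAG solutions use Meta’s REFRAG framework, which compresses retrieved context and expands only the most relevant passages during decoding, so models spend less time on irrelevant data and respond faster. By integrating REFRAG, your organization can turn data into measurable business value.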

Connecting value chain across technology and business

Our team helps unify scattered data into a single, searchable knowledge hub, making information easy to search and access. This streamlined approach eliminates time spent on manual searches and delivers consistent insights that support better engagement and faster decision-making across teams.

Our RAG-powered AI ensures precise and fact-driven insights by retrieving real-time, domain-specific data. By reducing errors and eliminating irrelevant outputs, our service increases user confidence, enabling your organization to adopt RAG solutions that consistently deliver trustworthy and relevant insights.

We enhance large language models with RAG to produce context-rich and accurate outputs. By embedding real-world context into every interaction, our service helps businesses engage users more effectively, improve support interactions, and drive better outcomes through reliable, knowledge-driven AI responses.

Our team enhances user experience by delivering case-specific and context-aware responses. By analyzing user behavior and preferences, our solutions provide personalized responses, streamline interactions, and ensure accuracy. This fosters smooth, engaging conversations that enhance customer satisfaction and build long-term trust.

Our team helps you cut down training and infrastructure costs with RAG. By reducing repetitive tasks and minimizing errors through automation, we optimize resources and improve efficiency. Moreover, we ensure your systems deliver value without increasing operational or maintenance expenses.

Our team helps you scale faster by eliminating repeated model retraining. This approach accelerates deployment and ensures flexibility and sustainable efficiency, enabling your business to adapt to evolving needs with ease and precision.

Our team helps you implement domain-specific RAG solutions, ensuring accuracy, scalability, and real business impact.



Our Retrieval-Augmented Generation (RAG) services transform industry data into business value. We integrate unstructured data sources, provide real-time insights, and enable smarter decision-making. Businesses can enhance operations, reduce errors, improve compliance, personalize customer experiences, and scale growth with confidence.

We help manufacturers connect production data with external knowledge to improve decision-making, speed, and consistency. This enhances efficiency, reduces errors, and ensures agile production at scale.

We support logistics providers with real-time intelligence and agility by aligning fleets, warehouses, and vendors. By connecting external and enterprise data, RAG-powered systems anticipate demand shifts, route bottlenecks, and compliance needs with precision.

We help semiconductor fabs gain agility, reduce cycle time, improve efficiency, and strengthen process control by embedding RAG into workflows. Our teams integrate process data, equipment logs, and inspection reports into a unified knowledge layer, enabling engineers to resolve issues faster and avoid costly delays.

We help telecom operators modernize operations, automate service management, and improve customer loyalty through RAG-enhanced solutions. By linking subscriber data, billing records, and infrastructure logs, we deliver actionable insights that reduce churn and drive operational efficiency.

We deliver finance-focused RAG services that mitigate financial risk, monitor transactions, and improve portfolio performance. By linking structured and unstructured financial data, we provide contextual insights that enhance operational efficiency, reduce risk, and improve client trust.

Our RAG experts integrate retrieval pipelines, optimize knowledge bases, and accelerate LLMs with REFRAG, enabling businesses to scale faster with accurate, real-time intelligence.

As a leading RAG development company, we design processes that align every step with business outcomes. From data preparation to fine-tuning, our process ensures accurate, efficient, and future-proof retrieval-augmented generation systems.

Our process starts with understanding your goals and available data. We collaborate with your team to define objectives, gather the right data sources, and ensure RAG-as-a-Service delivers measurable and reliable results.

We ensure your data is clean, organized, and aligned with your objectives, laying the groundwork for scalable results from the start. This step ensures your AI receives the right context for accurate retrieval and meaningful content generation.
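Data preparation usually includes cleaning extracted text and splitting it into overlapping chunks sized for retrieval. This sketch assumes word-based chunks; the default `chunk_size` and `overlap` values are arbitrary placeholders to tune per corpus.

```python
import re


def clean_text(raw: str) -> str:
    """Replace stray control characters and collapse runs of whitespace."""
    text = re.sub(r"[\x00-\x08\x0b-\x1f\x7f]", " ", raw)
    return re.sub(r"\s+", " ", text).strip()


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words.

    The overlap helps preserve context for text that falls near a chunk boundary.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


doc = clean_text("w0 w1 w2\x00 w3\n\nw4 w5 w6 w7 w8 w9")
print(chunk_words(doc, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Smaller chunks make matches more precise, while larger ones carry more context per passage; the right balance depends on the documents and queries.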

We prepare robust retrieval pipelines that filter, structure, and deliver accurate external data to your LLM. This helps your business access precise, relevant information quickly while eliminating irrelevant data and improving decision-making.
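A retrieval pipeline typically filters candidates before they reach the LLM. This sketch applies a score threshold, metadata filters, and duplicate removal; the `Candidate` structure and the default threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Candidate:
    """A passage returned by the retriever (hypothetical structure)."""
    doc_id: str
    text: str
    score: float  # similarity to the query; higher is better
    metadata: dict = field(default_factory=dict)


def filter_candidates(
    candidates: list[Candidate],
    min_score: float = 0.3,
    required: Optional[dict] = None,
    top_k: int = 5,
) -> list[Candidate]:
    """Drop weak, out-of-scope, and duplicate passages before they reach the LLM."""
    required = required or {}
    seen: set[str] = set()
    kept: list[Candidate] = []
    for cand in sorted(candidates, key=lambda c: c.score, reverse=True):
        if cand.score < min_score:
            break  # sorted descending, so every remaining candidate is weaker
        if any(cand.metadata.get(key) != value for key, value in required.items()):
            continue  # fails a metadata filter, e.g. language or department
        normalized = " ".join(cand.text.lower().split())
        if normalized in seen:
            continue  # duplicate of a higher-scoring passage
        seen.add(normalized)
        kept.append(cand)
        if len(kept) == top_k:
            break
    return kept


results = filter_candidates(
    [
        Candidate("a", "Refund policy", 0.9, {"lang": "en"}),
        Candidate("b", "refund  policy", 0.8, {"lang": "en"}),
        Candidate("c", "Politique de remboursement", 0.85, {"lang": "fr"}),
        Candidate("d", "Shipping times", 0.1, {"lang": "en"}),
    ],
    required={"lang": "en"},
)
print([c.doc_id for c in results])  # ['a']
```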

Our team connects LLMs to your RAG system through structured data pipelines. From initial setup to ongoing optimization, we ensure your RAG system handles data reliably and delivers accurate, context-aware responses.

Continuous tuning keeps your RAG system aligned with your goals. We refine prompts and source data and monitor outputs to improve response accuracy, so the system becomes smarter and more effective over time.

We stay by your side after launch. Our dedicated team provides round-the-clock support, regular updates, and proactive improvements, so your system keeps evolving alongside your business needs.

10+ years of experience in building AI-driven knowledge systems for finance, healthcare, manufacturing, and technology enterprises

60+ AI specialists including LLM engineers, vector database experts, and retrieval optimization architects

Expertise in integrating RAG workflows with Azure OpenAI, AWS Bedrock, Google Gemini, and custom on-prem setups for secure, scalable deployments

Well equipped with cutting-edge RAG solutions that boost the efficiency of LLMs

Proven success in real-world use cases such as compliance copilots, domain-specific Q&A, enterprise knowledge retrieval, and intelligent document search

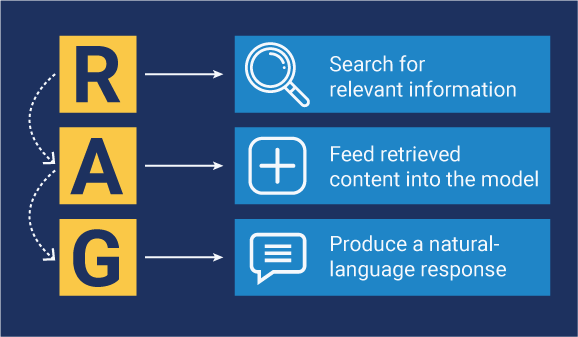

RAG is an AI approach that combines large language models with a retrieval mechanism to provide context-aware outputs. It retrieves relevant information from external data sources before generating responses. This ensures answers are accurate, up-to-date, and grounded in real knowledge.
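The retrieve-then-generate order can be shown in a few lines. Here `retrieve` ranks documents by shared words as a placeholder for vector search, and `echo_model` is a hypothetical stand-in for an LLM call that simply returns the prompt it receives.

```python
from typing import Callable


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by how many query words they share (placeholder for vector search)."""
    q = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda doc_id: len(q & set(corpus[doc_id].lower().split())),
        reverse=True,
    )
    return ranked[:k]


def answer(query: str, corpus: dict[str, str], generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieve first, then generate: the model sees the query plus retrieved context."""
    doc_ids = retrieve(query, corpus, k)
    context = "\n".join(f"- {corpus[d]}" for d in doc_ids)
    prompt = f"Context:\n{context}\n\nQuestion: {query}"
    return generate(prompt)


def echo_model(prompt: str) -> str:
    # Hypothetical LLM stand-in; a real deployment would send `prompt` to a model API.
    return prompt


corpus = {
    "hr-leave": "employees receive 25 days of annual leave",
    "it-vpn": "connect to the vpn before accessing internal tools",
}
print(answer("how many days of annual leave", corpus, echo_model, k=1))
```

Keeping the generator as a plain callable means the same flow works with any model provider.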

RAG-as-a-Service is a managed solution that combines large language models (LLMs) with real-time data retrieval. It delivers retrieval-augmented generation capabilities via cloud-based platforms or APIs. Moreover, it allows businesses to access scalable, real-time AI without building the infrastructure in-house. The service handles data retrieval, model integration, and performance optimization.

By integrating external data retrieval, RAG provides language models with relevant context. This reduces hallucinations and improves response accuracy. It also allows the model to handle larger, domain-specific knowledge efficiently.

Yes, RAG solutions can be tailored for industry-specific data, workflows, and regulatory standards. Customization includes selecting data sources, fine-tuning retrieval algorithms, and adjusting output formats. This ensures the system meets specific business needs.

LLMs generate text based on training data alone, while RAG combines LLMs with a retrieval system to access real-time external knowledge. RAG improves accuracy, relevance, and context-awareness. Essentially, RAG augments the LLM with up-to-date information.

A common example is an AI-powered enterprise knowledge assistant. It retrieves company documents or manuals to answer employee queries accurately. Another example is a domain-specific chatbot delivering real-time customer support.

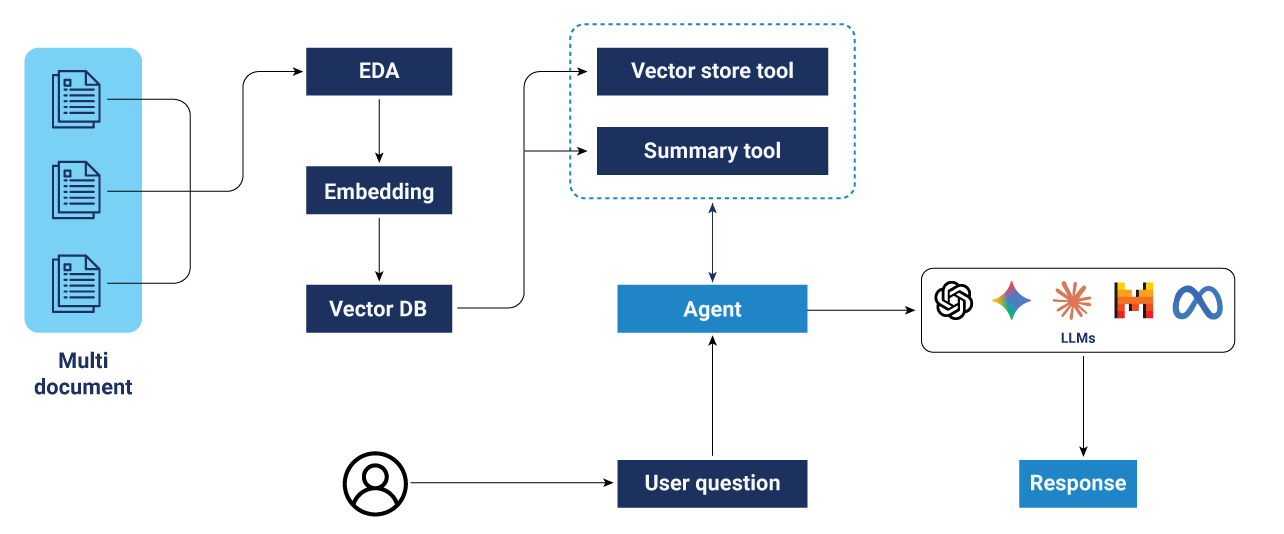

RAG can be connected via APIs or embedded into workflows using orchestration frameworks like LangChain or LlamaIndex. It can work alongside LLMs, CRMs, or analytics systems. Integration ensures seamless knowledge retrieval and generation in your current infrastructure.

Yes, LLMs can generate responses independently. However, without RAG, they rely solely on pre-trained knowledge and may produce inaccurate or outdated outputs. RAG supplements an LLM with external, real-time data.

Data for RAG can be pulled from internal databases, document repositories, CRM systems, knowledge bases, APIs, and web sources. RAG supports both structured and unstructured data, and this flexibility enables context-rich, accurate outputs.

RAG performance depends on the quality and relevance of the retrieved data. It may have higher latency due to retrieval processes. Additionally, implementing RAG requires careful configuration and maintenance to avoid errors or outdated responses.

Costs vary based on data volume, model size, API usage, and infrastructure requirements. Small-scale deployments are affordable, while enterprise-grade systems with high throughput and storage needs cost more. A consultation with a RAG development services provider can help estimate precise costs.

Go from scattered data to real-time insights in a few months

We seamlessly unify fragmented data, enabling real-time insights and measurable business outcomes within months.